The 400G data center network market is growing rapidly, as higher-throughput switching chips, faster optical connectors, and explosive data growth combine to accelerate the expansion of data center networks.

On top of that, the robust growth of 5G and the rapid rise of video-based data transmission mean that data centers must upgrade their capacity to keep pace with demand from data- and bandwidth-intensive applications. All of these factors are driving continued growth in data center traffic and in demand for high-capacity 400G data center network solutions. Because the capacity required by data-intensive applications is outstripping what current high-speed links can carry, 400G is well positioned to meet the urgent need for fiber connectivity, with relatively lower OpEx and a smaller footprint.

400G Networking Overview

Over the past few decades, Ethernet speeds have moved from 1G to 10G/40G and then to 25G/100G, and the Ethernet industry continues to innovate toward higher speeds. Before covering what 400G networking involves, it helps to understand the different ways the term 400G is defined and used in data center networking.

400G: The terms 100G and 400G refer to the rate of an interface, i.e., the total transmission capacity of a single port, which may be carried over several SerDes lanes. The term is often used informally to describe the transmission rate of a single interface and is sometimes used interchangeably with 400GbE and 400Gb/s.

400GbE: refers to 400 Gigabit Ethernet, the next-generation rate that can be carried over a single Ethernet link. It is defined by the IEEE 802.3bs standard, which specifies the physical layer, management parameters, and media access control (MAC) parameters that 400GbE must meet.

400Gb/s: refers to the speed of data transmission and usually describes the maximum transmission rate supported by a device port, or the transmission rate of an optical connector.

How 400G Networking Works

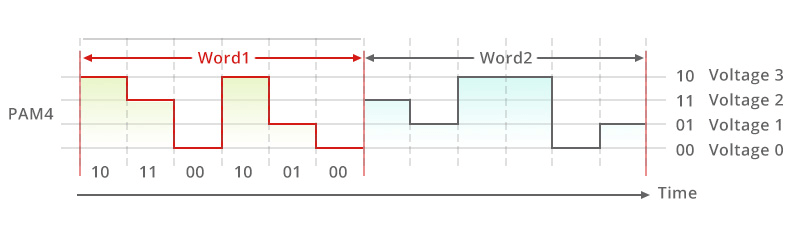

Many readers will be familiar with 100G networks, which carry four NRZ-modulated 25G signals over a single interface to achieve 100G transmission. Ethernet rates are usually increased in two ways: by raising the per-lane rate or by adding lanes. 400G does both: PAM4 modulation doubles the per-lane rate from 25G to 50G, and the QSFP-DD form factor doubles the lane count from four to eight, so eight 50G signals together deliver 400G transmission.
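The lane arithmetic described above can be checked with a short Python sketch. It assumes nominal rates only (FEC and line-coding overhead are ignored, so real signaling rates are slightly higher), and the function names are hypothetical, chosen for illustration.

```python
import math

NRZ, PAM4 = 2, 4  # number of amplitude levels per modulation format

def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol of a modulation with `levels` amplitude levels."""
    return int(math.log2(levels))

def lane_rate_gbps(symbol_rate_gbd: float, levels: int) -> float:
    """Nominal per-lane rate: symbol rate times bits per symbol (no FEC/coding overhead)."""
    return symbol_rate_gbd * bits_per_symbol(levels)

def interface_rate_gbps(lanes: int, symbol_rate_gbd: float, levels: int) -> float:
    """Aggregate interface rate: number of lanes times the per-lane rate."""
    return lanes * lane_rate_gbps(symbol_rate_gbd, levels)

# 100G: four lanes of 25G NRZ (25 GBd x 1 bit per symbol)
print(interface_rate_gbps(4, 25, NRZ))   # 100
# 400G: eight lanes of 50G PAM4 (25 GBd x 2 bits per symbol)
print(interface_rate_gbps(8, 25, PAM4))  # 400
```

Keeping the symbol rate fixed makes the two levers explicit: PAM4 doubles the bits per symbol, and QSFP-DD doubles the lane count.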

PAM4 Transmission Principle
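To make the PAM4 principle concrete, the following sketch maps a bit stream onto four amplitude levels, two bits per symbol. It assumes Gray coding (adjacent levels differ by a single bit, a common choice for PAM4) and uses illustrative levels of -3, -1, +1, and +3; the function names are hypothetical.

```python
# Gray-coded PAM4 mapping: adjacent amplitude levels differ by exactly one bit,
# so a small noise-induced level error corrupts only one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
INVERSE = {level: bits for bits, level in GRAY_PAM4.items()}

def pam4_encode(bits: list[int]) -> list[int]:
    """Group bits in pairs and map each pair to one PAM4 amplitude level."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]

def pam4_decode(levels: list[int]) -> list[int]:
    """Map each amplitude level back to its two-bit group."""
    out: list[int] = []
    for level in levels:
        out.extend(INVERSE[level])
    return out

bits = [0, 0, 0, 1, 1, 1, 1, 0]
symbols = pam4_encode(bits)
print(symbols)                       # [-3, -1, 1, 3]
print(pam4_decode(symbols) == bits)  # True
```

Eight bits become four symbols here, whereas NRZ would need eight. That is why PAM4 doubles the bit rate at the same symbol rate, at the cost of closer spacing between levels and therefore a smaller noise margin.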

Advantages of 400G Network Deployment

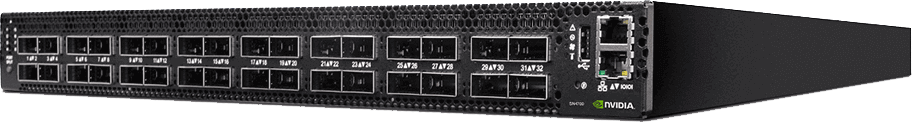

Higher transmission density: Taking 100G switches as a baseline, NVIDIA's SN2700/SN3700C 100G switches offer 32 × 100G ports per 1U, while the SN4700 400G switch fits 32 × 400G QSFP-DD ports into a 1U panel, four times the bandwidth density in the same height.
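The density comparison reduces to simple arithmetic, sketched below using the port counts quoted above; the helper name is hypothetical.

```python
def bandwidth_per_ru_tbps(ports: int, port_rate_gbps: int, rack_units: int = 1) -> float:
    """Aggregate front-panel bandwidth per rack unit, in Tb/s."""
    return ports * port_rate_gbps / rack_units / 1000

sn2700 = bandwidth_per_ru_tbps(32, 100)  # 32 x 100G in 1U
sn4700 = bandwidth_per_ru_tbps(32, 400)  # 32 x 400G QSFP-DD in 1U
print(sn2700, sn4700, sn4700 / sn2700)   # 3.2 12.8 4.0
```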

Lower transmission power consumption: On October 11, 2021, the Standardization Administration of China approved the release of Maximum Allowable Values of Energy Efficiency and Energy Efficiency Grades for Data Centers, making the reduction of transmission energy consumption a top priority for data centers. Compared with 100G, 400G consumes less energy per bit transmitted, which also supports energy conservation and environmental protection goals.
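"Energy per unit of information" is usually expressed as energy per bit. The sketch below shows the conversion; the module power figures are hypothetical placeholders chosen for illustration, not FiberMall or NVIDIA specifications, so substitute datasheet values in practice.

```python
def picojoules_per_bit(power_watts: float, rate_gbps: float) -> float:
    """Energy per transmitted bit: 1 W / (1 Gb/s) = 1 nJ/bit = 1000 pJ/bit."""
    return power_watts * 1000 / rate_gbps

# HYPOTHETICAL module power draws, for illustration only
module_100g_w = 3.5
module_400g_w = 10.0

print(picojoules_per_bit(module_100g_w, 100))  # 35.0
print(picojoules_per_bit(module_400g_w, 400))  # 25.0
```

With these placeholder numbers, the 400G module draws more power in absolute terms but less per bit, which is the sense in which 400G lowers transmission energy consumption.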

Scalability: 100G QSFP28 ports generally support two modes: 1 × 100G and 4 × 25G breakout. The NVIDIA SN4700 400G switch supports 1 × 400G, 2 × 200G, 4 × 100G, 8 × 50G, and other modes, which accommodate a wide range of network architectures.
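Breakout modes follow from dividing a port's electrical lanes evenly among logical ports. The sketch below assumes an even split and uses hypothetical names; which modes a particular switch accepts, and how many logical ports it allows in total, is platform-specific and should be checked in the vendor's documentation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BreakoutMode:
    sub_ports: int   # number of logical ports after breakout
    rate_gbps: int   # rate of each logical port
    lanes_each: int  # electrical lanes assigned to each logical port

def breakout(port_rate_gbps: int, port_lanes: int, sub_ports: int) -> BreakoutMode:
    """Split a port's lanes evenly into `sub_ports` logical ports."""
    if sub_ports < 1 or port_lanes % sub_ports:
        raise ValueError(f"{port_lanes} lanes cannot be split into {sub_ports} equal ports")
    return BreakoutMode(sub_ports, port_rate_gbps // sub_ports, port_lanes // sub_ports)

# A 400G QSFP-DD port carries eight 50G PAM4 lanes
for n in (1, 2, 4, 8):
    m = breakout(400, 8, n)
    print(f"1x400G -> {m.sub_ports}x{m.rate_gbps}G ({m.lanes_each} lane(s) each)")

# A 100G QSFP28 port carries four 25G NRZ lanes
print(breakout(100, 4, 4))  # BreakoutMode(sub_ports=4, rate_gbps=25, lanes_each=1)
```

The eight-lane QSFP-DD port is what makes the extra 2-way and 8-way splits possible; a four-lane QSFP28 port can only divide into one, two, or four logical ports.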

Port Modes Supported by NVIDIA SN4700 Switches

Trends in 400G Networking

Trend 1: QSFP-DD. QSFP-DD is the first form factor to offer a single, consistent module format for all interconnects without exception, from copper cables to long-haul fiber. The QSFP-DD Multi-Source Agreement (MSA) Group, comprising 65 organizations, has also released version 4.0 of the Common Management Interface Specification (CMIS). As 400G adoption grows, QSFP-DD will offer the smallest 400G modules on the data center market, with the highest port bandwidth density. QSFP-DD is designed to be backward compatible with QSFP-based transceivers, including widely deployed 40G/100G/200G products, and CMIS gives these modules a common management interface. With this combination of density and compatibility, QSFP-DD is well suited as the high-density, high-speed pluggable module for 400G data center networks.
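As an illustration of what a common management interface provides, the sketch below decodes the first two bytes of a module's memory map: byte 0 holds the SFF-8024 identifier, and for CMIS modules byte 1 holds the CMIS revision as major/minor nibbles. The identifier codes listed are a small subset as we understand them and should be verified against the current SFF-8024 and CMIS documents; the EEPROM bytes are hypothetical, and reading them from hardware is platform-specific and not shown.

```python
# Subset of SFF-8024 identifier codes (byte 0 of the module memory map).
# Verify against the current SFF-8024 revision before relying on these values.
IDENTIFIERS = {
    0x0D: "QSFP+",
    0x11: "QSFP28",
    0x18: "QSFP-DD",
    0x19: "OSFP",
}
CMIS_FORM_FACTORS = {0x18, 0x19}  # form factors managed through CMIS

def describe_module(eeprom: bytes) -> str:
    """Decode the identifier (byte 0) and, for CMIS modules, the revision (byte 1)."""
    ident = eeprom[0]
    form_factor = IDENTIFIERS.get(ident, f"unknown (0x{ident:02X})")
    if ident in CMIS_FORM_FACTORS:
        # CMIS stores its revision as major.minor in the upper/lower nibbles of byte 1.
        major, minor = eeprom[1] >> 4, eeprom[1] & 0x0F
        return f"{form_factor}, CMIS {major}.{minor}"
    return form_factor

# HYPOTHETICAL first two bytes read from modules
print(describe_module(bytes([0x18, 0x40])))  # QSFP-DD, CMIS 4.0
print(describe_module(bytes([0x11, 0x07])))  # QSFP28
```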

Trend 2: Remote Direct Memory Access over Converged Ethernet (RoCE). 400G data networks are key to accelerating the adoption of RoCE in the data center. RDMA enables zero-copy networking: network adapters transfer data directly to and from application memory, eliminating copies between application memory and the operating system's data buffers. The RoCEv2 protocol in particular carries RDMA over UDP/IP, so RDMA traffic can be routed across Layer 3 boundaries.
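RoCEv2's routability comes from encapsulating the InfiniBand transport header in UDP/IP. The sketch below builds a UDP header addressed to the RoCEv2 destination port (4791) and estimates per-packet header efficiency. It assumes IPv4 without options, no VLAN tag, and only the Base Transport Header (no extension headers), ignores Ethernet preamble and inter-frame gap, and uses hypothetical function names.

```python
import struct

ROCEV2_UDP_PORT = 4791  # UDP destination port used by RoCEv2

# Per-packet header/trailer bytes for RoCEv2 over IPv4 (assumptions above)
HEADERS = {
    "Ethernet": 14,
    "IPv4": 20,
    "UDP": 8,
    "IB BTH": 12,
    "ICRC": 4,
    "Ethernet FCS": 4,
}

def udp_header(src_port: int, udp_payload_len: int) -> bytes:
    """Build an 8-byte UDP header to the RoCEv2 port; checksum left as 0 for simplicity."""
    return struct.pack("!HHHH", src_port, ROCEV2_UDP_PORT, 8 + udp_payload_len, 0)

def rocev2_efficiency(payload_bytes: int) -> float:
    """Fraction of each frame that is RDMA payload."""
    return payload_bytes / (payload_bytes + sum(HEADERS.values()))

print(udp_header(49152, 1024).hex())  # c00012b704080000
for payload in (1024, 4096):
    print(f"{payload} B payload: {rocev2_efficiency(payload):.1%} efficient")
# 1024 B payload: 94.3% efficient
# 4096 B payload: 98.5% efficient
```

Because the outer headers are ordinary IP and UDP, standard Layer 3 switches can route RoCEv2 packets like any other UDP traffic.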

Trend 3: SmartNIC. SmartNICs play a key role in helping 400G networks meet the massive new bandwidth requirements of data centers. Recent SmartNICs offer two 25G/50G/100G/200G ports or a single 400G port, Ethernet connectivity with 50G PAM4 SerDes, and PCIe 4.0 host connections. We see the SmartNIC as integral to improving network programmability and accelerating the application offloads that are critical to data center efficiency.
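A NIC's host interface must keep up with its network ports. The rough check below computes per-direction PCIe bandwidth, assuming only 128b/130b line coding and ignoring transaction-layer protocol overhead (so real throughput is lower); the function name is hypothetical.

```python
# Per-lane transfer rates in GT/s; PCIe 3.0 and later use 128b/130b encoding.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

def pcie_gbps(gen: int, lanes: int) -> float:
    """Per-direction bandwidth after line encoding, before protocol overhead."""
    return PCIE_GT_PER_S[gen] * lanes * 128 / 130

for gen in (4, 5):
    bw = pcie_gbps(gen, 16)
    print(f"PCIe {gen}.0 x16: {bw:.1f} Gb/s, enough for 400G? {bw >= 400}")
# PCIe 4.0 x16: 252.1 Gb/s, enough for 400G? False
# PCIe 5.0 x16: 504.1 Gb/s, enough for 400G? True
```

Under this simple model a single PCIe 4.0 x16 link comfortably serves 2 × 100G, while driving a full 400G port at line rate calls for more host bandwidth, such as a PCIe 5.0 x16 link or multiple x16 links; the exact requirement depends on the specific NIC.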

FiberMall’s Full-Stack Network Solutions

FiberMall provides customers with full-stack network solutions informed by its understanding of high-speed networking trends and of breakthroughs in optoelectronic device technology.

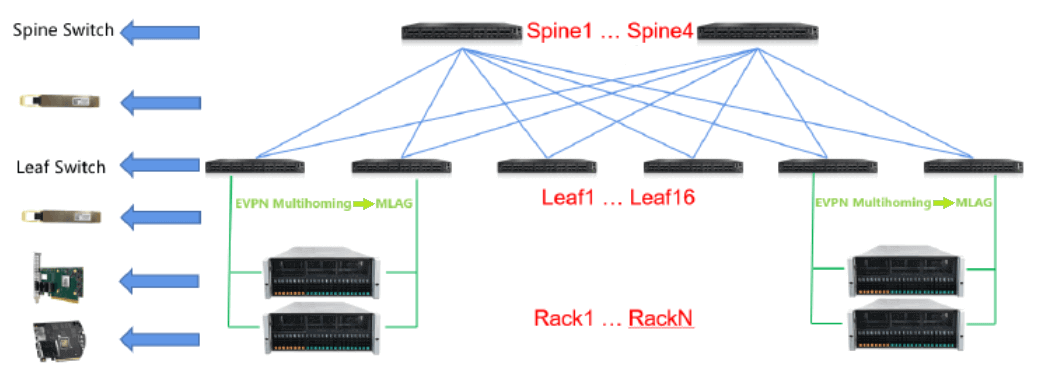

FiberMall's 400G network solution for data centers

Switches

On the switch side, FiberMall offers the SN4410 as a leaf switch and the SN4700 as a spine switch. These Spectrum-3-based SN4000-series switches support rates up to 400G, along with advanced network virtualization, high performance, single-pass VXLAN routing, IPv6/Multiprotocol Label Switching (MPLS) Segment Routing, and robust high-bandwidth data paths for RoCE-based and GPUDirect-based applications.
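VXLAN routing operates on traffic encapsulated in UDP with an 8-byte VXLAN header. The sketch below builds that header as defined in RFC 7348 and tallies the outer-header overhead for an IPv4 underlay without VLAN tags. It illustrates the packet format only, not how Spectrum-3 implements single-pass VXLAN routing in hardware; the function name is hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # UDP destination port assigned to VXLAN (RFC 7348)

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, 3 reserved bytes, 24-bit VNI, 1 reserved byte."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Byte 0 sets the I flag (0x08). Packing vni << 8 as a 32-bit big-endian word
    # places the VNI in bytes 4-6 and leaves the final reserved byte zero.
    return struct.pack("!B3xI", 0x08, vni << 8)

# Outer headers added to each tenant frame: Ethernet + IPv4 + UDP + VXLAN
VXLAN_OVERHEAD = 14 + 20 + 8 + 8

print(vxlan_header(5000).hex())  # 0800000000138800
print(VXLAN_OVERHEAD)            # 50
```

The 50 bytes of added headers are why VXLAN underlays are typically configured with a larger MTU than the tenant networks they carry.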

NVIDIA Switch

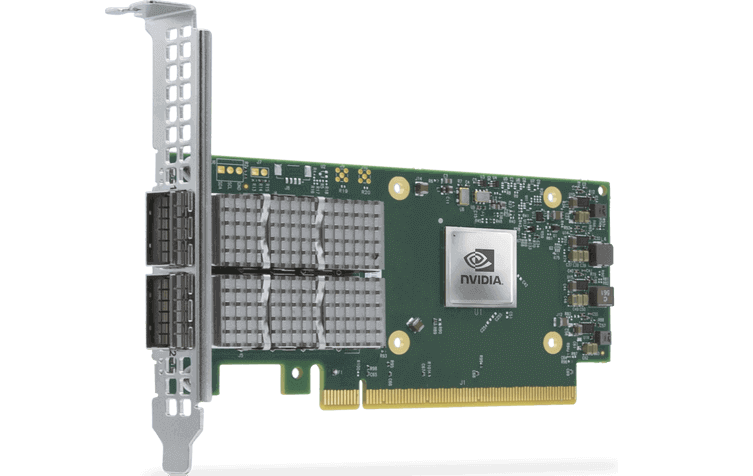

SmartNIC

On the NIC side, FiberMall offers NVIDIA ConnectX SmartNICs featuring NVIDIA ASAP2 (Accelerated Switch and Packet Processing®) technology, which accelerates network performance while reducing the CPU load of processing Internet Protocol (IP) packets, freeing more processor cycles for applications. With unmatched RoCE performance, ConnectX SmartNICs deliver efficient, high-performance remote direct memory access (RDMA) services for bandwidth- and latency-sensitive applications. Also available is the NVIDIA BlueField DPU, which combines the power of ConnectX with programmable Arm cores and additional hardware offloads to accelerate software-defined storage, networking, and security workloads.

NVIDIA SmartNIC

Optical Module

For server connections, FiberMall's 200G QSFP-DD to 2x100G QSFP28 NRZ DAC breakout cable lets a single SN4410 connect 48 × 100G NIC ports; for switch connections, FiberMall's 400G QSFP-DD SR8 optical module can be used to link two SN4700s.
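The cabling plan can be sized with a little arithmetic. The sketch below counts the breakout cables needed for 48 × 100G NIC ports and computes a leaf switch's oversubscription ratio. The uplink count of 8 × 400G is a hypothetical value chosen for illustration, not a FiberMall recommendation or an SN4410 specification, and the function names are hypothetical.

```python
import math

def breakout_cables_needed(nic_ports: int, ports_per_cable: int = 2) -> int:
    """Breakout cables (and switch-side QSFP-DD ports) needed for the NIC ports."""
    return math.ceil(nic_ports / ports_per_cable)

def oversubscription(downlinks: int, down_gbps: int, uplinks: int, up_gbps: int) -> float:
    """Ratio of server-facing bandwidth to spine-facing bandwidth on a leaf switch."""
    return (downlinks * down_gbps) / (uplinks * up_gbps)

# 48 x 100G NIC ports via 200G QSFP-DD to 2x100G QSFP28 breakout cables
print(breakout_cables_needed(48))         # 24

# HYPOTHETICAL uplinks: 8 x 400G SR8 links toward the spine
print(oversubscription(48, 100, 8, 400))  # 1.5
```

A ratio of 1.0 means the leaf can forward all server traffic to the spine at line rate simultaneously; higher values trade some worst-case bandwidth for fewer uplink optics.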

FiberMall is a leading provider of optical networking solutions and an Elite Partner of NVIDIA networking products, joining hands with NVIDIA to achieve a strong combination of optical connectivity, networking products, and solutions.

Related Products:

- QSFP-DD-400G-FR4 400G QSFP-DD FR4 PAM4 CWDM4 2km LC SMF FEC Optical Transceiver Module ($500.00)
- QSFP-DD-400G-XDR4 400G QSFP-DD XDR4 PAM4 1310nm 2km MTP/MPO-12 SMF FEC Optical Transceiver Module ($580.00)
- QSFP-DD-400G-LR4 400G QSFP-DD LR4 PAM4 CWDM4 10km LC SMF FEC Optical Transceiver Module ($600.00)
- QSFP-DD-400G-PLR4 400G QSFP-DD PLR4 PAM4 1310nm 10km MTP/MPO-12 SMF FEC Optical Transceiver Module ($1000.00)
- QSFP-DD-400G-ER4 400G QSFP-DD ER4 PAM4 LWDM4 40km LC SMF without FEC Optical Transceiver Module ($3500.00)
- QSFP-DD-400G-LR8 400G QSFP-DD LR8 PAM4 LWDM8 10km LC SMF FEC Optical Transceiver Module ($2500.00)
- QSFP-DD-400G-ER8 400G QSFP-DD ER8 PAM4 LWDM8 40km LC SMF FEC Optical Transceiver Module ($3800.00)
- QSFP-DD-400G-SR8 400G QSFP-DD SR8 PAM4 850nm 100m MTP/MPO OM3 FEC Optical Transceiver Module ($149.00)
- QSFP-DD-400G-DR4 400G QSFP-DD DR4 PAM4 1310nm 500m MTP/MPO SMF FEC Optical Transceiver Module ($400.00)