In the lead-up to the 2025 Hot Chips conference, NVIDIA officially unveiled Spectrum-XGS Ethernet. Built on network optimization algorithms, it introduces “scale-across” capability, overcoming the physical power and space limits of a single data center. It connects multiple data centers in different cities and countries into a unified “AI super factory,” providing the underlying infrastructure for larger-scale AI workloads, especially agentic AI.

From Scale-Up/Out to Scale-Across: The Inevitable Choice for Spectrum-XGS

Current AI data centers face two core bottlenecks in scaling, and traditional scale-up and scale-out models are struggling to meet giga-scale AI demands:

- Scale-Up Limitations: Achieved by upgrading individual systems or racks (e.g., adding GPUs or raising per-device performance), but constrained by the power and cooling capacity of the facility, such as its water-cooling infrastructure. Existing data centers have physical thresholds for power input and heat dissipation, so compute density per rack or per data center cannot grow indefinitely.

- Scale-Out Limitations: Achieved by adding racks and servers to grow the cluster, but limited by the physical space of a single site, which puts a hard cap on how much equipment it can hold.

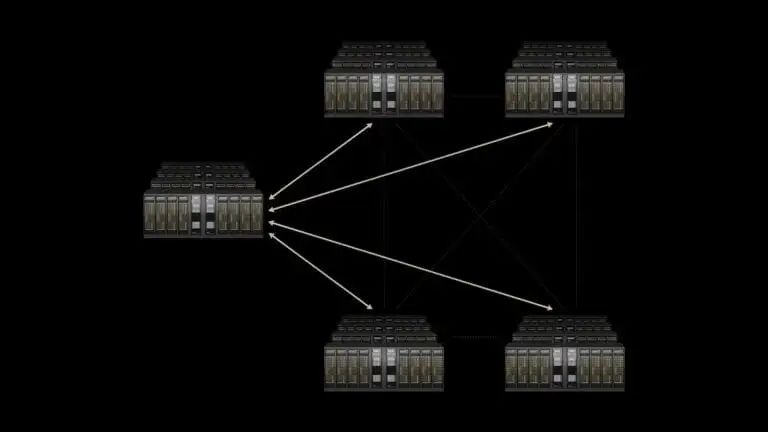

To overcome this dilemma, NVIDIA proposes a new dimension, “scale-across”: optimizing network communication between geographically dispersed data centers so that distributed AI clusters operate as one. NVIDIA founder and CEO Jensen Huang describes this cross-regional AI super factory as key infrastructure for the AI industrial revolution, with Spectrum-XGS as its core technology enabler.
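The capacity argument above comes down to simple arithmetic. A single site is capped by whichever limit it reaches first, power or floor space, while scale-across adds the capacity of several sites together. The Python sketch below makes that explicit. The site names, power budgets, rack power, and GPUs-per-rack figures are hypothetical values chosen for illustration. They are not NVIDIA or industry data.

```python
from dataclasses import dataclass
from typing import List

# All figures below are hypothetical, for illustration only.
RACK_POWER_KW = 120.0  # assumed power draw of one dense GPU rack
GPUS_PER_RACK = 72     # assumed number of GPUs per rack


@dataclass
class Site:
    name: str
    power_budget_kw: float  # usable IT power at the site
    rack_slots: int         # physical rack positions on the floor


def max_racks(site: Site) -> int:
    """A single site is capped by whichever limit binds first: power or space."""
    by_power = int(site.power_budget_kw // RACK_POWER_KW)
    return min(by_power, site.rack_slots)


def site_gpus(site: Site) -> int:
    return max_racks(site) * GPUS_PER_RACK


def scale_across_gpus(sites: List[Site]) -> int:
    """Scale-across grows capacity by linking sites rather than enlarging one."""
    return sum(site_gpus(s) for s in sites)


sites = [
    Site("site-a", power_budget_kw=50_000, rack_slots=500),  # power-limited
    Site("site-b", power_budget_kw=30_000, rack_slots=200),  # space-limited
]
for s in sites:
    print(s.name, site_gpus(s), "GPUs")
print("combined:", scale_across_gpus(sites), "GPUs")
```

With these assumed numbers, site-a runs out of power first (29952 GPUs) and site-b runs out of floor space first (14400 GPUs). Adding hardware within either site cannot lift its limit, but linking the two yields 44352 GPUs.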

Core Technologies of Spectrum-XGS

Spectrum-XGS is not an entirely new hardware platform but an evolution of NVIDIA’s existing Spectrum-X Ethernet platform. Spectrum-X launched in 2023 and is built on Spectrum-4 SN5600 switches and BlueField-3 DPUs. Since then it has delivered what NVIDIA rates as 1.6x higher generative AI network performance than traditional Ethernet, and it has become the mainstream choice for AI data centers using NVIDIA GPUs. The breakthrough in Spectrum-XGS lies in three algorithmic innovations, combined with hardware synergies. Together they address the communication latency, congestion, and synchronization challenges of cross-regional GPU clusters.

1. Core Algorithms: Dynamic Adaptation to Long-Distance Network Characteristics

At the core of Spectrum-XGS is a set of “distance-aware network optimization algorithms.” They analyze key parameters of cross-data-center communication in real time (distance, traffic patterns, congestion levels, and performance metrics) and dynamically adjust network policies:

Distance-Adaptive Congestion Control: Traditional Ethernet treats all connections uniformly. Spectrum-XGS instead automatically adjusts its congestion-control thresholds to the actual distance between data centers, currently supporting deployments spanning up to hundreds of kilometers. This avoids packet loss and queue buildup on long-distance links.
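The bandwidth-delay product (BDP) shows why a congestion threshold tuned for one distance fails at another. The BDP is the amount of data that must be in flight to keep a link busy. It grows linearly with round-trip time, and round-trip time grows with fiber length. The sketch below makes two assumptions. First, light travels at about two-thirds of c in glass, which gives roughly 5 µs of one-way propagation delay per kilometer of fiber. Second, it counts propagation delay only. It illustrates the underlying physics, not NVIDIA’s actual congestion-control algorithm.

```python
FIBER_DELAY_US_PER_KM = 5.0  # approx. one-way propagation delay in silica fiber


def rtt_us(distance_km: float) -> float:
    """Round-trip propagation delay; ignores switching and queuing delay."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM


def bdp_bytes(link_gbps: float, distance_km: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    bits_in_flight = link_gbps * 1e9 * rtt_us(distance_km) * 1e-6
    return bits_in_flight / 8


# 800 Gb/s link: inside one hall, across a metro, and between cities
for km in (0.1, 10, 100):
    print(f"{km:>6} km  RTT {rtt_us(km):8.1f} us  BDP {bdp_bytes(800, km) / 1e6:8.1f} MB")
```

At 800 Gb/s, the data in flight rises from about 0.1 MB inside a building to about 100 MB over 100 km, a thousandfold increase. Thresholds and windows sized for intra-data-center distances therefore cannot simply be reused on long-haul links.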

Precise Latency Management: Fine-grained, per-packet adaptive routing minimizes the latency jitter that packet retransmissions cause in traditional networks. Jitter is a critical hazard in AI clusters. If a single GPU lags because of delay, every collaborating GPU must wait for it, which directly reduces overall performance.
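A toy model illustrates the jitter argument. A synchronous collective finishes only when its most heavily loaded path drains, so the slowest path sets the pace for every GPU. The sketch compares two routing schemes:

- Per-flow routing: every packet of a flow follows one path. The sketch uses `flow_id % n_paths` as a deterministic stand-in for an ECMP hash.
- Per-packet adaptive routing: each packet goes to whichever path is currently least loaded.

Path loads are counted in packets, and the flow sizes are hypothetical. Real switches use live queue-depth information rather than this idealized global view.

```python
from typing import List


def per_flow_loads(flows: List[int], n_paths: int) -> List[int]:
    """Per-flow routing: every packet of flow i follows path i % n_paths,
    a deterministic stand-in for an ECMP hash."""
    loads = [0] * n_paths
    for flow_id, packets in enumerate(flows):
        loads[flow_id % n_paths] += packets
    return loads


def per_packet_adaptive_loads(flows: List[int], n_paths: int) -> List[int]:
    """Per-packet adaptive routing: each packet takes the least-loaded path."""
    loads = [0] * n_paths
    for packets in flows:
        for _ in range(packets):
            least = min(range(n_paths), key=loads.__getitem__)
            loads[least] += 1
    return loads


def step_time(loads: List[int]) -> int:
    """A synchronous step ends when the busiest path drains: the straggler sets the pace."""
    return max(loads)


flows = [100] * 5  # five equal flows (in packets) sharing four paths
for name, loads in (("per-flow", per_flow_loads(flows, 4)),
                    ("per-packet", per_packet_adaptive_loads(flows, 4))):
    print(f"{name:>10}: loads={loads} step_time={step_time(loads)}")
```

With five equal flows on four paths, per-flow hashing puts two flows on one path and the step takes 200 packet-times. Per-packet spraying balances the load at 125. The trade-off is that packets of one flow can now arrive out of order, so the receiving endpoint must tolerate reordering.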

End-to-End Telemetry: Spectrum-XGS collects performance data in real time along the full path, from GPUs through switches to cross-data-center links. This gives the algorithms millisecond-level feedback, so network behavior keeps pace with the demands of AI workloads.
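A telemetry-driven feedback loop can be sketched as two pieces:

- A smoothed baseline of recent round-trip-time samples, here an exponentially weighted moving average with gain 0.125 (the value TCP uses for its smoothed RTT in RFC 6298).
- A policy that reacts when a new sample rises well above that baseline, here a simple additive-increase/multiplicative-decrease rate rule.

The class, function, thresholds, and step sizes are all hypothetical. They are not NVIDIA’s telemetry or control logic.

```python
from typing import Optional


class RttBaseline:
    """Exponentially weighted moving average of RTT samples (hypothetical helper)."""

    def __init__(self, gain: float = 0.125):
        self.gain = gain
        self.srtt_us: Optional[float] = None

    def update(self, sample_us: float) -> float:
        if self.srtt_us is None:
            self.srtt_us = sample_us  # first sample seeds the baseline
        else:
            self.srtt_us = (1 - self.gain) * self.srtt_us + self.gain * sample_us
        return self.srtt_us


def adjust_rate(rate_gbps: float, sample_us: float, baseline_us: float,
                max_gbps: float, step_gbps: float = 10.0,
                backoff: float = 0.8, margin: float = 1.2) -> float:
    """AIMD-style rule: back off multiplicatively when RTT jumps well above
    the baseline (a congestion signal), otherwise probe upward additively."""
    if sample_us > margin * baseline_us:
        return rate_gbps * backoff
    return min(rate_gbps + step_gbps, max_gbps)


baseline = RttBaseline()
rate = 400.0
for sample in (100, 102, 98, 160, 101):  # RTT samples in microseconds
    ref = baseline.srtt_us if baseline.srtt_us is not None else sample
    rate = adjust_rate(rate, sample, ref, max_gbps=800)
    baseline.update(sample)
    print(f"rtt={sample} us -> rate={rate:.0f} Gb/s")
```

The 160 µs spike is flagged because it exceeds 1.2 times the smoothed baseline, so the rate backs off. The following normal sample lets the rate climb again.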

2. Hardware Synergies: Leveraging the Spectrum-X Ecosystem’s High-Bandwidth Foundation

Spectrum-XGS achieves optimal performance when combined with specific NVIDIA hardware:

Spectrum-X Switches: As the underlying network backbone, providing high port density and low-latency forwarding.

ConnectX-8 SuperNIC: 800 Gb/s AI-dedicated network adapter for high-speed data transfer between GPUs and switches.

Blackwell Architecture Hardware: Such as B200 GPUs and GB10 superchips, which NVIDIA says are deeply integrated with Spectrum-XGS to reduce end-to-end latency.

NVIDIA reports results from NCCL (NVIDIA Collective Communications Library) benchmarks. In these tests, Spectrum-XGS improves communication performance between GPUs in different data centers by 1.9x while keeping end-to-end latency at about 200 milliseconds. Users perceive that level as responsive and lag-free, and it meets the real-time requirements of AI inference.
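The textbook alpha-beta cost model for a ring all-reduce shows why long-haul latency matters even on very fast links. The model uses four quantities:

- p ranks
- a message of n bytes
- per-hop latency α
- per-byte transfer time β

A ring all-reduce then costs roughly 2(p-1)·α + 2(p-1)/p · n·β. Each of the 2(p-1) ring steps waits for its slowest hop, so a single long-haul link adds its latency to every step. The sketch assumes 800 Gb/s links (the ConnectX-8 figure above). It also assumes illustrative latencies of 5 µs inside a data center and 0.5 ms one way across roughly 100 km. It does not model NCCL’s actual algorithm selection or reproduce NVIDIA’s 1.9x measurement.

```python
def ring_allreduce_seconds(n_ranks: int, msg_bytes: float,
                           link_gbps: float, alpha_s: float) -> float:
    """Alpha-beta cost of a ring all-reduce (reduce-scatter + all-gather):
    2(p-1) latency-bound steps, each moving 1/p of the message per link."""
    p = n_ranks
    beta_s_per_byte = 8 / (link_gbps * 1e9)
    latency_term = 2 * (p - 1) * alpha_s
    bandwidth_term = 2 * (p - 1) / p * msg_bytes * beta_s_per_byte
    return latency_term + bandwidth_term


ALPHA_INTRA_DC = 5e-6    # assumed per-hop latency inside one data center
ALPHA_CROSS_DC = 0.5e-3  # assumed one-way latency over ~100 km of fiber

for size in (1e6, 1e9):  # 1 MB and 1 GB gradients
    t_local = ring_allreduce_seconds(8, size, 800, ALPHA_INTRA_DC)
    t_remote = ring_allreduce_seconds(8, size, 800, ALPHA_CROSS_DC)
    print(f"{size / 1e6:>6.0f} MB: {t_local * 1e3:7.3f} ms local, {t_remote * 1e3:7.3f} ms cross-DC")
```

For a 1 MB message the latency term dominates: about 0.09 ms locally versus about 7 ms across sites. For a 1 GB message the bandwidth term dominates, and the cross-site penalty shrinks to roughly 40% (17.57 ms versus 24.5 ms). This suggests why cross-data-center optimization targets latency and jitter, not only raw bandwidth.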

Full-Stack Optimization for AI Training and Inference Efficiency with Spectrum-XGS

Spectrum-XGS is not an isolated technology but a key addition to NVIDIA’s full-stack AI ecosystem. Alongside this release, NVIDIA also announced software-level performance enhancements that complement Spectrum-XGS, rounding out hardware, algorithm, and software co-optimization:

- Dynamo Software Upgrade: Optimized for Blackwell architecture (e.g., B200 systems) to boost AI model inference performance by up to 4x, significantly reducing compute consumption for large model inference.

- Speculative Decoding Technology: A small draft model proposes several upcoming tokens, and the main AI model verifies them together in a single pass. The main model therefore runs fewer sequential decoding steps, which NVIDIA says raises inference performance by an additional 35%. The technique is especially suited to conversational inference with large language models (LLMs).
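The draft-then-verify idea behind speculative decoding can be shown with toy integer “models.” The greedy variant sketched here works in three steps:

1. The draft model proposes k tokens.
2. The main (target) model checks all of them.
3. The longest agreeing prefix is accepted, and the target’s own token is appended at the first disagreement.

As a result, the output is identical to decoding with the target alone. Real systems verify all positions in one batched forward pass, which is where the speedup comes from. The toy loop calls the target once per position but counts one pass per verification round. Both models are hypothetical stand-ins. Sampled (non-greedy) decoding uses a probabilistic accept/reject rule instead of exact matching.

```python
from typing import Callable, List, Tuple

# A "model" maps a token prefix to its greedy (argmax) next token.
Model = Callable[[List[int]], int]


def target_model(seq: List[int]) -> int:
    """Hypothetical expensive main model."""
    return (seq[-1] + 3) % 10


def draft_model(seq: List[int]) -> int:
    """Hypothetical cheap draft model: agrees with the target except after token 7."""
    return 1 if seq[-1] == 7 else (seq[-1] + 3) % 10


def greedy_decode(target: Model, prompt: List[int], n_new: int) -> List[int]:
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(target(seq))  # one sequential target step per token
    return seq


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n_new: int, k: int = 3) -> Tuple[List[int], int]:
    seq = list(prompt)
    target_passes = 0
    while len(seq) - len(prompt) < n_new:
        # 1. The draft proposes k tokens autoregressively (cheap).
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target scores all k+1 positions; a real system does this
        #    in a single batched forward pass, so count it once.
        target_passes += 1
        verified = [target(seq + proposal[:i]) for i in range(k + 1)]
        # 3. Accept the longest agreeing prefix, then the target's own token.
        n_ok = 0
        while n_ok < k and proposal[n_ok] == verified[n_ok]:
            n_ok += 1
        seq += proposal[:n_ok] + [verified[n_ok]]
    return seq[: len(prompt) + n_new], target_passes


out, passes = speculative_decode(target_model, draft_model, [0], n_new=12)
print(out, passes)
```

Here 12 new tokens take 4 verification passes of the target instead of 12 sequential steps, and the output matches plain greedy decoding exactly. The real-world gain depends on how often the draft agrees with the target. The 35% figure above is NVIDIA’s and is not something this toy reproduces.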

Dave Salvator, director of accelerated computing products at NVIDIA, said the core goal of these optimizations is to scale ambitious agentic AI applications. According to NVIDIA, the combination of Spectrum-XGS and its software ecosystem delivers predictable performance for workloads ranging from training trillion-parameter models to serving AI inference to millions of simultaneous users.

Early Applications and Industry Impact of Spectrum-XGS

First Users: CoreWeave Pioneers Cross-Domain AI Super Factory

GPU cloud service provider CoreWeave is among the first adopters of Spectrum-XGS. Co-founder and CTO Peter Salanki said the technology will give CoreWeave’s customers access to giga-scale AI capabilities and accelerate breakthroughs across industries. Such infrastructure could support ultra-large-scale AI projects like the Stargate initiative from Oracle, SoftBank, and OpenAI.

Industry Trends: Ethernet Replacing InfiniBand as AI Network Mainstream

Although InfiniBand held about 80% of the AI back-end network market in 2023, the industry is rapidly shifting to Ethernet. NVIDIA’s choice to build Spectrum-XGS on Ethernet aligns with this trend:

Compatibility and Cost Advantages: Ethernet is the universal standard for global data centers, more familiar to network engineers, and cheaper to deploy than InfiniBand.

Market Scale Projections: Dell’Oro Group projects that the Ethernet data center switch market will reach nearly $80 billion over the next five years.

NVIDIA’s Own Growth: 650 Group reports name NVIDIA the “fastest-growing vendor” in the 2024 data center switch market. NVIDIA’s networking revenue reached $5 billion in its fiscal first quarter of 2026 (ended April 27, 2025), up 56% year over year.

The launch of Spectrum-XGS extends NVIDIA’s full-stack strategy in AI infrastructure while creating new competitive dynamics:

- NVIDIA’s Full-Stack Layout: From GPUs (Blackwell), interconnects (NVLink/NVLink Switch), networks (Spectrum-X/Spectrum-XGS, Quantum-X InfiniBand) to software (CUDA, TensorRT-LLM, NIM microservices), NVIDIA has formed a closed loop covering “compute-connect-software” for AI infrastructure. Spectrum-XGS synergizes with NVLink for three-level scaling: intra-rack (NVLink), intra-data-center (Spectrum-X), and cross-data-center (Spectrum-XGS).

- Competitors’ Responses: Broadcom’s earlier Scale-Up Ethernet (SUE) framework shares similar goals with Spectrum-XGS: optimizing Ethernet performance to close the gap with InfiniBand. Vendors such as Arista, Cisco, and Marvell are also accelerating development of AI-dedicated Ethernet switches, with competition centering on performance, cost, and ecosystem compatibility.
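The three-tier hierarchy described above amounts to a simple rule for which fabric carries traffic between two GPUs. The sketch below encodes that rule. The `GpuLocation` type and the site and rack labels are hypothetical. In practice, topology-aware communication libraries and schedulers handle this choice rather than a single function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuLocation:
    site: str  # data center (hypothetical labels)
    rack: str  # rack within that data center


def fabric_between(a: GpuLocation, b: GpuLocation) -> str:
    """Return the scaling tier that would carry traffic between two GPUs."""
    if a.site != b.site:
        return "Spectrum-XGS"  # scale-across: between data centers
    if a.rack != b.rack:
        return "Spectrum-X"    # scale-out: between racks in one data center
    return "NVLink"            # scale-up: within a rack


g0 = GpuLocation("dc-east", "rack-01")
print(fabric_between(g0, GpuLocation("dc-east", "rack-01")))  # NVLink
print(fabric_between(g0, GpuLocation("dc-east", "rack-17")))  # Spectrum-X
print(fabric_between(g0, GpuLocation("dc-west", "rack-01")))  # Spectrum-XGS
```

The site check comes first, so identically named racks in different data centers are still routed over Spectrum-XGS.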

The core value of Spectrum-XGS lies in pushing AI data center scaling from “single-site constraints” to “cross-regional collaboration.” As power and land become hard limits for single data centers, cross-city and cross-country AI super factories will become the core form supporting next-generation AI applications (e.g., artificial general intelligence and large-scale agent clusters).

Gilad Shainer, NVIDIA’s senior vice president of networking, put it this way in his Hot Chips preview: “Cross-data-center physical fiber networks have long existed, but software algorithms like Spectrum-XGS are the key to unlocking the true performance of this physical infrastructure.”
