- Casey

John Doe

Answered on 2:41 am

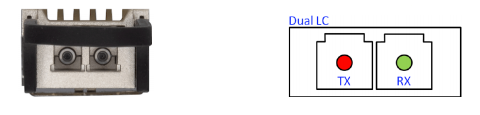

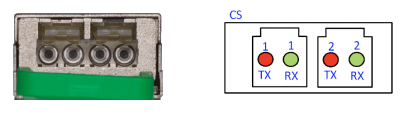

400G-FR4 / 400G-LR4: These transceivers use duplex LC connectors. They use WDM (wavelength division multiplexing) to carry four wavelengths on each fiber, so a link needs two fibers instead of the eight that a four-lane parallel design requires.

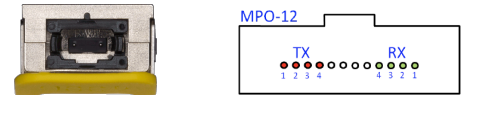

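400G-DR4 / 400G-XDR4 / 400G-PLR4: These transceivers use an MPO-12 connector, since the DR4 standard splits the 400Gbps signal into four parallel 100Gbps channels. Each channel has its own transmit and receive fiber, so eight of the twelve fiber positions are used.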

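400G-BIDI (400G-SRBD): Lower-speed bi-directional transceivers, such as 40G and 100G BiDi, typically use duplex LC connectors because they transmit and receive on different wavelengths over the same fiber. The 400G version, also known as 400G-SR4.2, applies the same technique across eight multimode fibers and therefore uses an MPO-12 connector.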

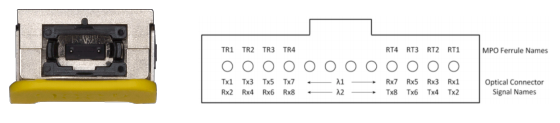

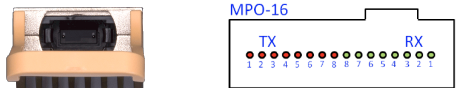

400G-SR8: This transceiver uses an MPO-16 connector, as the SR8 standard splits the 400Gbps signal into eight parallel 50Gbps channels, each with its own transmit and receive fiber.

400G-2FR4: This transceiver combines two independent 200G-FR4 links in one module. Each link multiplexes four 50Gbps wavelengths onto one duplex fiber pair, so the module needs two duplex connectors, typically CS connectors rather than a single duplex LC.
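The fiber counts behind several of these connector choices follow a simple rule: each parallel optical lane needs one transmit fiber and one receive fiber. The sketch below is only an illustration of that arithmetic, not a vendor tool. The names `Optic`, `OPTICS`, `fibers_used`, and `describe` are hypothetical, the connector values are copied from the answer above, and 400G-BIDI and 400G-2FR4 are left out to keep the rule simple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Optic:
    name: str
    parallel_lanes: int  # lanes carried on separate fibers, per direction
    connector: str       # connector named in the answer above


# WDM types (FR4, LR4) put all wavelengths on one fiber per direction,
# so they count as a single parallel lane here.
OPTICS = {
    "400G-FR4": Optic("400G-FR4", 1, "duplex LC"),
    "400G-LR4": Optic("400G-LR4", 1, "duplex LC"),
    "400G-DR4": Optic("400G-DR4", 4, "MPO-12"),
    "400G-XDR4": Optic("400G-XDR4", 4, "MPO-12"),
    "400G-PLR4": Optic("400G-PLR4", 4, "MPO-12"),
    "400G-SR8": Optic("400G-SR8", 8, "MPO-16"),
}


def fibers_used(optic: Optic) -> int:
    """One transmit fiber plus one receive fiber per parallel lane."""
    return optic.parallel_lanes * 2


def describe(name: str) -> str:
    """Return a one-line summary for a transceiver type in OPTICS."""
    optic = OPTICS[name]
    return f"{optic.name}: {optic.connector}, {fibers_used(optic)} fibers in use"


if __name__ == "__main__":
    for name in OPTICS:
        print(describe(name))
```

Under this rule, a DR4 link occupies 8 fibers, which fit in an MPO-12, an SR8 link occupies 16 fibers, which need an MPO-16, and an FR4 or LR4 link needs only the 2 fibers of a duplex LC.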
