FiberMall has estimated the demand for AI infrastructure, including optical transceivers, that ChatGPT creates.

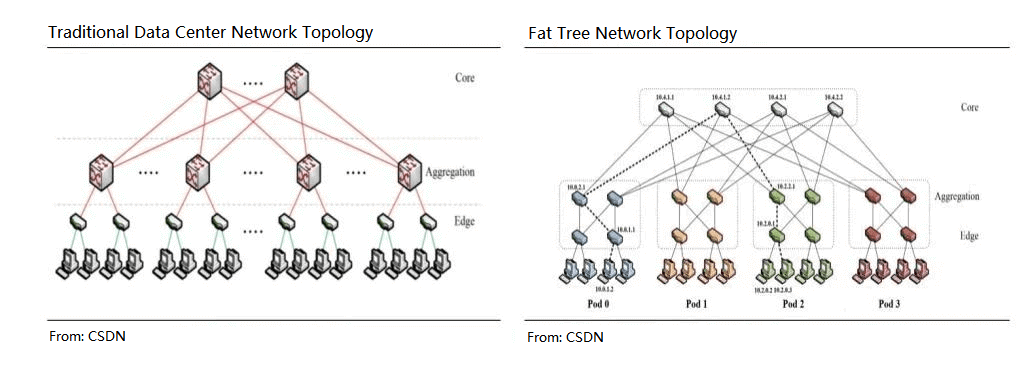

Unlike a traditional data center, the InfiniBand fat-tree topology common in AI clusters uses more switches, and each node has the same number of upstream and downstream ports.

One of the basic units of the AI cluster model used by NVIDIA is the SuperPOD.

A standard SuperPOD is built with 140 DGX A100 GPU servers, HDR InfiniBand 200G NICs, and 170 NVIDIA Quantum QM8790 switches, each with 40 ports at 200G.

In the NVIDIA solution, a SuperPOD has 170 switches with 40 ports each. The simplest approach is to interconnect 70 servers each, and since every cable occupies two ports, the corresponding cable requirement is 40 × 170 / 2 = 3400. Allowing for actual deployment conditions, this rises to about 4000 cables. Of these, the ratio of copper cable : AOC : optical module is 4:4:2. Each optical link needs a transceiver at both ends, so the number of optical transceivers required is 4000 × 0.2 × 2 = 1600. For one SuperPOD, the ratio of server : switch : optical module usage is therefore 140 : 170 : 1600 ≈ 1 : 1.2 : 11.4.
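The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch: the function name `superpod_estimate` and its parameters are hypothetical, and the 4000-cable allowance and the 4:4:2 split are the article's assumptions rather than measured values.

```python
def superpod_estimate(servers=140, switches=170, ports_per_switch=40,
                      deployed_cables=4000, split=(4, 4, 2)):
    """Estimate cables and optical transceivers for one SuperPOD."""
    # Every cable occupies two switch ports.
    min_cables = switches * ports_per_switch // 2

    # Share of links that use optical modules (last term of copper:AOC:optical).
    optical_links = deployed_cables * split[2] // sum(split)

    # Each optical link needs a transceiver at both ends.
    transceivers = optical_links * 2

    # Normalize to one server, rounded to one decimal place.
    ratio = (1.0,
             round(switches / servers, 1),
             round(transceivers / servers, 1))
    return min_cables, transceivers, ratio


min_cables, transceivers, ratio = superpod_estimate()
print(min_cables, transceivers, ratio)
```

Changing `deployed_cables` or `split` shows how sensitive the transceiver count is to the assumed cabling mix.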

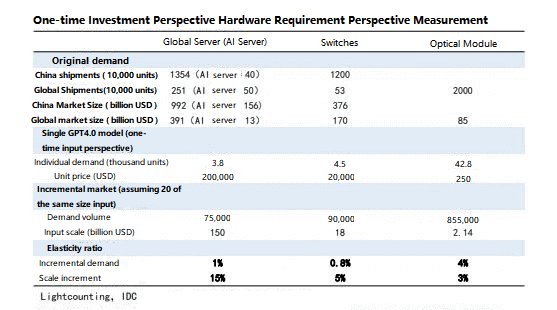

A deployment comparable to the entry-level requirement for GPT-4.0 needs approximately 3750 NVIDIA DGX A100 servers. The optical transceiver requirements under this condition are listed in the following table.
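As a rough cross-check, the per-SuperPOD ratio of 140 servers : 170 switches : 1600 optical transceivers can be scaled to 3750 servers. The sketch below assumes demand grows linearly with server count, which is an illustrative simplification (larger fabrics may need extra switching tiers); the function name `scale_from_superpod` is hypothetical.

```python
def scale_from_superpod(target_servers,
                        pod_servers=140, pod_switches=170,
                        pod_transceivers=1600):
    """Linearly scale SuperPOD switch and transceiver counts to a server count."""
    # Assumption: switches and transceivers grow in proportion to servers.
    factor = target_servers / pod_servers
    switches = round(pod_switches * factor)
    transceivers = round(pod_transceivers * factor)
    return switches, transceivers


switches, transceivers = scale_from_superpod(3750)
print(f"servers=3750 switches={switches} transceivers={transceivers}")
```

Under this linear assumption, 3750 servers correspond to roughly 27 SuperPODs' worth of equipment.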

According to IDC, the global AI server market was $15.6 billion in 2021 and is expected to reach $35.5 billion by 2026. China's AI server market was $6.4 billion in 2021. IDC data also indicates that 200/400G port shipments are expected to grow rapidly in data center scenarios, with a compound annual growth rate of 62% from 2022 to 2026. Global switch port shipments are expected to exceed 870 million in 2026, with a market size of over $44 billion.
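For comparison with the 62% port-shipment figure, the growth rate implied by the AI server market numbers can be derived from the two endpoints. A minimal sketch, assuming the standard compound annual growth rate formula and a five-year span from 2021 to 2026; the helper name `cagr` is hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Global AI server market: $15.6B (2021) to $35.5B (2026), per the IDC figures.
server_market_cagr = cagr(15.6, 35.5, 2026 - 2021)
print(f"{server_market_cagr:.1%}")
```

This puts the implied growth of the AI server market at a little under 18% per year, well below the quoted growth rate for 200/400G ports.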

FiberMall extrapolates the demand for servers, switches, and optical transceivers from the AI data center architecture. This extrapolation uses the 4:4:2 ratio of copper cable to AOC to optical module. However, optical module usage in a data center ultimately depends on traffic demand. The 4:4:2 ratio probably holds only at full capacity, so the actual service traffic inside AI data centers today still deserves in-depth study.

Related Products:

- QSFP-DD-200G-ER4 200G QSFP-DD ER4 PAM4 LWDM4 40km LC SMF FEC Optical Transceiver Module: $1500.00
- QSFP-DD-200G-CWDM4-10 2X100G QSFP-DD CWDM4 10km Dual CS SMF Optical Transceiver Module: $1300.00
- QSFP-DD-200G-CWDM4 2X100G QSFP-DD CWDM4 2km CS SMF Optical Transceiver Module: $1100.00
- QSFP-DD-200G-SR8 2x 100G QSFP-DD SR8 850nm 70m/100m OM3/OM4 MPO-16(APC) MMF Optical Transceiver Module: $350.00
- QSFP-DD-400G-ER4 400G QSFP-DD ER4 PAM4 LWDM4 40km LC SMF without FEC Optical Transceiver Module: $3500.00
- QSFP-DD-400G-LR4 400G QSFP-DD LR4 PAM4 CWDM4 10km LC SMF FEC Optical Transceiver Module: $600.00
- QSFP-DD-400G-FR4 400G QSFP-DD FR4 PAM4 CWDM4 2km LC SMF FEC Optical Transceiver Module: $500.00
- QSFP-DD-400G-DR4 400G QSFP-DD DR4 PAM4 1310nm 500m MTP/MPO SMF FEC Optical Transceiver Module: $400.00
- QSFP-DD-400G-SR8 400G QSFP-DD SR8 PAM4 850nm 100m MTP/MPO OM3 FEC Optical Transceiver Module: $149.00
- QSFP-DD-200G-LR4 200G QSFP-DD LR4 PAM4 LWDM4 10km LC SMF FEC Optical Transceiver Module: $1000.00