Data Center Interconnection (DCI) is a network solution that interconnects multiple data centers. Data centers are an important infrastructure for digital transformation, and with the rise of cloud computing, big data, and artificial intelligence, enterprises rely on them more and more. A growing number of organizations deploy multiple data centers in different regions to support cross-regional operations, user access, and remote disaster recovery. These data centers then need to be interconnected.

What is a data center?

As industrial digital transformation continues, data has become a key production factor. Data centers, which are responsible for computing, storing, and forwarding data, are the most critical digital infrastructure in the new infrastructure. A modern data center mainly includes the following core components:

- A computing system, including general computing modules for deploying services and high-performance computing modules that provide supercomputing power.

- A storage system, including mass storage modules, data management engines, and dedicated storage networks.

- An energy system, including power supply, temperature control, and IT management.

- A data center network, which connects the general computing, high-performance computing, and storage modules within the data center. All data exchanged between them passes through this network.

Schematic diagram of the composition of the data center

Among these, the general computing module directly carries user services, and its basic physical unit is a large number of servers. If servers are the body of the data center, the data center network is its soul.

Why do we need data center interconnection?

Many organizations and enterprises now build their own data centers, but a single data center can rarely meet current business needs. This creates an urgent need to interconnect multiple data centers, mainly for the following reasons.

- Rapid growth in business scale

Emerging services such as cloud computing and artificial intelligence are developing rapidly, and the number of applications that depend heavily on data centers is growing with them. As a result, the volume of business carried by data centers is increasing quickly, and the resources of a single data center soon become insufficient.

A single data center cannot expand indefinitely, because construction is constrained by factors such as land use and energy supply. Once the business grows to a certain scale, multiple data centers must be built in the same city or in different locations. These data centers then need to be interconnected so that they can cooperate in supporting the business.

In addition, as the economy goes digital, companies in the same industry and across industries frequently need to share data and cooperate at the data level, which also requires interconnection between the data centers of different companies.

- Cross-regional user access is increasingly common

In recent years, the core business of data centers has shifted from web services to cloud services and data services, and the user base of an organization or enterprise is no longer limited to one region. With the mobile Internet now widespread, users expect high-quality services anytime and anywhere. To meet these expectations and improve the user experience, enterprises with sufficient resources usually build multiple data centers in different regions so that users everywhere can access a nearby site. This requires that services can be deployed across data centers, which in turn requires interconnection between them.

Cross-regional user access

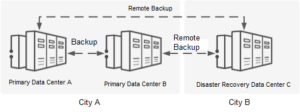

- Rigid requirements for remote backup and disaster recovery

People’s daily work depends more and more on application systems, whose continuity relies on the stable operation of the data center. At the same time, data security, business reliability, and business continuity receive more and more attention, so backup and disaster recovery have become rigid requirements.

Data centers operate in an uncertain environment and face potential threats such as natural disasters, man-made attacks, and unexpected accidents. Deploying multiple data centers in different locations has gradually become an industry-recognized way to improve business continuity and robustness as well as data reliability and availability. Before backup and disaster recovery solutions can be deployed between different data centers, the data centers must first be interconnected.

Remote backup and disaster recovery

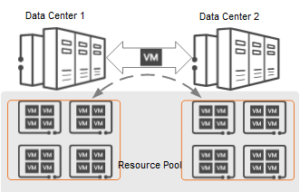

- Trends in data center virtualization and resource pooling

As the cloud computing business model matures, applications and traditional IT services are moving to the cloud, and cloud services are becoming a new value center. The transition from traditional data centers to cloud-based data centers has therefore become a mainstream trend. Virtualization and resource pooling are key features of cloud-based data centers. The core idea is to change the smallest functional unit of a data center from a physical host to a VM (Virtual Machine).

VMs are independent of physical location, and their resource usage can scale flexibly. They can migrate freely across servers and data centers, which allows resources within and across data centers to be integrated into a unified resource pool and greatly improves the flexibility and efficiency of resource utilization. Interconnection between data centers is a prerequisite for VM migration across data centers, so it is also an important step toward data center virtualization and resource pooling.

Virtualization and resource pooling

What are the options for data center interconnection?

To better meet the needs of cloud-based data centers, many data center network products have emerged, such as Huawei data center switches (CloudEngine series), Huawei data center controllers (iMaster NCE-Fabric), and intelligent network analysis platforms (iMaster NCE-FabricInsight). Two recommended data center interconnection solutions are described below.

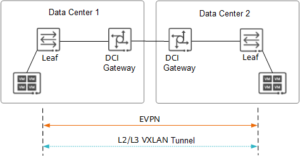

- End-to-end VXLAN solution

In data center interconnection based on end-to-end VXLAN tunnels, the computing and network resources of multiple data centers form a unified resource pool that is centrally managed by one cloud platform and one iMaster NCE-Fabric system. The data centers form a single end-to-end VXLAN domain, and users’ VPCs (Virtual Private Clouds) and subnets can be deployed across data centers, enabling direct service interoperability. The deployment architecture is shown in the following figure.

Schematic diagram of the end-to-end VXLAN solution architecture

In this solution, end-to-end VXLAN tunnels need to be established between the data centers. As shown in the figure below, the underlay routes between the data centers must first be reachable from each other. Then, at the overlay level, EVPN must be deployed between the Leaf devices of the two data centers. The Leaf devices at both ends discover each other through EVPN and exchange VXLAN encapsulation information in EVPN routes, which triggers the establishment of an end-to-end VXLAN tunnel.

Schematic diagram of an end-to-end VXLAN tunnel
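The tunnel establishment described above can be illustrated with a small sketch. It is a deliberate simplification, not how any controller or switch implements EVPN: it assumes that every Leaf advertises its VTEP address and VNIs to every other Leaf, and that two Leafs get a direct tunnel whenever they share at least one VNI. The `Leaf` type, the `end_to_end_tunnels` function, and all addresses and VNIs are hypothetical names chosen for illustration.

```python
from itertools import combinations
from typing import NamedTuple


class Leaf(NamedTuple):
    name: str
    dc: str             # data center the leaf belongs to
    vtep_ip: str        # tunnel endpoint address, reachable via the underlay
    vnis: frozenset     # VNIs configured on this leaf


def end_to_end_tunnels(leaves):
    """Return the VTEP pairs that would get a direct VXLAN tunnel.

    Assumption: a tunnel is set up between any two leaves that share a VNI,
    regardless of which data center they are in.
    """
    tunnels = set()
    for a, b in combinations(leaves, 2):
        if a.vnis & b.vnis:
            tunnels.add(tuple(sorted((a.vtep_ip, b.vtep_ip))))
    return tunnels


leaves = [
    Leaf("leaf1", "DC-A", "10.1.1.1", frozenset({5000, 5001})),
    Leaf("leaf2", "DC-A", "10.1.1.2", frozenset({5001})),
    Leaf("leaf3", "DC-B", "10.2.1.1", frozenset({5000})),
]
tunnels = end_to_end_tunnels(leaves)
for pair in sorted(tunnels):
    print(pair)
```

In this example, leaf1 in DC-A and leaf3 in DC-B share VNI 5000, so the sketch yields a tunnel that crosses the data center boundary directly, which is the defining property of the end-to-end approach.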

This solution is mainly used in Multi-PoD scenarios. A PoD (Point of Delivery) is a set of relatively independent physical resources. Multi-PoD means that one iMaster NCE-Fabric system manages multiple PoDs, which together form an end-to-end VXLAN domain. This scenario suits the interconnection of multiple small data centers that are close to each other in the same city.

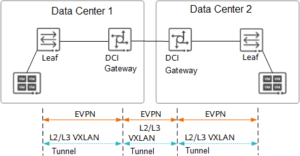

- Segment VXLAN solution

In data center interconnection based on Segment VXLAN tunnels, the computing and network resources of each data center form an independent resource pool that is managed by its own cloud platform and iMaster NCE-Fabric. Each data center is an independent VXLAN domain, and a separate DCI VXLAN domain must be established between data centers to achieve interoperability. Users’ VPCs and subnets are deployed within their own data centers, so service interoperability between data centers must be orchestrated by a higher-level cloud management platform. The deployment architecture is shown in the following figure.

Segment VXLAN solution architecture diagram

In this solution, VXLAN tunnels must be established both within and between data centers. As shown in the figure below, the underlay routes between the data centers must first be reachable from each other. Then, at the overlay level, EVPN must be deployed between the Leaf devices and the DCI gateways within each data center, as well as between the DCI gateways of different data centers. The devices discover each other through EVPN and exchange VXLAN encapsulation information in EVPN routes, which triggers the establishment of Segment VXLAN tunnels.

Segment VXLAN tunnel diagram
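As a rough illustration of the segmented design, the sketch below lists the tunnel segments that traffic would traverse between two Leaf devices. It assumes one DCI gateway per data center and represents every device only by its VTEP address. The function `segment_path`, the `gateway_of` mapping, and all addresses are hypothetical.

```python
def segment_path(src, dst, gateway_of):
    """Return the list of VXLAN tunnel segments between two leaves.

    src and dst are (data_center, leaf_vtep_ip) tuples.
    gateway_of maps a data center name to its DCI gateway VTEP address
    (assumption: exactly one gateway per data center).
    """
    src_dc, src_leaf = src
    dst_dc, dst_leaf = dst
    if src_dc == dst_dc:
        # Same VXLAN domain: a single tunnel inside the data center.
        return [(src_leaf, dst_leaf)]
    src_gw, dst_gw = gateway_of[src_dc], gateway_of[dst_dc]
    return [
        (src_leaf, src_gw),  # tunnel inside the source data center
        (src_gw, dst_gw),    # tunnel in the DCI VXLAN domain
        (dst_gw, dst_leaf),  # tunnel inside the destination data center
    ]


gateway_of = {"DC-A": "10.1.0.254", "DC-B": "10.2.0.254"}
path = segment_path(("DC-A", "10.1.1.1"), ("DC-B", "10.2.1.1"), gateway_of)
for hop in path:
    print(hop)
```

Traffic between data centers crosses three tunnels instead of one, but each Leaf only needs to know its local gateway, which keeps the VXLAN domains independent.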

This solution is mainly used in Multi-Site scenarios. It suits the interconnection of data centers located in different regions, or of data centers that are too far apart to be managed by the same iMaster NCE-Fabric system.

What key technologies are required for DCI?

VXLAN is a tunneling technology that overlays a Layer 2 virtual network on any network with IP reachability. VXLAN gateways provide intercommunication within the VXLAN network as well as with traditional non-VXLAN networks. VXLAN extends the Layer 2 network with MAC-in-UDP encapsulation: the original Ethernet frame is encapsulated in a UDP/IP packet and forwarded through the network by IP routing. Intermediate devices do not need to learn the MAC addresses of VMs. In addition, an IP routed network places no restrictions on network structure and scales well, so VM migration is not limited by the network architecture.

EVPN is a next-generation full-service bearer VPN solution. EVPN unifies the control plane of various VPN services and uses BGP extensions to advertise Layer 2 or Layer 3 reachability information, separating the forwarding plane from the control plane. As data center networks have evolved, EVPN and VXLAN have gradually been integrated.

VXLAN adopts EVPN as its control plane, which makes up for its original lack of one. EVPN in turn uses VXLAN as its public network tunnel, which allows EVPN to be used more widely in scenarios such as data center interconnection.
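To make the encapsulation concrete, here is a minimal sketch of the 8-byte VXLAN header defined in RFC 7348: an 8-bit flags field with the VNI-valid bit set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits. The outer Ethernet, IP, and UDP headers are omitted, and the function names are illustrative rather than part of any library.

```python
import struct

VXLAN_UDP_PORT = 4789         # IANA-assigned UDP destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08   # the "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags (8 bits) + reserved (24 bits)
    # Word 1: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vxlan_header(header: bytes) -> int:
    """Return the VNI carried in a VXLAN header."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return word1 >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an Ethernet frame with a VXLAN header (UDP payload only)."""
    return build_vxlan_header(vni) + inner_frame


header = build_vxlan_header(5000)
print(header.hex())
print(parse_vxlan_header(header))
```

The result of `encapsulate` would be carried as the payload of a UDP datagram addressed to port 4789 on the remote VTEP. The 24-bit VNI allows roughly 16 million segments, compared with 4094 usable VLAN IDs.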

FiberMall 200G QSFP56 ER4 for metro DCI is under development

In the context of 5G, bandwidth, transmission distance, and cost control are the main concerns of data center and metro optical transmission network users. FiberMall is developing the 200G QSFP56 ER4 optical module to meet this market demand. It adopts the mainstream 4-channel WDM optical engine architecture and integrates 4-channel cooled EML lasers and APD photodetectors. It supports the 200GE rate (4 × 53 Gbps) and the OTN standard, and is suitable for 200G metro DCI long-distance interconnection and 5G backhaul.
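The "4 × 53 Gbps" figure can be related to the 200GE payload rate with a short calculation. The sketch assumes the standard 200GBASE-R coding from IEEE 802.3 (256b/257b transcoding followed by RS(544,514) forward error correction) and PAM4 signaling at 2 bits per symbol. The article does not state these overheads, so they are assumptions here.

```python
from fractions import Fraction

MAC_RATE_GBPS = 200                 # 200GE payload rate
TRANSCODE = Fraction(257, 256)      # 256b/257b transcoding overhead (assumed)
RS_FEC = Fraction(544, 514)         # RS(544,514) FEC overhead (assumed)
LANES = 4                           # four optical lanes
BITS_PER_PAM4_SYMBOL = 2

line_rate = MAC_RATE_GBPS * TRANSCODE * RS_FEC   # aggregate line rate in Gb/s
per_lane = line_rate / LANES                     # bit rate per lane in Gb/s
baud = per_lane / BITS_PER_PAM4_SYMBOL           # symbol rate per lane in GBd

print(float(line_rate), float(per_lane), float(baud))
```

Under these assumptions each lane runs at 53.125 Gb/s, which is the value usually rounded to 53 Gbps.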

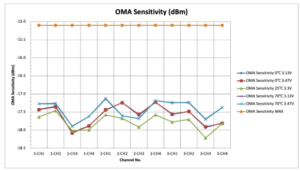

FiberMall’s 200G QSFP56 ER4 optical module consumes 6.4 W at room temperature and less than 7.5 W across the three test temperatures, which makes it energy efficient. The module complies with the QSFP56 MSA and the IEEE 802.3cn 200GBASE-ER4 Ethernet standard, with receiver OMA sensitivity better than -17 dBm. Thanks to a DSP-based 50G PAM4 CDR, it fully supports a transmission distance of 40 km over duplex single-mode fiber.

The excellent performance of the product is as follows:

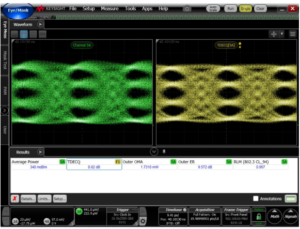

Optical eye diagram

Passing the 40 km fiber test at a high temperature of 70℃ and the FEC margin performance test

200G QSFP56 ER4 OMA sensitivity

FiberMall 200G product line:

- Active optical cable series:

200G QSFP56 AOC

200G QSFP-DD AOC

- 4-channel optical module:

200G QSFP56 SR4

200G QSFP56 DR4

200G QSFP56 FR4

200G QSFP56 LR4

FiberMall QSFP56 200G SR4
