- Mia

- August 29, 2023

- 2:15 am

Harry Collins

Answered at 2:15 am

The ConnectX-7 (CX7) network card can interconnect with other 400G Ethernet switches that support RDMA over Converged Ethernet (RoCE), but you need to pay attention to the following points:

- The switch and the network card need cables and transceivers that match their port form factors, such as OSFP or QSFP112.

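- The switch and the network card need to run at the same rate, such as 400 Gb/s, and use compatible MTUs. The switch MTU should be at least as large as the NIC's, and large enough to carry a 4 KB (4096-byte) RoCE MTU plus packet headers, which in practice usually means jumbo frames.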

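- The switch and the network card need matching RoCE v2 settings: the same priority for RoCE traffic, priority flow control (PFC) enabled on that priority, and compatible congestion control (for example, ECN marking on the switch so the NIC's DCQCN can react).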

- The network must be stable and reliable. Packet loss forces retransmissions, which cause significant performance degradation for RoCE traffic.
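The checklist above can be sketched as a small consistency check. This is a minimal illustration, not an NVIDIA or switch-vendor tool: `PortConfig`, `check_link`, and `roce_active_mtu` are hypothetical names, and the 96-byte header allowance used to derive the RoCE MTU from the Ethernet MTU is an assumption (it approximates the IP/GRH, UDP, BTH, extended-header, and ICRC overhead). Real values must be read from the NIC and switch configuration.

```python
from dataclasses import dataclass, field

# Valid RoCE (IB) path MTUs, largest first.
ROCE_MTUS = (4096, 2048, 1024, 512, 256)

# ASSUMPTION: header allowance subtracted from the Ethernet MTU
# (GRH/IP 40 + UDP 8 + BTH 12 + extended headers 32 + ICRC 4 = 96 bytes).
ROCE_HEADER_ALLOWANCE = 96


def roce_active_mtu(eth_mtu: int) -> int:
    """Return the largest RoCE MTU whose payload plus headers fits in eth_mtu."""
    usable = eth_mtu - ROCE_HEADER_ALLOWANCE
    for mtu in ROCE_MTUS:
        if mtu <= usable:
            return mtu
    raise ValueError(f"Ethernet MTU {eth_mtu} is too small for RoCE")


@dataclass
class PortConfig:
    """Hypothetical summary of one side of the link (NIC port or switch port)."""
    speed_gbps: int
    eth_mtu: int
    roce_priority: int                      # priority carrying RoCE traffic
    pfc_priorities: set[int] = field(default_factory=set)
    ecn_enabled: bool = False


def check_link(nic: PortConfig, switch: PortConfig) -> list[str]:
    """List mismatches between NIC and switch; an empty list means none found."""
    problems = []
    if nic.speed_gbps != switch.speed_gbps:
        problems.append(
            f"speed mismatch: NIC {nic.speed_gbps}G vs switch {switch.speed_gbps}G")
    if switch.eth_mtu < nic.eth_mtu:
        problems.append(
            f"switch MTU {switch.eth_mtu} is smaller than NIC MTU {nic.eth_mtu}")
    if nic.roce_priority != switch.roce_priority:
        problems.append(
            f"RoCE priority mismatch: NIC {nic.roce_priority} "
            f"vs switch {switch.roce_priority}")
    # PFC must be enabled on the RoCE priority on both ends of the link.
    for side, cfg in (("NIC", nic), ("switch", switch)):
        if cfg.roce_priority not in cfg.pfc_priorities:
            problems.append(
                f"PFC not enabled on RoCE priority {cfg.roce_priority} ({side})")
    # Without ECN marking on the switch, DCQCN on the NIC gets no congestion signal.
    if not switch.ecn_enabled:
        problems.append("ECN marking disabled on the switch")
    return problems


if __name__ == "__main__":
    nic = PortConfig(400, 9000, roce_priority=3, pfc_priorities={3}, ecn_enabled=True)
    switch = PortConfig(400, 9216, roce_priority=3, pfc_priorities={3}, ecn_enabled=True)
    print(roce_active_mtu(nic.eth_mtu))  # 4096
    print(check_link(nic, switch))       # []
```

With a standard 1500-byte Ethernet MTU this sketch yields a RoCE MTU of only 1024, which is why jumbo frames are usually configured when a 4 KB RoCE MTU is wanted.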

People Also Ask

Related Articles

800G SR8 and 400G SR4 Optical Transceiver Modules Compatibility and Interconnection Test Report

Version Change Log Writer V0 Sample Test Cassie Test Purpose Test Objects:800G OSFP SR8/400G OSFP SR4/400G Q112 SR4. By conducting corresponding tests, the test parameters meet the relevant industry standards,

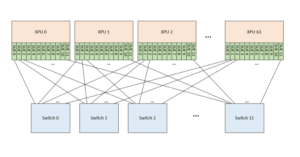

AI Compute Clusters: Powering the Future

In recent years, the global rise of artificial intelligence (AI) has captured widespread attention across society. A common point of discussion surrounding AI is the concept of compute clusters—one of

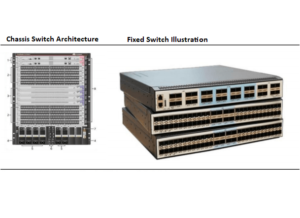

Data Center Switches: Current Landscape and Future Trends

As artificial intelligence (AI) drives exponential growth in data volumes and model complexity, distributed computing leverages interconnected nodes to accelerate training processes. Data center switches play a pivotal role in

Comprehensive Guide to 100G BIDI QSFP28 Simplex LC SMF Transceivers

The demand for high-speed, cost-effective, and fiber-efficient optical transceivers has surged with the growth of data centers, telecommunications, and 5G networks. The 100G BIDI QSFP28 (Bidirectional Quad Small Form-Factor Pluggable

NVIDIA SN5600: The Ultimate Ethernet Switch for AI and Cloud Data Centers

The NVIDIA SN5600 is a cutting-edge, high-performance Ethernet switch designed to meet the demanding needs of modern data centers, particularly those focused on artificial intelligence (AI), high-performance computing (HPC), and

How Ethernet Outpaces InfiniBand in AI Networking

Ethernet Challenges InfiniBand’s Dominance InfiniBand dominated high-performance networking in the early days of generative AI due to its superior speed and low latency. However, Ethernet has made significant strides, leveraging

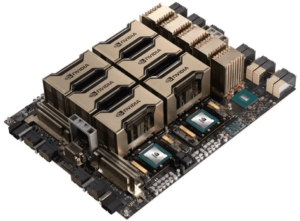

Understanding NVIDIA’s Product Ecosystem and Naming Conventions

Compute Chips—V100, A100, H100, B200, etc. These terms are among the most commonly encountered in discussions about artificial intelligence. They refer to AI compute cards, specifically GPU models. NVIDIA releases

Related posts:

- If the Server’s Module is OSFP and the Switch’s is QSFP112, can it be Linked by Cables to Connect Data?

- What FEC is Required When the 400G-BIDI is Configured for Each of the Three Operating Modes?

- What Type of Optical Connectors do the 400G-FR4/LR4, 400G-DR4/XDR4/PLR4, 400G-BIDI (400G SRBD), 400G-SR8 and 400G-2FR4 Transceivers Use?

- What is the 100G-SRBD (or “BIDI”) Transceiver?