Two Key Design Principles for GPU Cluster Networks

- 1:1 Bandwidth Convergence Ratio: This is the foundation of a lossless network. It means that aggregate bandwidth is never reduced at any layer between server access and the network core. For instance, if the uplinks of all access switches total 100Tbps, the aggregation and core layers must each provide at least 100Tbps of switching capacity to carry that traffic. This prevents the congestion points that arise when multiple lower-speed ports are merged into a single higher-speed port with less total capacity.

- Consistency in Access-to-Core Port Ratios: This is one of the specific methods used to achieve 1:1 convergence. It is crucial to understand the specific definition of “Core Ports” in this context.
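The first principle can be checked mechanically: every layer above the access switches must offer at least as much capacity as the access uplinks deliver. The following is a minimal sketch of that check, not a planning tool. The function name `find_bottlenecks`, the layer names, and the 80Tbps core figure are hypothetical, chosen only to show a violation of the 100Tbps example above.

```python
def find_bottlenecks(layer_capacity_tbps, offered_tbps):
    """Return the layers whose switching capacity is below the offered load."""
    return [name for name, capacity in layer_capacity_tbps.items()
            if capacity < offered_tbps]

# Hypothetical fabric: access uplinks total 100 Tbps, but the core
# layer was provisioned with only 80 Tbps (illustrative values).
layers = {"aggregation": 100.0, "core": 80.0}
print(find_bottlenecks(layers, offered_tbps=100.0))  # ['core']
```

An empty list means no layer reduces bandwidth, which is the 1:1 condition described above.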

The Definition of Core Ports

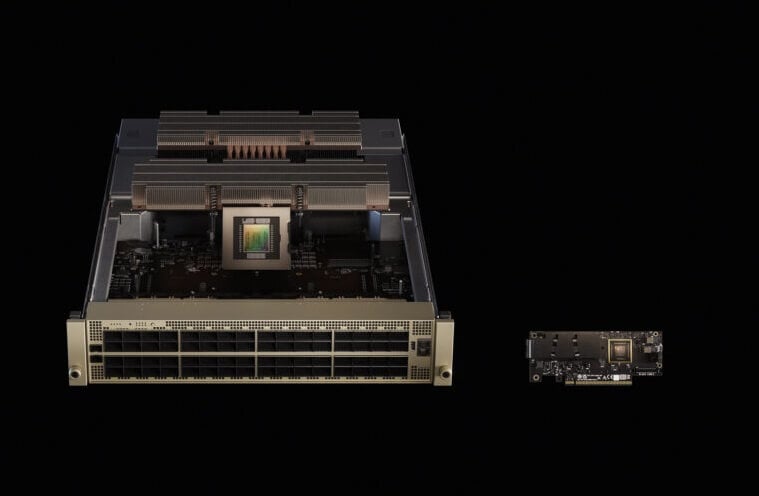

In a typical Spine-Leaf (CLOS) network architecture for intelligent computing centers:

- Access Layer (Leaf Layer): Switches directly connected to GPU servers via high-speed Network Interface Cards (NICs).

- Core Layer (Spine Layer): Switches acting as the traffic hub, responsible for interconnecting all Leaf switches.

- “Core Ports”: Generally refers to the uplink ports on the Leaf switches used to connect to the Spine switches.

Consistent Access-to-Core Port Ratios

The number and bandwidth of the “downlink ports” (used to connect servers) on a Leaf switch should maintain a fixed and sufficient ratio relative to the “uplink ports” (core ports) used to connect to Spine switches. The target is 1:1, or as close to it as the hardware allows.

Case Study: Core Port Ratios

Scenario: Assume a Leaf switch has 64 downlink ports, each with a rate of 400Gbps, used to connect 64 GPU servers.

Requirement: To achieve a 1:1 convergence ratio (no oversubscription), the Leaf switch needs as much uplink bandwidth toward the Spine layer as it has downlink bandwidth toward the servers.

Ideal Setup: If each uplink (core) port is also 400Gbps, the switch would need at least 64 uplink ports to ensure non-blocking traffic for all 64 downlink ports simultaneously.
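The port count in this setup follows from dividing total downlink bandwidth by the speed of one uplink port. A minimal sketch of that arithmetic is shown here. The function name `uplink_ports_needed` and its `target_ratio` parameter are illustrative assumptions, and the 800Gbps uplink in the second call is a hypothetical variation that does not appear in the scenario.

```python
import math

def uplink_ports_needed(downlink_ports, downlink_gbps, uplink_gbps, target_ratio=1.0):
    """Uplink ports required so that downlink:uplink bandwidth <= target_ratio."""
    total_downlink = downlink_ports * downlink_gbps
    required_uplink = total_downlink / target_ratio
    # Round up: a fractional port still requires a whole physical port.
    return math.ceil(required_uplink / uplink_gbps)

# The scenario above: 64 x 400G downlinks with 400G uplinks.
print(uplink_ports_needed(64, 400, 400))  # 64
# Hypothetical variation: the same downlinks with 800G uplinks.
print(uplink_ports_needed(64, 400, 800))  # 32
```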

Common Practice: Because of limits on switching capacity and port density, real deployments often accept a higher convergence ratio, meaning more downlink than uplink bandwidth. For example:

Configuration: 48 x 400G downlink ports + 32 x 400G uplink ports.

Result: Total Downlink = 48 x 400Gbps = 19.2Tbps; Total Uplink = 32 x 400Gbps = 12.8Tbps. This gives a convergence ratio of 1.5:1, which is not 1:1.
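The ratio for any port layout can be computed the same way. The sketch below reproduces the numbers for the 48 + 32 port configuration. The helper name `convergence_ratio` is hypothetical, and `Fraction` is used only to keep the result exact.

```python
from fractions import Fraction

def convergence_ratio(down_ports, down_gbps, up_ports, up_gbps):
    """Total downlink bandwidth divided by total uplink bandwidth."""
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)

ratio = convergence_ratio(48, 400, 32, 400)
print(f"{float(ratio)}:1")  # 1.5:1
```

A value of 1 indicates a non-blocking Leaf; anything above 1 means the uplinks can be oversubscribed when all servers send toward the Spine layer at once.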

High-Requirement Design: To get closer to 1:1, designers may use higher-capacity switches or a “Dual-Plane” design, doubling the number of Spine switches to provide more uplink paths.

Key Takeaway: Leaf switch uplink (core) ports must be calculated and reserved from the servers' bandwidth requirements, not fitted in afterward. In top-tier intelligent computing networks, the ratio should be as close to 1:1 as possible so that no bottleneck appears under maximum traffic load.

Importance to GPU Clusters

AI training (especially for Large Language Models) has unique traffic patterns:

- All-to-All Communication: Distributed training involves collective communication (like All-Reduce) across all GPUs, generating massive “East-West” traffic that can instantly saturate the network.

- High Burstiness: Traffic arrives in bursts during the communication phases that alternate with computation.

- Sensitivity to Latency and Packet Loss: Even minor congestion or packet loss causes the entire cluster to wait, idling expensive GPU resources and drastically reducing training efficiency.

If the core port ratio is insufficient, Leaf switch uplinks become congestion points during All-to-All communication, leading to:

- Increased latency due to buffering.

- TCP retransmissions or RoCE congestion control triggers.

- PFC backpressure and performance jitter.
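To put a rough number on the effect, the sketch below estimates the bandwidth each server can sustain when every server on a Leaf sends through the uplinks at the same time. It rests on simplifying assumptions: all traffic leaves the Leaf, the uplinks are shared evenly, and load-balancing imbalance and protocol overhead are ignored. The function name is hypothetical.

```python
def worst_case_per_server_gbps(down_ports, down_gbps, up_ports, up_gbps):
    """Fair share of uplink bandwidth per server, capped at the server's port speed."""
    total_uplink = up_ports * up_gbps
    fair_share = total_uplink / down_ports
    return min(down_gbps, fair_share)

# 48 x 400G downlinks sharing 32 x 400G uplinks (a 1.5:1 layout).
print(round(worst_case_per_server_gbps(48, 400, 32, 400), 1))  # 266.7
# 64 x 400G downlinks with 64 x 400G uplinks (1:1).
print(worst_case_per_server_gbps(64, 400, 64, 400))  # 400.0
```

Under these assumptions, a 1.5:1 layout leaves each 400Gbps server with roughly two thirds of its line rate during a fully cross-Leaf exchange; the excess is what has to be buffered, paused, or dropped.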

Summary

- Core Ports: Specifically refers to the uplink ports on Leaf switches connecting to Spine switches.

- Ratio Consistency: Network designs must give Leaf switches enough high-bandwidth uplink ports to match their total downlink bandwidth. This supports the full-bandwidth, any-to-any traffic of AI training and is a precondition for a lossless, non-blocking network. It is one of the most distinctive differences between intelligent computing centers and traditional data centers.

Related Products:

- NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module, $650.00

- NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module, $650.00

- NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module, $900.00

- NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module, $1199.00

- NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module, $1200.00

- NVIDIA MCP7Y00-N001 Compatible 1m (3ft) 800Gb Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Direct Attach Copper Cable, $160.00

- NVIDIA MCA7J60-N004 Compatible 4m (13ft) 800G Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Active Copper Cable, $800.00

- NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module, $550.00

- NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module, $700.00

- NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module, $550.00

- NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module, $850.00

- NVIDIA MCP7Y70-H001 Compatible 1m (3ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable, $120.00