The Nvidia DGX GH200 marks a major step forward for AI supercomputers. It is designed to handle complex artificial intelligence (AI) and machine learning workloads with high computational power, speed, and energy efficiency. This article covers the technical specifications, key features, and potential applications of the DGX GH200, and shows how it can accelerate AI research and development. From healthcare to autonomous vehicles, many industries stand to benefit from the DGX GH200’s capabilities, which underscore Nvidia’s strong position in AI hardware.

Exploring the Nvidia Grace Hopper GH200 Superchips

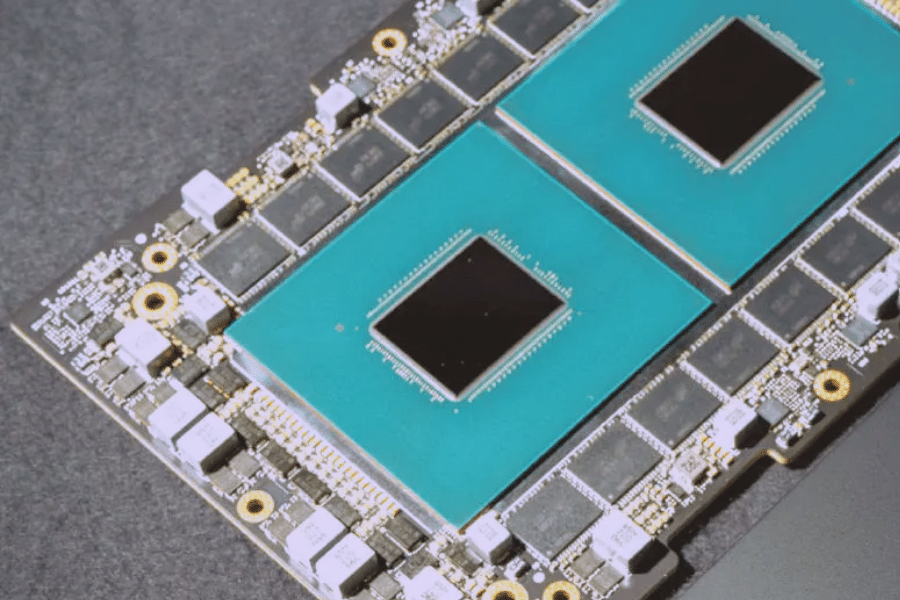

The GH200 Grace Hopper Superchip is a significant advance in computing technology, especially for artificial intelligence and machine learning. It brings together several technologies aimed at specific problems that AI researchers and developers face today.

First, the GH200 combines an Nvidia Grace CPU and a Hopper GPU in a single module. This pairing merges general-purpose high-performance computing with GPU acceleration, both of which are essential for running complex AI algorithms efficiently.

Second, the GH200 connects the CPU and GPU through NVLink-C2C, a chip-to-chip variant of Nvidia’s NVLink interconnect. Nvidia rates this link at up to 900 GB/s, roughly seven times the bandwidth of a PCIe Gen5 x16 connection. The result is lower latency and higher bandwidth between the two processors, and consequently faster processing of AI workloads.
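To give a sense of what higher interconnect bandwidth means in practice, the following is a minimal sketch that compares ideal transfer times over two links. The bandwidth constants are assumptions taken from publicly quoted peak figures (900 GB/s for NVLink-C2C, 128 GB/s for PCIe Gen5 x16 counting both directions), and the function names are hypothetical. Real transfers will be slower than these ideal numbers.

```python
# Back-of-the-envelope comparison of ideal transfer times over two links.
# The bandwidth values are assumed peak figures, not measurements.

NVLINK_C2C_GBPS = 900.0     # GB/s, quoted total bandwidth for NVLink-C2C
PCIE_GEN5_X16_GBPS = 128.0  # GB/s, PCIe Gen5 x16, both directions combined


def transfer_seconds(payload_gb: float, bandwidth_gbps: float) -> float:
    """Ideal time to move payload_gb gigabytes at bandwidth_gbps GB/s."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gbps


def speedup(payload_gb: float) -> float:
    """How many times faster the assumed NVLink-C2C link is than PCIe."""
    slow = transfer_seconds(payload_gb, PCIE_GEN5_X16_GBPS)
    fast = transfer_seconds(payload_gb, NVLINK_C2C_GBPS)
    return slow / fast


if __name__ == "__main__":
    payload = 90.0  # GB, an arbitrary example payload
    print(f"PCIe Gen5 x16: {transfer_seconds(payload, PCIE_GEN5_X16_GBPS):.3f} s")
    print(f"NVLink-C2C:    {transfer_seconds(payload, NVLINK_C2C_GBPS):.3f} s")
    print(f"Ratio:         {speedup(payload):.2f}x")
```

Because both times scale linearly with payload size, the ratio depends only on the two bandwidth figures.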

Additionally, the GH200 pairs High Bandwidth Memory on the Hopper GPU (HBM3, with an HBM3e variant also offered) with LPDDR5X memory on the Grace CPU, which increases both capacity and speed. AI models can therefore work through very large datasets more quickly, which matters for tasks such as natural language processing, image recognition, and real-time decision making.
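Memory capacity and bandwidth set simple limits on what a single device can hold and how fast it can read it. The sketch below estimates whether a model's weights fit in GPU memory and gives a lower bound on the time to stream those weights once. The capacity and bandwidth constants (96 GB and about 4,000 GB/s) are assumptions based on figures quoted for the HBM3 version of the GH200, and all function names are hypothetical.

```python
# Rough sizing of model weights against GPU memory.
# Capacity and bandwidth are assumed figures, not measurements.

HBM_CAPACITY_GB = 96.0       # assumed HBM3 capacity per GH200
HBM_BANDWIDTH_GBPS = 4000.0  # assumed HBM3 bandwidth in GB/s


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_in_memory(num_params: float, bytes_per_param: int,
                   capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit; activations and caches are ignored."""
    return weights_gb(num_params, bytes_per_param) <= capacity_gb


def min_seconds_per_pass(num_params: float, bytes_per_param: int,
                         bandwidth_gbps: float = HBM_BANDWIDTH_GBPS) -> float:
    """Lower bound on the time to read every weight once from memory."""
    return weights_gb(num_params, bytes_per_param) / bandwidth_gbps


if __name__ == "__main__":
    for params in (40e9, 70e9):
        size = weights_gb(params, 2)  # 2 bytes per parameter (FP16/BF16)
        print(f"{params / 1e9:.0f}B params: {size:.0f} GB, "
              f"fits={fits_in_memory(params, 2)}, "
              f"min pass={min_seconds_per_pass(params, 2) * 1e3:.1f} ms")
```

The estimate ignores activations, optimizer state, and key-value caches, so real memory requirements are higher than the weights alone.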

Another critical aspect of the GH200 is its thermal and energy efficiency. It uses advanced cooling and power management techniques to maintain performance while limiting power consumption. This lowers operating costs and also responds to the growing demand for environmentally responsible computing.

In short, Nvidia designed the GH200 Grace Hopper Superchip to address three key requirements of AI computation: speed, compactness, and scalability. Its effects are likely to be felt across industries such as healthcare, automotive, financial services, and entertainment.

The Role of the DGX GH200 AI Supercomputer in Advancing AI

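The DGX GH200 AI Supercomputer is one of Nvidia’s most recent systems for artificial intelligence (AI) and high-performance computing (HPC). It links up to 256 GH200 Grace Hopper Superchips through the NVLink Switch System so that they can be programmed as one very large GPU. Its main contributions are described below.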

Improved Compute Capability: With thousands of Tensor Cores optimized for deep learning, the DGX GH200 reaches a very high compute density. This matters for generative AI, where models must process complex data patterns and produce accurate outputs.

Advanced Parallel Processing: The DGX GH200 uses NVLink interconnect technology to run large, complex AI models in parallel at high speed. This parallelism is essential for generative AI workloads, which are typically trained on very large datasets.

High Bandwidth Memory (HBM3): The Hopper GPU in each superchip uses HBM3, which offers far more bandwidth than conventional memory. For generative AI, this means large datasets are processed faster, which shortens both training and inference times.

Optimized Data Flow: The GH200 architecture is designed to optimize the flow of data between processor cores, memory, and storage. This reduces bottlenecks and supports the real-time data access and processing that generative AI commonly requires.

Energy and Thermal Efficiency: Generative AI tasks are computationally expensive and energy-intensive. The advanced cooling and power management built into the GH200 allow demanding AI operations to run efficiently while reducing energy consumption and operating costs.

In brief, the DGX GH200 AI Supercomputer is a major step toward integrating AI with high-performance computing. Its architectural and technological advances provide a foundation for pushing the boundaries of generative AI and for accelerating the development and deployment of sophisticated AI models in many fields.

Nvidia H100 and GH200: Complementary Forces in AI Supercomputing

Comparing H100 and GH200 Grace Hopper Superchip Capabilities

Nvidia took a major step in AI supercomputing with the introduction of the H100 and, later, the GH200 Superchip. These two products reflect Nvidia’s hardware strategy for AI and address the growing needs of AI workloads in different ways.

Nvidia H100 – Setting the Stage for AI Acceleration

The Nvidia H100, with its Transformer Engine, is purpose-built to speed up AI workloads in natural language processing (NLP), deep learning inference, and training. Its key features are:

- Transformer Engine: It improves the efficiency of Transformer-based models, which are indispensable in natural language processing and AI-supported analytics, and considerably reduces computation time.

- AI-Oriented Tensor Cores: These accelerate the matrix operations at the heart of AI workloads, which makes data processing more efficient and delivers insights faster.

- Multi-Instance GPU (MIG) Capability: It partitions a single GPU into several isolated instances (up to seven on the H100) that run independent workloads at the same time, which improves resource allocation and utilization.
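The MIG partitioning described in the list above can be illustrated with a simplified feasibility check. This sketch assumes an 80 GB H100 exposes 7 compute slices and 8 memory slices and uses a subset of the commonly documented profile names. The function `fits_on_gpu` is hypothetical, and the check is only a necessary condition: real MIG also enforces placement rules that are not modeled here.

```python
# Simplified check of whether a set of MIG profiles can share one GPU.
# Assumption: an 80 GB H100 has 7 compute slices and 8 memory slices.
# Real MIG also applies placement constraints that this sketch ignores.

COMPUTE_SLICES = 7
MEMORY_SLICES = 8  # each memory slice is roughly 10 GB on an 80 GB part

# Profile name -> (compute slices, memory slices); a subset for illustration.
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}


def fits_on_gpu(requested: list[str]) -> bool:
    """True if the requested profiles stay within both slice budgets."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


if __name__ == "__main__":
    print(fits_on_gpu(["1g.10gb"] * 7))                     # seven small instances
    print(fits_on_gpu(["4g.40gb", "3g.40gb"]))              # two larger instances
    print(fits_on_gpu(["3g.40gb", "3g.40gb", "1g.10gb"]))   # exceeds memory slices
```

The last request fails because the two 3g.40gb instances already consume all eight memory slices, even though one compute slice remains.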

GH200 Grace Hopper – Advancing the Frontier

The GH200 takes the H100 a step further by pairing a Hopper GPU with a Grace CPU in one module. It targets generative AI workloads that need raw computational capacity, fast data processing, and power savings. Its notable features are:

- Enhanced Compute Units: A 72-core Grace CPU sits alongside the Hopper GPU, providing more compute for the parallel processing tasks involved in generative AI training and inference.

- Advanced Memory Solution (HBM3 and LPDDR5X): In addition to its own high-bandwidth memory, the GPU can address the Grace CPU’s LPDDR5X memory over NVLink-C2C. Each superchip therefore has a larger pool of fast memory than a standalone H100, which helps generative AI platforms process large datasets quickly.

- Optimized Data Flow Architecture: Data moves easily within the system, which reduces delays and improves the real-time performance of AI jobs.

- Superior Energy and Thermal Management: Advanced cooling and power management support sustainability and cost-effective operation, which is especially important given how energy-demanding generative AI workloads can be.

Impact and Advancement

The H100 led the way in AI model training and NLP tasks. The GH200, with its targeted enhancements, pushes further into generative AI applications. Nvidia’s investment in this line of development is both a response to the evolving AI landscape and a means of shaping it. The GH200 stands out for its greater parallel processing capability, more advanced memory system, and better data flow management, all of which open the way to new AI innovations and applications.

Both the H100 and the GH200 share the overarching goal of accelerating AI workloads. The GH200’s specialized features, however, address the particular requirements of generative AI. Even incremental gains here can have a large effect on the efficiency and feasibility of developing and deploying next-generation AI models.

Understanding NVLink’s Role in Nvidia’s AI Supercomputers

The Evolution of NVLink in Supporting High-Speed Data Transfer

NVLink, Nvidia’s proprietary high-speed interconnect, has raised data transfer capabilities in computer systems to a new level. It was originally designed to meet the growing demand for faster data flow between GPUs, and between GPUs and CPUs. It has since gone through several generations to keep pace with demanding computing tasks such as AI and machine learning. NVLink offers significantly higher bandwidth than traditional PCIe interfaces, which speeds up data transfer and reduces bottlenecks during data-intensive operations. This evolution shows that Nvidia anticipated future bandwidth demands, so that its computing platforms can scale without being limited by transfer rates.

How NVLink Enhances the Performance of Nvidia AI Supercomputers

NVLink improves the performance of Nvidia AI supercomputers by allowing system components to share data faster and more efficiently. This is especially valuable when AI computations are distributed over many GPUs, because NVLink’s high bandwidth shortens the time needed to send data from one GPU to another and so accelerates the overall job. Fast data sharing between devices is central to training complex AI models and processing large datasets efficiently. By minimizing transfer time, NVLink keeps the GPUs busy with computation rather than waiting for data, which speeds up AI research and applications.
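The effect of link bandwidth on multi-GPU training can be estimated with the standard ring all-reduce cost model, in which each GPU sends and receives about 2(N-1)/N times the payload size. The sketch below applies that model. The 450 GB/s per-direction figure is an assumption derived from the 900 GB/s total quoted for fourth-generation NVLink, the 64 GB/s figure is an assumed PCIe Gen5 x16 per-direction rate, and the function name is hypothetical. Latency and protocol overhead are ignored.

```python
# Ideal ring all-reduce time under a bandwidth-only cost model.
# Bandwidth figures are assumptions; latency and overhead are ignored.

NVLINK_PER_DIRECTION_GBPS = 450.0  # assumed: half of 900 GB/s total per GPU
PCIE_PER_DIRECTION_GBPS = 64.0     # assumed: PCIe Gen5 x16, one direction


def ring_allreduce_seconds(payload_gb: float, num_gpus: int,
                           link_gbps: float) -> float:
    """Time for each GPU to move 2 * (N - 1) / N * payload over its link."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if link_gbps <= 0:
        raise ValueError("link bandwidth must be positive")
    if num_gpus == 1:
        return 0.0  # nothing to exchange with a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gbps


if __name__ == "__main__":
    gradients_gb = 10.0  # arbitrary example: 10 GB of gradients per step
    for name, bw in (("NVLink", NVLINK_PER_DIRECTION_GBPS),
                     ("PCIe", PCIE_PER_DIRECTION_GBPS)):
        t = ring_allreduce_seconds(gradients_gb, 8, bw)
        print(f"{name}: {t * 1e3:.1f} ms per all-reduce across 8 GPUs")
```

Since this exchange happens on every training step, the difference in per-step communication time compounds over a full training run.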

Integrating Nvidia NVLink with GH200 for Advanced AI Modeling

The integration of NVLink with the GH200 Superchip is a major advance in Nvidia’s AI supercomputing architecture. By combining the GH200’s specialized AI compute with NVLink’s bandwidth, Nvidia has created a well-optimized platform for advanced AI modeling. Multiple GH200 units can communicate directly, which increases system parallelism and allows larger, more complex AI models to be trained faster. This suits generative AI applications that involve extensive data manipulation and real-time adjustment, because data moves quickly between units, processing times fall, and sophisticated AI solutions become easier to develop. The pairing of fast data transfer with state-of-the-art processing power reflects Nvidia’s commitment to advancing AI supercomputing.

Navigating the Future of AI with Nvidia’s DGX GH200 Supercomputer

The DGX GH200 AI Supercomputer: Enabling Next-Gen AI Applications

Nvidia’s DGX GH200 is at the forefront of AI innovation and marks a major milestone in the development of AI supercomputing. It is central to Nvidia’s vision of enabling next-generation AI applications.

These applications go beyond improved machine learning models to include, among others, complex simulations for autonomous vehicle technology and advanced healthcare research.

The importance of the DGX GH200 as a tool for AI development becomes clear from its specific technical features:

Parallel Processing Capabilities: The DGX GH200 contains many GPUs interconnected with NVLink, which gives it very strong parallel processing capabilities. This architecture lets the system carry out many operations at once and so reduces the time spent on complex computations.

High-Speed Data Transfer: NVLink allows fast data exchange between the GPUs in a DGX GH200 system with minimal communication latency. This is essential when training large-scale AI models that must share data quickly across processing units.

Advanced AI Processing Power: Each GPU in the DGX GH200 has dedicated Tensor Cores optimized for jobs that demand high computational throughput and efficiency, such as deep learning and neural network training.

Massive Data Handling Capacity: Its memory capacity and storage options allow the DGX GH200 to handle very large datasets. This is important because most real-world AI systems use large amounts of data for training and optimization.

Energy Efficiency: Despite its high performance, the DGX GH200 is designed to be energy efficient. This lowers operating costs by reducing power consumption and supports green computing practices in AI research and development.

The DGX GH200 is therefore more than a combination of NVLink and GH200 hardware. It is an integrated answer to several pressing problems in the field, such as limited processing speed, long data transfer latencies, and high power demands. This makes it an important resource for extending AI capabilities and applications.
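The data handling capacity described above can be made concrete with a short calculation of the system's aggregate memory. This is a sketch under stated assumptions: 256 superchips per system, each with 480 GB of LPDDR5X and 96 GB of HBM3, which are the figures Nvidia has quoted for the HBM3 configuration. The function names are hypothetical.

```python
# Aggregate memory of a fully populated system, from per-superchip figures.
# All three constants are assumptions based on publicly quoted specifications.

SUPERCHIPS = 256   # assumed number of GH200 superchips in one DGX GH200
LPDDR5X_GB = 480   # assumed CPU-attached memory per superchip
HBM3_GB = 96       # assumed GPU-attached memory per superchip


def aggregate_memory_gb(chips: int, cpu_gb: int, gpu_gb: int) -> int:
    """Total memory in GB across all superchips."""
    return chips * (cpu_gb + gpu_gb)


def gb_to_tb(gigabytes: float) -> float:
    """Convert GB to TB using 1 TB = 1024 GB."""
    return gigabytes / 1024


if __name__ == "__main__":
    total_gb = aggregate_memory_gb(SUPERCHIPS, LPDDR5X_GB, HBM3_GB)
    print(f"Per superchip: {LPDDR5X_GB + HBM3_GB} GB")
    print(f"System total:  {total_gb} GB = {gb_to_tb(total_gb):.0f} TB")
```

Under these assumptions the total works out to 144 TB, which matches the shared-memory figure Nvidia advertises for the system.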

Practical Applications and Impacts of the Nvidia DGX GH200 in Industry

The deployment of Nvidia’s DGX GH200 AI supercomputer is an important milestone for many fields, driving innovation and improving operational efficiency. The following are some key areas and real-world applications where the DGX GH200 can have a significant impact.

Healthcare and Life Sciences: In genomics, the DGX GH200 speeds up the analysis of genetic sequences, which allows researchers to find disease-related genetic markers more quickly than before. This is promising for personalized medicine and the development of targeted therapies.

Autonomous Vehicle Development: Automotive manufacturers can use the processing power of the DGX GH200 to simulate and train autonomous driving systems in virtual environments, which shortens development timelines while improving system safety and reliability. The system can also process the massive datasets collected from real-world driving, which helps refine the algorithms behind vehicle autonomy.

Financial Services: In high-frequency trading platforms, the DGX GH200’s AI processing cores analyze vast volumes of live financial data. This supports better predictive models and algorithmic trading strategies that aim to raise returns while limiting risk.

Energy Sector: In energy exploration and production, the DGX GH200’s capacity for large-scale data processing supports seismic data analysis and improves the accuracy of subsurface imaging. This makes oil and gas exploration more efficient, which reduces both operating costs and the environmental impact of drilling.

Climate Research: The processing capability of the DGX GH200 helps climate scientists build complex climate models and process large-scale environmental datasets. This speeds up research into climate patterns, weather forecasting, and the impacts of climate change, and provides insights needed for policy-making and sustainability initiatives.

Each of these examples shows how the DGX GH200 can help transform an industry through AI, both by enabling breakthroughs and by setting new standards for productivity, precision, and innovation.

Frequently Asked Questions (FAQs)

Q: What is the Nvidia DGX GH200, and how does it power the future of AI supercomputers?

A: The Nvidia DGX GH200 is a combined CPU and GPU system built around the Nvidia GH200 Grace Hopper Superchip. It is an important step toward AI supercomputers with far more computational power for modern AI and HPC (high-performance computing) workloads. The GH200 Superchips combine the Nvidia Grace CPU and the Hopper GPU to deliver the performance needed for workloads ranging from generative AI to complex simulations.

Q: What makes the Nvidia Grace Hopper superchip so revolutionary?

A: The Nvidia Grace Hopper Superchip is notable because it combines the Nvidia Grace CPU with the Hopper GPU in a single module. This delivers a high level of efficiency and performance for AI and HPC workloads. It is one of several key components that allow Nvidia to build more powerful and energy-efficient AI supercomputers. The superchip’s design increases interconnect bandwidth and provides memory coherence between CPU and GPU, which in turn supports the development of new AI products and technologies.

Q: Will the specifications of the Nvidia DGX GH200 change over time?

A: Yes. As with most technology products, the specifications of the Nvidia DGX GH200 and its components, including the Grace Hopper Superchip, are subject to change at any time. As the platform is continually improved, users may also receive updates from Nvidia, often at no cost. The latest information about new product lines can be found in Nvidia press releases.

Q: Is the Nvidia DGX GH200 available for immediate purchase?

A: Although Nvidia has announced its GH200 Grace Hopper Superchip, this does not necessarily mean that DGX GH200 systems are available to all potential customers at any given time. The most recent information on availability can be found in the Nvidia newsroom or by contacting Nvidia directly. Nvidia often works with early customers, giving them the chance to test and integrate new technologies ahead of general availability.

Q: How does the Nvidia DGX GH200 add to the Nvidia AI ecosystem?

A: The Nvidia DGX GH200 is an integral part of the Nvidia AI ecosystem, which also includes Nvidia AI Enterprise software and Nvidia Omniverse. It provides a solid foundation for AI and high-performance computing (HPC) workloads, so that businesses and researchers can make full use of Nvidia’s AI software and tools. The system is designed to make it easier to create, deploy, and scale AI applications, which promotes faster adoption of advances in AI.

Q: Can generative AI tasks be managed by Nvidia DGX GH200?

A: Yes. The Nvidia DGX GH200 is particularly well suited to generative AI tasks. The combination of the Grace CPU and Hopper GPU, together with the system’s integration into Nvidia’s wider AI software stack, provides the computational power and efficiency that generative models require. Examples include generating text, images, or video that push the boundaries of what AI can currently achieve.

Q: How does the Nvidia DGX GH200 help build new AI applications?

A: The Nvidia DGX GH200 supports the building of new AI applications by providing a robust platform based on Nvidia’s GH200 Grace Hopper Superchips. Developers can quickly design, train, and deploy their own models using Nvidia AI Enterprise software and Nvidia Base Command, which manages model deployments. The platform offers a space for experimenting with advanced simulations and deep learning applications and for testing emerging AI paradigms.

Q: Has there been any recent press release about DGX GH200 from Nvidia?

A: Nvidia updates its products from time to time, including the Grace Hopper Superchips and the DGX GH200. Visit the Nvidia newsroom for the most recent information on technical specifications, availability, and how the technology is being used across industries. These press releases help put into context how Nvidia innovations such as the DGX GH200 are shaping the AI and HPC landscape. Note that statements in press releases about future updates and products are subject to change, and actual results could differ materially from expectations.
