Unlock the Power of AI with Nvidia H100: The Ultimate Deep Learning GPU

In the fast-changing world of artificial intelligence (AI) and deep learning, demand for powerful computational resources has surged. The Nvidia H100 GPU is Nvidia's answer to that demand and is positioned to power the next wave of AI breakthroughs. This blog post gives an overview of its architecture, its features, and the role it plays in advancing deep learning. With the H100, researchers have the tools to make significant progress in areas such as natural language processing and autonomous vehicle development. We will not focus only on technical specs; we will also look at real-life use cases and the impact this deep learning GPU can have.

What Makes Nvidia H100 Stand Out in AI and Deep Learning?

Introducing the Nvidia H100 Tensor Core GPU

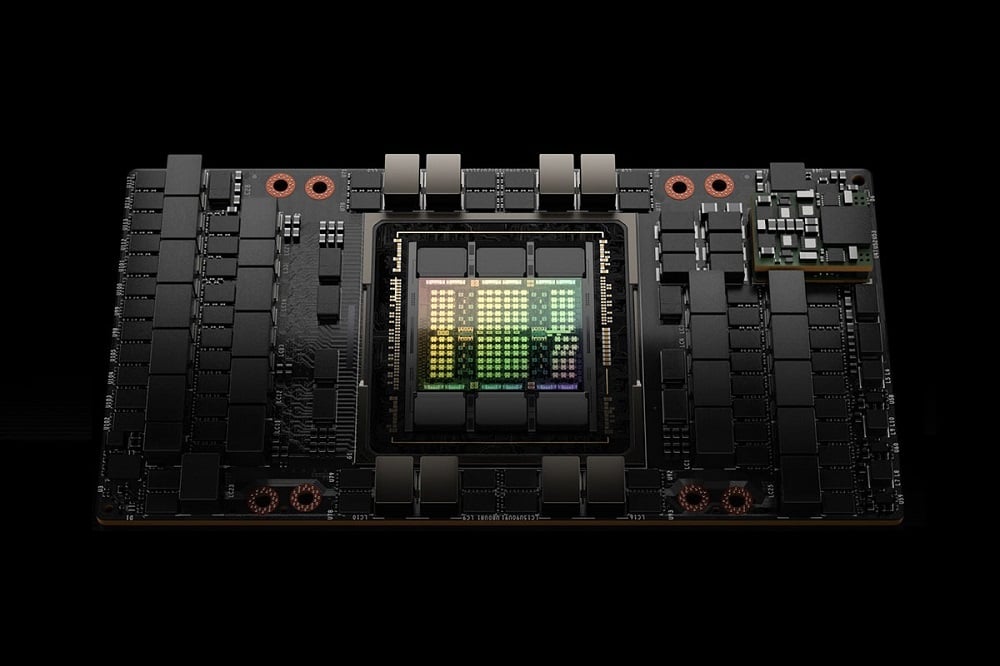

The Nvidia H100 Tensor Core GPU is built on the Hopper architecture, the most recent advancement in Nvidia's GPU design. This architecture delivers very high computational throughput for AI and deep learning workloads through fourth-generation Tensor Cores and Multi-Instance GPU (MIG) capabilities. These features provide the flexibility and power needed for a range of AI tasks, from training large-scale models to running inference. NVLink and PCIe Gen 5 interconnects add faster data transfer, which matters when working with the massive datasets typical of deep learning. In short, the H100 raises the bar for performance, efficiency, and scalability in AI computing.

The Role of 80GB Memory in Accelerating AI Models

One of the key features that lets the Nvidia H100 Tensor Core GPU accelerate AI models is its 80 GB of high-bandwidth memory (HBM3 on the SXM variant, HBM2e on the PCIe variant). This capacity is essential for the large, complex datasets and models common in deep learning and other AI applications. More of a model and its working data can stay on the GPU, which reduces training time and speeds up iteration. The high memory bandwidth also keeps the compute cores fed with data, minimizing bottlenecks and maximizing throughput. This combination of capacity and fast memory access can significantly speed up the development of advanced AI models, which makes the card a valuable tool for anyone pushing the limits of AI research or deployment.
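As a rough illustration of why capacity matters, the sketch below estimates how much memory a model's weights alone would occupy in different numeric formats and checks that against 80 GB. It is a back-of-the-envelope estimate under stated assumptions: the function names are hypothetical, 1 GB is taken as 10^9 bytes, and activations, optimizer state, and framework overhead are ignored, so real training needs considerably more memory than this suggests.

```python
H100_MEMORY_GB = 80  # capacity stated in this article

# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weights_size_gb(num_params: int, dtype: str) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params: int, dtype: str, memory_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in the given memory (ignores all overhead)."""
    return weights_size_gb(num_params, dtype) <= memory_gb
```

For example, `weights_size_gb(7_000_000_000, "fp16")` gives 14.0, while a 70-billion-parameter model needs about 140 GB for its weights in FP16 and so would not fit on a single card without a smaller format or splitting across GPUs.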

Comparing Nvidia H100 with Other Graphics Processing Units

To understand where the Nvidia H100 stands among graphics processing units (GPUs) designed for deep learning and AI, it helps to compare several key parameters: computational power, memory capacity, energy efficiency, and support for AI-specific features.

- Computational Power: The H100 is based on the Hopper architecture, which brings a large jump in compute capability. Compute capability is usually measured in teraflops (TFLOPS), that is, trillions of floating-point operations per second. The H100 significantly outperforms previous-generation cards, which makes it suitable for the most demanding AI computations.

- Memory Capacity and Bandwidth: The H100 comes with 80 GB of high-bandwidth memory, so large datasets and models can be held directly on the GPU for quicker access and processing. Its high memory bandwidth helps train complex AI models without significant delays.

- Energy Efficiency: Efficiency matters most in data centers, where power consumption directly affects operating costs. The H100 incorporates advanced power management to deliver high performance per watt, which helps keep the cost of large-scale AI deployments down.

- AI-Specific Features and Support: Beyond raw specifications, the H100 is set apart by features such as its Tensor Cores and by frameworks and libraries optimized specifically for machine learning.

- Connectivity and Integration: The H100 supports NVLink and PCIe Gen 5, providing fast links for sharing and distributing data across systems. This is important for scaling up AI projects and for reducing data transfer bottlenecks.

Comparing GPUs on these parameters shows why the Nvidia H100 is such a strong option: it combines high compute performance, large memory capacity, and AI-specific functions. These design features are what make it well suited to large-scale AI research and applications.
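One way to make such a comparison concrete is to compute performance per watt from each card's published throughput and power figures. The sketch below shows the arithmetic only; the `GpuSpec` class, the card names, and all numbers are hypothetical placeholders, not real specifications for any GPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Hypothetical container for two headline figures of a GPU."""
    name: str
    tflops: float  # peak throughput in TFLOPS for the precision being compared
    watts: float   # board power in watts

    @property
    def tflops_per_watt(self) -> float:
        return self.tflops / self.watts


def rank_by_efficiency(specs):
    """Return the specs sorted from most to least efficient."""
    return sorted(specs, key=lambda s: s.tflops_per_watt, reverse=True)


# Placeholder values for illustration only, not real specifications.
cards = [GpuSpec("gpu_a", 100.0, 400.0), GpuSpec("gpu_b", 300.0, 600.0)]
ranked = rank_by_efficiency(cards)
```

When filling in real figures, both cards should be compared at the same numeric precision, since peak TFLOPS varies widely between formats such as FP32 and FP16.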

The Future of High-Performance Computing with Nvidia DGX H100

Exploring the Power and Performance of Nvidia H100 in Workloads

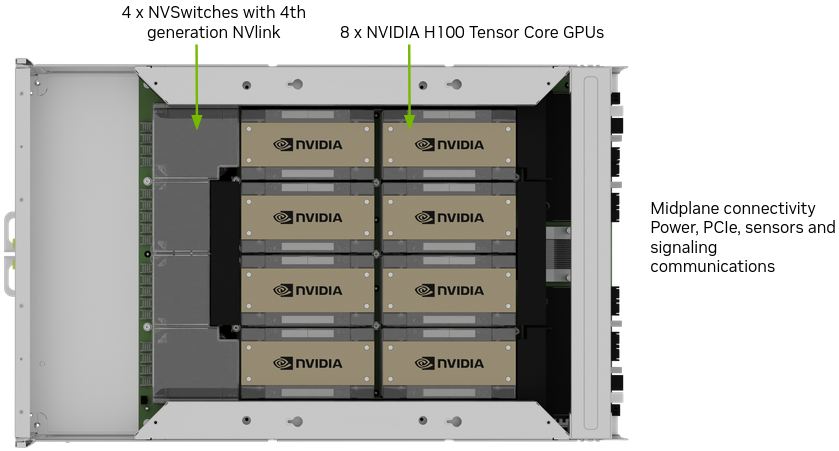

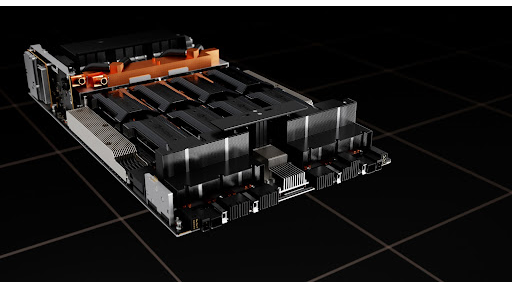

The Nvidia DGX H100 is changing data centers by delivering computing power and efficiency designed for high-performance computing (HPC) and artificial intelligence (AI) workloads. Built around H100 GPUs, the DGX H100 greatly reduces AI model training and inference times, allowing research and development to move faster. Its energy efficiency and advanced cooling address one of the most critical problems in data centers: keeping power-related operating costs down while maximizing throughput. Robust connectivity enables the dense, scalable networking required for data-intensive tasks, such as processing the huge volumes of sensor data produced every second by IoT devices or autonomous vehicles. The DGX H100 combines high performance with efficiency, and many units can be connected into large clusters with low-latency communication between them.

What Does This Mean For Enterprises? Architecture Matters – A Lot!

The architecture of the Nvidia H100 GPU has deep implications for enterprise solutions, because it marks a major step forward in what businesses can do with demanding computational tasks. Several aspects of the architecture determine how effective it is in an enterprise setting:

- Tensor Cores: The H100 integrates the latest generation of Tensor Cores to accelerate deep learning algorithms. This means faster training times for AI models, so organizations can iterate more quickly and improve their AI-based solutions.

- HBM3 Memory: High Bandwidth Memory generation three (HBM3) increases memory bandwidth, so more data can be handled at once. This is essential for AI and analytics applications that process large datasets in real time, because the data can stay in fast GPU memory during computation instead of being fetched from slower storage such as hard disk drives (HDDs).

- Multi-Instance GPU (MIG): MIG allows administrators to split one GPU into several smaller, independent instances and allocate them to different tasks or tenants as needed. This maximizes resource utilization and reduces operational costs.

- AI Security: The H100 includes protection features designed for securing AI workloads, so that data remains confidential while it is being processed. This helps enterprises that handle sensitive data stay protected against emerging threats.

- Scalability: The H100 is designed to scale using NVLink and NVSwitch technologies. Many units can be connected into large clusters with low latency between them while maintaining high performance. This is critical for businesses planning to grow their infrastructure.

- Energy Efficiency: Despite its power, the H100 was built with energy efficiency in mind. Advanced cooling support and power management capabilities help cut electricity usage when running AI workloads, which lowers operating expenses and reduces the environmental impact of the extra cooling that hot server components would otherwise require.
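To illustrate the MIG partitioning described in the list above, here is a deliberately simplified sketch that checks whether a requested set of instance profiles could share one 80 GB card. It assumes 7 compute slices and 80 GB of memory and only sums the requests; the profile table and function names are illustrative, and real MIG has additional placement rules, so the output of `nvidia-smi` on the actual system remains the authority.

```python
# Simplified model of MIG capacity on an 80 GB card (assumption: 7 compute
# slices, 80 GB memory). Real MIG also enforces placement rules not modeled here.
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_GB = 80

# profile name -> (compute slices, memory in GB); illustrative subset.
PROFILES = {
    "1g.10gb": (1, 10),
    "2g.20gb": (2, 20),
    "3g.40gb": (3, 40),
    "4g.40gb": (4, 40),
    "7g.80gb": (7, 80),
}


def request_fits(requested):
    """Return True if the summed compute and memory requests fit on one GPU."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB
```

Under this model, a `3g.40gb` plus a `4g.40gb` instance uses the whole card, seven `1g.10gb` instances also fit, and two `4g.40gb` instances do not because they would need eight compute slices.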

In conclusion, the architecture of the Nvidia H100 GPU, designed specifically for high-performance computing (HPC) and artificial intelligence (AI) workloads, takes processing power to a different level for businesses. This means faster data analysis, more sophisticated AI model training, and the ability to run complex simulations better and faster than before. With Tensor Cores optimized for AI, CUDA Cores suited to HPC, support for the latest memory technologies, and fast cache, the H100 can process huge volumes of data at very high speed and with high efficiency. Enterprises that adopt this technology can expect heavy computational tasks to take less time and overall productivity to rise, leading to better operational efficiency across the company. Its combination of performance, power efficiency, and scalability makes the H100 a valuable tool for any industry seeking breakthroughs in AI and HPC.

Why Should Businesses Consider Nvidia H100 for Their Data Processing Needs?

The Impact of AI and Deep Learning on Business Technology

Artificial intelligence (AI) and deep learning have caused quite a stir in the world of business technology. They have affected many industries by analyzing data with a depth and precision that was not previously possible, giving businesses insights that were once unattainable. Their main impacts are as follows:

- Better Decision Making: Because AI algorithms can detect trends, patterns, and anomalies within massive datasets, they greatly assist decision-making. Companies can base their choices on insights derived from hard data rather than on assumptions or hunches. For example, financial institutions use AI to forecast market trends and inform their investment decisions.

- Higher Efficiency and Productivity: Automation powered by deep learning has improved operational efficiency by taking over repetitive tasks. Time-consuming manual jobs have been automated, leading to significant time savings. An Accenture report projected that AI could raise labor productivity by up to 40 percent by 2035.

- Customer Personalization: Businesses can use AI systems to analyze customer data and identify preferences and behavior trends. This enables personalized shopping experiences and targeted marketing campaigns, among other improvements to customer service. For instance, Amazon uses AI for product recommendations, which supports both customer satisfaction and loyalty.

- Product Innovation: Deep learning helps organizations develop new products and services that meet changing needs, fostering creativity within the organization. In healthcare, for instance, AI-driven tools make more accurate diagnosis possible at an earlier stage, leading to better patient outcomes.

- Operational Risk Management: Real-time transaction monitoring helps financial institutions detect fraud and reduce the associated operational risk. Predictive analytics combined with machine learning improves the identification of potential risks.

These impacts highlight just how strategically important it is to integrate AI and Deep Learning technologies into a business. By using the Nvidia H100, companies can speed up their AI projects while also giving themselves an advantage over competitors in the marketplace.

Maximizing Data Analysis and Processing with Nvidia H100

The Nvidia H100 Tensor Core GPU has been built specifically for large-scale AI and data analytics workloads, offering high efficiency together with high performance. To get the most out of the H100 for data analysis and processing, consider the following parameters:

- Scalability: The GPU is designed to scale across workloads, which makes larger datasets easier to manage. As data volumes grow, performance can be maintained without a complete system overhaul.

- Processing Power: Compared with previous models, the H100 has more cores and higher bandwidth, giving it the capacity needed for complex calculations and faster data analysis and processing. This is critical for systems that rely heavily on deep learning algorithms and use huge volumes of data.

- Energy Efficiency: Energy efficiency was a priority in the design, and the H100 delivers higher performance per watt. This reduces operational costs and the environmental impact of data center equipment.

- Integrated AI Software: Nvidia's AI and data analytics software stack is optimized for the H100, which eases the deployment of AI models and speeds up data processing. This integration lets businesses take advantage of cutting-edge AI capabilities with little customization.

- Support and Compatibility: A wide array of software tools and frameworks support the H100, which ensures compatibility with leading AI and analytics platforms. This broad compatibility expands the range of solutions the H100 can serve across diverse industries and applications.

By keeping these considerations in mind, an organization can maximize its use of the Nvidia H100 and greatly improve its ability to analyze large datasets. This leads to better decision-making, more innovation in product and service development, and faster solutions to complex problems.

Nvidia H100: A Powerful Answer For Difficult Calculations

The Nvidia H100 GPU addresses many problems associated with high-performance computing across sectors. With its improved energy efficiency, processing power, integrated AI software, and broad compatibility, it is both an improvement over previous models and a stepping stone toward future systems. It enables fast processing of complicated datasets and fuels research breakthroughs, so it is likely to foster innovation in many industries. Those who want to push boundaries with large datasets, whether by applying existing machine learning algorithms or by developing new ones through experimentation, need hardware that is scalable enough not to limit their creativity and efficient at the same time. This is where the H100 comes into play: few alternatives currently offer the same combination. As more fields come to depend on large-scale computation, fast hardware becomes crucial to progress. Whether you are a business or a research institution seeking breakthroughs through AI, the H100 offers strong performance per watt, which shortens training time and creates more opportunities for discovery. For workloads of this kind, it is an investment worth considering.

How to Integrate Nvidia H100 into Your Existing Systems

How to Install Nvidia H100 GPU in 10 Steps

Installing the Nvidia H100 GPU into an existing system is not a simple plug-and-play procedure. It involves a number of steps that must be followed carefully to ensure compatibility, maximum performance, and system stability. This guide is a brief overview of how to integrate this powerful card into your infrastructure.

Step 1: Check System Requirements

Before you proceed with the installation, make sure your system meets the prerequisites for the H100 GPU. These include a compatible motherboard with at least one available PCIe x16 slot (PCIe Gen 5 for full bandwidth; the card also works in a PCIe 4.0 slot at lower transfer speeds), an adequate power supply (700W recommended minimum), and enough space inside the case to accommodate the card's dimensions.

Step 2: Prepare Your System

Turn off your machine and unplug it from the wall socket. Ground yourself by touching a bare metal surface before proceeding, so that static discharge does not damage components such as the GPU or memory modules. Remove the side panel of the case where the card will be mounted.

Step 3: Take Out Existing GPU (if any)

If another graphics card is already installed, carefully detach its power connectors, release the locking tab, and gently pull the card out of the PCI Express slot.

Step 4: Install Nvidia H100 GPU

Align the H100 above the chosen PCIe slot so that the notch on the card lines up with the key in the motherboard connector. Lower it gently but firmly until the locking mechanism clicks into place and secures the card. Do not apply excessive force during insertion, because this can damage both the card and the motherboard.

Step 5: Connect Power Supply

The H100 needs auxiliary power from the PSU (Power Supply Unit). Use the appropriate cables supplied with the card or the power supply and connect them to the power connector at the end of the card; check the card's documentation for the exact connector type. Make sure the plugs are firmly inserted and that no cables obstruct airflow around other components.

Step 6: Close the Case and Reconnect Your System

After installing the GPU, close the chassis and replace the screws to secure it. Reconnect all peripherals (keyboard, mouse, monitor, and so on) and any other external devices that were disconnected earlier, then plug the power cord back into the wall outlet.

Step 7: Install Drivers & Software

Turn on your system. For the H100 to function properly, download the latest Nvidia drivers and related software from Nvidia's official website, then follow the installer's instructions to complete the setup.

Step 8: Optimize BIOS Settings (if necessary)

Some users report slow performance after installing new hardware such as a GPU. If this happens, open the system's BIOS configuration utility and enable any settings that are recommended for use with this type of accelerator.

Step 9: Performance Tuning (optional)

Depending on the intended usage, you may need to fine-tune operating system settings to get maximum efficiency from the newly installed H100. Refer to the documentation supplied with the product or to Nvidia's website for details on optimizing performance for different workloads.

Step 10: Verify Installation Success

Run the diagnostic tools that come with the driver, such as `nvidia-smi`, and confirm that the card is detected and operating as expected.
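As one possible way to script the verification in Step 10, the sketch below calls `nvidia-smi` (installed with the Nvidia driver) and parses the GPU name, driver version, and total memory. The helper names are hypothetical, and the sample row in the usage note is illustrative rather than captured from a real system.

```python
import shutil
import subprocess

# Query three standard fields in headerless CSV form.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_rows(csv_text):
    """Turn 'name, driver, memory' lines into a list of dictionaries."""
    rows = []
    for line in csv_text.strip().splitlines():
        name, driver, memory = [field.strip() for field in line.split(",")]
        rows.append({"name": name, "driver_version": driver, "memory_total": memory})
    return rows


def list_gpus():
    """Return detected GPUs, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        return []
    return parse_gpu_rows(result.stdout)
```

Calling `list_gpus()` after installation should return one entry per detected card; an empty list suggests the driver is not installed or the card is not being detected. The parser can be tried on its own with an illustrative row such as `parse_gpu_rows("NVIDIA H100 PCIe, 535.104.05, 81559 MiB")`.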

By following these steps carefully, you will be able to use the Nvidia H100 GPU in your existing system and tap into its potential for demanding computing tasks. Always refer to the official Nvidia installation guide and to the relevant equipment manuals for more detailed instructions and warnings.

Increasing Performance of Nvidia H100 Through Proper System Configuration

To get the most out of the Nvidia H100 GPU, configure your system to take advantage of its advanced computational capabilities. Start by updating the motherboard BIOS so that all hardware features of the H100 are supported. Use high-speed, low-latency system memory so that the rest of the machine does not become a bottleneck for the GPU. For storage, NVMe SSDs reduce data retrieval times and complement the GPU's high throughput. Finally, keep the software environment up to date, including the drivers, the CUDA toolkit, and any libraries your applications need. Outdated or missing libraries are a common reason applications fail to use the GPU's features fully and run slower than they should.
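A quick way to confirm that the software environment can actually see the GPU is to query it from a framework. The sketch below assumes PyTorch as the framework (the article does not name one) and degrades gracefully when PyTorch or a CUDA device is not present; the function name `describe_cuda_env` is hypothetical.

```python
def describe_cuda_env():
    """Summarize what PyTorch can see; safe to call without PyTorch or a GPU."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return {"torch_installed": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_build": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)
        info["device_name"] = props.name
        info["total_memory_gb"] = round(props.total_memory / 1024**3, 1)
    return info
```

If `cuda_available` comes back `False` on a machine with the card installed, the usual suspects are a missing or outdated driver or a CPU-only build of the framework.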

Making sure everything works after upgrading your system with a new Nvidia H100 GPU requires some planning to avoid issues during installation. Take the following into account before proceeding. First, check that the physical dimensions of the card fit your case, and leave enough clearance around it for good airflow. Second, the H100 draws more power than typical cards, so make sure your power supply unit (PSU) has enough headroom and the appropriate connectors. Third, the motherboard must have a compatible PCIe x16 slot that provides the bandwidth the card requires. Finally, review the cooling system: these cards generate a lot of heat, so extra fans or better airflow, or alternatives such as liquid cooling, may be needed.
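Once the card is installed, the negotiated PCIe link can be checked against the x16 requirement mentioned above. The sketch below parses the output of `nvidia-smi --query-gpu=pcie.link.gen.current,pcie.link.width.current --format=csv,noheader`; the helper names and the expected values (Gen 5, x16) are assumptions for illustration.

```python
def parse_pcie_link(line):
    """Parse a 'gen, width' row such as '5, 16' into two integers."""
    gen, width = [int(field.strip()) for field in line.split(",")]
    return gen, width


def link_is_degraded(gen, width, expected_gen=5, expected_width=16):
    """True if the link negotiated a lower generation or fewer lanes than expected."""
    return gen < expected_gen or width < expected_width
```

Note that GPUs can lower the link generation while idle to save power, so a reading taken under load is more meaningful. A persistently narrow link, for example x8 instead of x16, often points to the slot choice or to a card that is not fully seated.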

Maximizing Performance and Compatibility While Installing the Nvidia H100 GPU

There are several things to consider before upgrading to the Nvidia H100 GPU so that it works well with your system. The first is to make sure the case has enough space for the card. The second is the power supply: this card requires more power than most, so the power supply unit must be able to deliver it. The motherboard has its own requirements too: it needs a compatible PCIe x16 slot that offers the bandwidth the card needs. Lastly, review the cooling system, because an H100 that is not adequately cooled can overheat and damage other components, which can be expensive to replace.

Navigating Through Nvidia H100’s Product Support and Warranty

What to Expect from Nvidia Customer Support for the H100

Nvidia's customer support for the H100 GPU provides a full range of services aimed at keeping your device running without interruption. This includes technical support that can be reached through multiple channels, such as phone, email, or an online helpdesk. Support starts with identifying problems and finding solutions, and it extends to optimizing system settings and sharing knowledge on how to make the most of the product's features. Nvidia also supplies detailed guides and knowledge-base articles, and its community forums let users ask questions and get answers from others who have faced similar issues. Warranty service aims to provide prompt repair when necessary; alternatively, customers may receive a replacement instead of waiting for the original unit to be fixed, which saves time. Overall, the support ecosystem is professional and convenient, aiming for both operational excellence and high satisfaction among H100 owners.

Understanding the Warranty Policy for the Nvidia H100

The warranty policy for the Nvidia H100 sets out the conditions under which professional users are covered if something goes wrong with their hardware. Coverage usually includes manufacturing faults and defects in materials that appear under normal use within the warranty period stated at purchase. Terms can depend on where the card was bought, since vendors may specify slightly different durations, so it is important to know which terms apply to you. Pay attention to the claim procedure as well, such as providing proof of ownership and following the recommended steps for packing and shipping a faulty unit. Advanced replacement plays a major role in minimizing downtime for critical operations: a replacement is sent before the original unit has been returned, which reflects a concern for business continuity.

Claims Procedure: How Does the Warranty Work with the Nvidia H100?

The Nvidia H100 warranty claim process is designed to resolve problems quickly without interrupting normal activities. Before starting, make sure you have the necessary documents: a receipt showing the purchase date and the card's serial number for identification. Then open the official support portal on the Nvidia website and go to the warranty claims section. Fill in the details of the problem you encountered, along with the device's serial number and proof of purchase, such as an invoice number. If the issue qualifies under warranty, you will receive instructions on how to pack and ship the faulty card safely so that it can be assessed or replaced; follow the shipping guidelines closely to avoid damage in transit. A credit card may also be required for the advanced replacement service, in which another unit is sent before the original one is evaluated so that operations can continue during the evaluation period. Keep all records until the claim has been completed.

Exploring Customer Reviews and Similar Items to Nvidia H100

The Real-World Performance of Nvidia H100: What Customers Say

From what I have seen in my work, the Nvidia H100 performs extraordinarily well across many kinds of applications. Its architecture is designed to speed up the most complex AI and high-performance computing workloads by a large factor. Calculations take less time, which lets people process and analyze data more efficiently. Many customers have reported large improvements in training speed for AI models; some say training is three times faster than with the previous generation. The fourth-generation Tensor Cores in the Hopper architecture also provide a great deal of computational power, so the card is particularly good for scientific research, 3D rendering, financial modeling, and other work where many calculations must be done quickly. The feedback underscores its ability not just to meet but to exceed current requirements for big data operations.

Comparison Against Other Similar Products On The Market

Compared with similar products on the market, such as AMD’s Instinct series or Intel Xeon processors with built-in AI acceleration, the Nvidia H100 stands out because its Hopper architecture is newer and delivers significantly higher performance, especially for AI and high-performance computing tasks. The raw computational power of its fourth-generation Tensor Cores is hard to match, and the Transformer Engine, designed specifically to accelerate large language models (LLMs) and other complex AI algorithms, makes a real difference in heavy compute workloads. Additionally, Nvidia has a strong ecosystem of software libraries and development tools that makes workloads easier to integrate and optimize. Competitors’ offerings are robust in their own right, but on these points they still fall short of the H100.

Why Do Customers Prefer Using Nvidia H100 Over Other GPUs For AI Tasks

There are several reasons why customers choose the Nvidia H100 over other GPUs for AI tasks. Firstly, the H100 delivers better performance in AI and machine learning workloads thanks to its Hopper architecture and fourth-generation Tensor Cores, which are designed specifically to accelerate deep learning.

Secondly, it features a Transformer Engine optimized for large language models such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers), which must perform complex mathematical operations quickly. This shortens AI projects that apply many algorithms to large datasets.

Another reason is Nvidia’s comprehensive ecosystem, including CUDA, cuDNN, and TensorRT, which gives developers a wide range of tools and libraries for building efficient AI applications on Nvidia hardware.

Furthermore, Nvidia products scale well: a team can start with a single card and later scale up to large interconnected clusters using NVLink and NVSwitch, which makes the H100 suitable even for enterprise-wide AI initiatives.

Lastly, power efficiency matters, and here too the H100 does well: it delivers more computation per watt, which lowers operating costs and supports environmental sustainability goals.

In short, buyers prefer the Nvidia H100 over other AI GPUs because of its better performance, its specialization in AI acceleration, its stronger ecosystem support, its scalability, and its power efficiency.
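
To make the power-efficiency point concrete, here is a minimal Python sketch that compares two accelerators by throughput per watt and by the energy cost of a fixed amount of work. Everything in it is an assumption for illustration: the names `Accelerator` and `energy_cost`, the throughput and power figures, and the electricity price are hypothetical placeholders chosen for easy arithmetic, not measured H100 specifications. Substitute figures from your own benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical accelerator description (placeholder figures only)."""
    name: str
    throughput_tflops: float  # sustained throughput in TFLOP/s (assumed)
    power_watts: float        # average board power in watts (assumed)

    def tflops_per_watt(self) -> float:
        # Efficiency: how much throughput each watt buys.
        return self.throughput_tflops / self.power_watts


def energy_cost(acc: Accelerator, total_work_tflop: float, price_per_kwh: float) -> float:
    """Electricity cost of finishing a fixed amount of work on one device."""
    seconds = total_work_tflop / acc.throughput_tflops
    # watts * seconds = joules; 3.6 million joules = 1 kWh.
    kwh = acc.power_watts * seconds / 3_600_000
    return kwh * price_per_kwh


# Placeholder numbers, NOT vendor specifications.
older = Accelerator("older-gen (hypothetical)", throughput_tflops=100.0, power_watts=400.0)
newer = Accelerator("newer-gen (hypothetical)", throughput_tflops=300.0, power_watts=600.0)

for acc in (older, newer):
    cost = energy_cost(acc, total_work_tflop=3_600_000.0, price_per_kwh=0.10)
    print(f"{acc.name}: {acc.tflops_per_watt():.2f} TFLOP/s per watt, energy cost {cost:.2f}")
```

The sketch shows why a card that draws more power can still be cheaper to run: if it finishes the same job proportionally faster, the total energy consumed, and therefore the cost, goes down.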

Frequently Asked Questions (FAQs)

Q: Can you give a description of the Nvidia H100 GPU?

A: The Nvidia H100 is a new chip made for AI and deep learning applications. As the most powerful GPU technology to date, it delivers performance that can greatly speed up artificial intelligence (AI), machine learning (ML), and high-performance computing (HPC) workloads. Its architecture is designed to handle large amounts of data and complex calculations, which makes it well suited to scientists, researchers, and developers working in these areas.

Q: What video and gaming capabilities does the Nvidia H100 have?

A: Although its main focus is AI and deep learning, the Nvidia H100’s robust design allows it to process high-resolution video. That said, it is not optimized for gaming: it was created as a compute accelerator for AI research rather than for the video game industry. Nonetheless, when used to host rich AI models, it could support game development.

Q: Are there also other GPUs recommended for deep learning?

A: Yes. Apart from the Nvidia H100, other GPUs are also recommended for deep learning, for instance Nvidia’s A100 and V100, both of which target AI projects. Each card has its own features, but all belong to Nvidia’s AI-focused product line. As the newest of the three, the H100 offers better speed, scalability, and efficiency than the earlier models, so it is worth considering, depending on your needs.

Q: What category on Amazon does the Nvidia H100 GPU fall under?

A: On Amazon, graphics cards (also listed as video cards) are found in the Computers & Accessories section. The H100 is aimed at the high-end computation required by AI technologies, so people who want to try more advanced machine learning techniques with powerful computing components may want to look at this subcategory.

Q: What do customers also search for when looking at the Nvidia H100?

A: When customers look at the NVIDIA H100, they usually also search for related high-performance computing components and accessories. These may include faster memory modules, cutting-edge CPUs, motherboards capable of supporting advanced computing tasks, and cooling systems suited to intensive operation. Alongside the H100, users may also look for software needed for AI or machine learning projects and for other GPUs designed for similar tasks.

Q: Could you provide product information for the Nvidia H100?

A: The NVIDIA H100 GPU is built on a new architecture called Hopper, which introduces technology features that transform AI work and high-performance computing. It has a wide memory interface for fast data processing and supports the latest data transfer standards and connectivity options. Product information often stresses its ability to handle AI models of unprecedented size, thanks to its advanced chip design and strong performance metrics.

Q: How does the product description highlight the Nvidia H100’s ideal use cases?

A: The product description of NVIDIA’s H100 GPU highlights deep learning, AI research, and complex scientific computation as key use cases. It supports this by pointing to the high bandwidth and processing capability needed to run large-scale AI models or simulations. A good description should also indicate efficiency gains over prior generations, leaving no doubt about whom this GPU is made for: professionals and organizations seeking maximum computational power for their AI applications.

Q: What is the best way to buy the Nvidia H100?

A: The best place to buy the NVIDIA H100 is a specialist computer electronics retailer that is an authorized Nvidia dealer, because such stores stock genuine products covered by the manufacturer’s warranty. If anything goes wrong during use, the store’s customer service can handle it. Alternatively, some buyers purchase directly from Nvidia or from major online marketplaces such as Amazon, where customer reviews and ratings are also available.

Q: How can customers ensure that prices remain competitive when they buy the Nvidia H100?

A: The simplest way is to compare prices across retailers to find those offering the same product for less. Another is to watch for price changes, especially during sales periods, when discounts may apply. Subscribing to your favorite stores’ newsletters or setting up alerts on large online marketplaces can also help you get the NVIDIA H100 at a better price than usual. Finally, forums and groups covering computing hardware can point you to good deals or the best available discounts.

Related Products:

- NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $1199.00

- NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $650.00

- NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $900.00

- NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $650.00

- NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module: $850.00

- NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module: $700.00

- NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module: $550.00

- NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module: $550.00

- OSFP-FLT-800G-PC2M 2m (7ft) 2x400G OSFP to 2x400G OSFP PAM4 InfiniBand NDR Passive Direct Attached Cable, Flat top on one end and Flat top on the other: $300.00

- OSFP-800G-PC50CM 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable: $105.00

- OSFP-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable: $600.00

- OSFP-FLT-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Flat top on the other: $600.00