The explosive growth of Large Language Models (LLM) and Mixture-of-Experts (MoE) architectures is fundamentally reshaping the underlying logic of computing infrastructure. As model parameters cross the trillion mark, traditional cluster architectures—centered on standalone servers connected by standard networking—are hitting physical and economic ceilings.

In this context, NVIDIA’s GB200 NVL72 is not just a hardware iteration; it marks the official arrival of the “Rack-Scale Computing” era. By integrating 36 Grace CPUs and 72 Blackwell GPUs into a single rack with an innovative copper spine, NVIDIA has created a single computing domain with 130TB/s of aggregate bandwidth.

Table of Contents

ToggleThe Evolution of Computing Paradigms

From Heterogeneous Servers to Exascale Racks

For the past decade, performance gains relied on Moore’s Law and Domain-Specific Architecture (DSA). However, with AI models growing 10x annually, single-GPU gains can no longer keep pace. This has led to the “Interconnect Wall”.

Traditional x86 server architectures face significant limitations:

PCIe Bandwidth Constraints: Even PCIe Gen5 x16 provides only 128GB/s, far lower than GPU memory bandwidth, leaving GPUs idle during data transfers.

Communication Latency: Data must travel through a long chain of PCIe, NICs, and switches, introducing heavy latency.

Energy Inefficiency: In spine-leaf networks, massive power is consumed in Optical-Electrical-Optical (OEO) conversions rather than computation.

The Design Philosophy: Exascale in a Rack The GB200 NVL72 concentrates the core units of a data center into one rack. It is no longer a collection of servers, but a physically separated yet logically unified giant GPU.

| Feature | Traditional H100 IB Cluster | GB200 NVL72 Rack System |

| Computing Unit | Independent server nodes | Unified NVLink domain |

| Interconnect Media | Optical fiber | Copper |

| Communication BW | 900 GB/s (NVLink 4) | 1.8 TB/s (NVLink 5) |

| Aggregate BW | Limited by switch ports | 130 TB/s (Full Mesh) |

| Cooling | Primarily Air-cooled | Full Liquid Cooling |

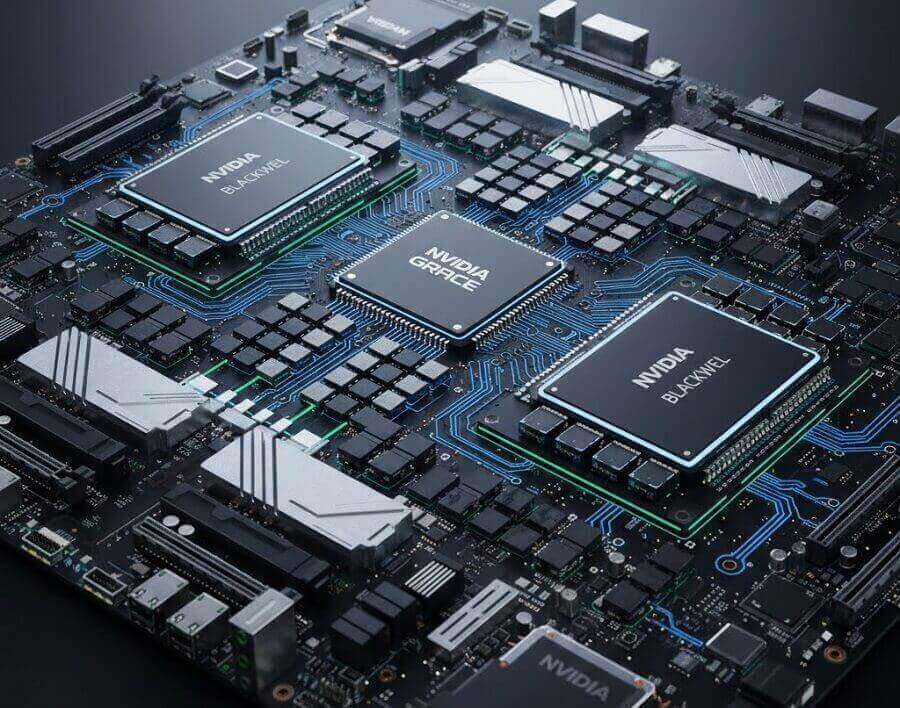

Core Unit: GB200 Grace Blackwell Superchip

The foundation is the GB200 Superchip, featuring a tight CPU+GPU coupling: one Grace CPU paired with two Blackwell GPUs.

Blackwell GPU: Introduces the second-generation Transformer Engine supporting FP4 precision, enabling 30x faster AI inference performance. The two GPUs connect via a 10TB/s NVLink-C2C, acting as a unified unit.

Grace CPU: Features 72 high-performance ARM Neoverse V2 cores. Its value lies in being a high-speed memory extension; with 500GB/s LPDDR5X bandwidth, it solves memory capacity bottlenecks for trillion-parameter models.

NVLink-C2C: Provides 900GB/s bidirectional bandwidth. This allows Unified Memory Addressing, where the GPU accesses CPU memory as if it were local VRAM, bypassing complex PCIe protocols. It also consumes only 1/5 the power of PCIe Gen5.

Mechanical Design: Deconstructing the Rack

The Compute Tray

The 1U-thin Compute Tray is the basic unit, housing two GB200 Superchips (2 CPUs, 4 GPUs). To maximize signal integrity and fit the tight 1U space, LPDDR5X memory is soldered directly to the motherboard. Massive cold plates cover these chips to dissipate thousands of watts of heat.

The NVLink Switch Tray

Nine 1U Switch Trays enable full connectivity. Each tray houses two fifth-generation NVLink Switch chips. With 144 ports per tray, the 1,296 total ports perfectly match the 72 GPUs (72 GPUs × 18 links). While the front panel handles management via RJ45, the data throughput relies entirely on rear blind-mate connectors.

Blind Mate Connectivity

The system utilizes high-precision floating connectors at the rear of the trays. When a tray is pushed into the rack, it automatically “bites” into the copper backplane, simplifying maintenance and avoiding errors from manual cabling.

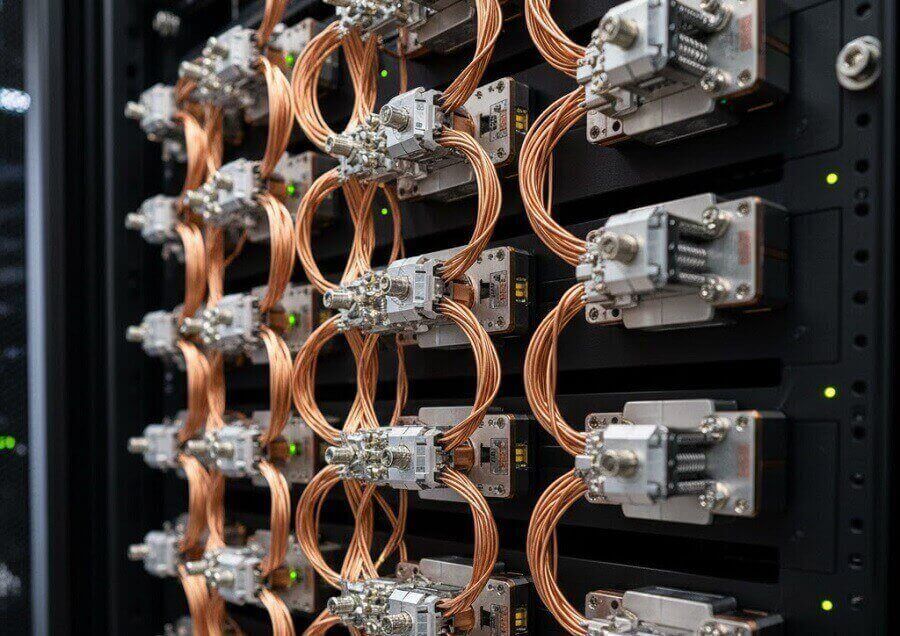

The Copper Revolution: The Copper Spine

NVIDIA’s choice to return to copper for intra-rack connections is a deep consideration of physics.

Power Efficiency: Optical links require power-hungry DSPs and lasers. Passive copper cables require no power, saving 20kW per rack.

Cost & Reliability: Copper is a fraction of the cost of optical components and has virtually no lifespan limits.

The “Spine”: The backplane integrates over 5,000 high-frequency copper cables. This “hard-wires” the NVLink topology, ensuring every GPU port is precisely routed to the switch trays. This is an engineering feat of signal integrity and mechanical reliability.

Building a 130TB/s Communication Matrix

The fifth-generation NVLink delivers 1.8TB/s bidirectional bandwidth per GPU, 14x faster than PCIe Gen5.

SHARP (Scalable Hierarchical Aggregation and Reduction Protocol): The NVLink Switch isn’t just a pipe; it’s a computer. It features built-in mathematical units to perform “All-Reduce” operations (summing gradients) directly inside the switch. This “In-Network Computing” reduces traffic and is key to the 4x improvement in training performance. The resulting 130TB/s aggregate bandwidth is higher than total global internet peak traffic.

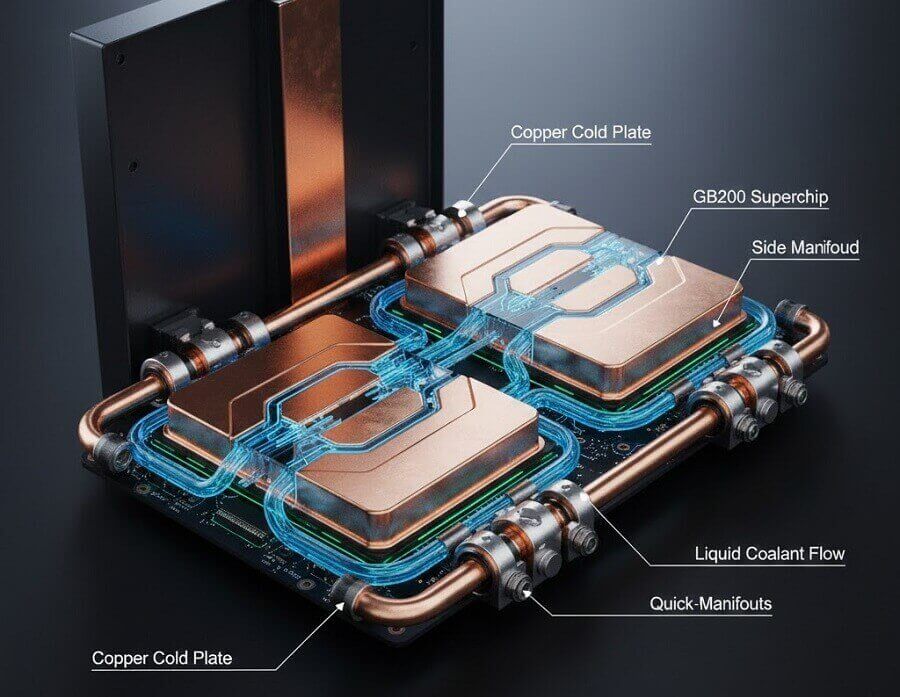

Thermal and Power Challenges

A 120kW rack is 10x the density of traditional air-cooled setups.

Cold Plate Liquid Cooling: Coolant enters trays via side manifolds with leak-proof quick-connects. It enters at roughly 25°C and exits above 45°C.

Power Architecture: Centralized Power Shelves at the top and bottom feed high-voltage DC through a vertical Bus Bar at the rear. This eliminates massive cable bundles and reduces resistance (I²R) losses.

Scale-Up vs. Scale-Out

The GB200 architecture balances node-level expansion (Scale-Up) and cluster-level networking (Scale-Out).

| Feature | NVL72 | NVL576 |

| GPU Count | 72 | 576 (8 Racks) |

| Switch Layers | 1 Layer (L1) | 2 Layers (L1 + L2) |

| Interconnect | Copper Backplane | Optical (between racks) |

For massive clusters, NVIDIA uses Quantum-X800 InfiniBand for AI training and Spectrum-X800 Ethernet for multi-tenant cloud environments.

Software-Defined Performance

The hardware is optimized for advanced parallel strategies:

Tensor Parallelism (TP): NVL72 allows TP to span all 72 GPUs, significantly reducing latency for the largest models.

MoE Acceleration: The all-to-all communication pattern of Mixture-of-Experts models is perfectly handled by the full-mesh NVLink, eliminating “communication hotspots”.

Conclusion: The New Infrastructure

The GB200 NVL72 signals that the unit of AI compute has shifted from the “server” to the “rack”. With copper’s resurgence and the mandatory adoption of liquid cooling, data center infrastructure must undergo a total upgrade. Embracing this architecture is no longer just a performance choice—it’s a choice for survival in the AI era.

Related Products:

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

-

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1200.00

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1200.00

-

NVIDIA MCP7Y00-N001 Compatible 1m (3ft) 800Gb Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Direct Attach Copper Cable

$160.00

NVIDIA MCP7Y00-N001 Compatible 1m (3ft) 800Gb Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Direct Attach Copper Cable

$160.00

-

NVIDIA MCA7J60-N004 Compatible 4m (13ft) 800G Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Active Copper Cable

$800.00

NVIDIA MCA7J60-N004 Compatible 4m (13ft) 800G Twin-port OSFP to 2x400G OSFP InfiniBand NDR Breakout Active Copper Cable

$800.00

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

-

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

-

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$850.00

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$850.00

-

NVIDIA MCP7Y70-H001 Compatible 1m (3ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$120.00

NVIDIA MCP7Y70-H001 Compatible 1m (3ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$120.00