Most of the attention at Computex was focused on NVIDIA’s new DGX GH200 and MGX — both of which are NVIDIA’s system-level AI products, regardless of whether they are reference designs or complete servers. Those chips, boards, and systems related to CPUs and GPUs have always been hot topics for NVIDIA, as AI and HPC are just that popular.

But actually, in the context of AI HPC, especially generative AI, or what many people now call “large model” computing, and networking is also very important. In other words, it takes a large number of servers to work together to solve problems, and a large-scale cluster is needed to expand computing power across systems and nodes. Therefore, performance issues are not just about the computing power of CPUs, GPUs, and AI chips within a node.

Previously, Google mentioned that in the overall AI infrastructure, the importance of system-level architecture is even higher than that of TPU chip microarchitecture. Of course, this “system-level” may not necessarily cover networking across nodes, but obviously, when a bunch of chips works together to do calculations, the system and network become performance bottlenecks.

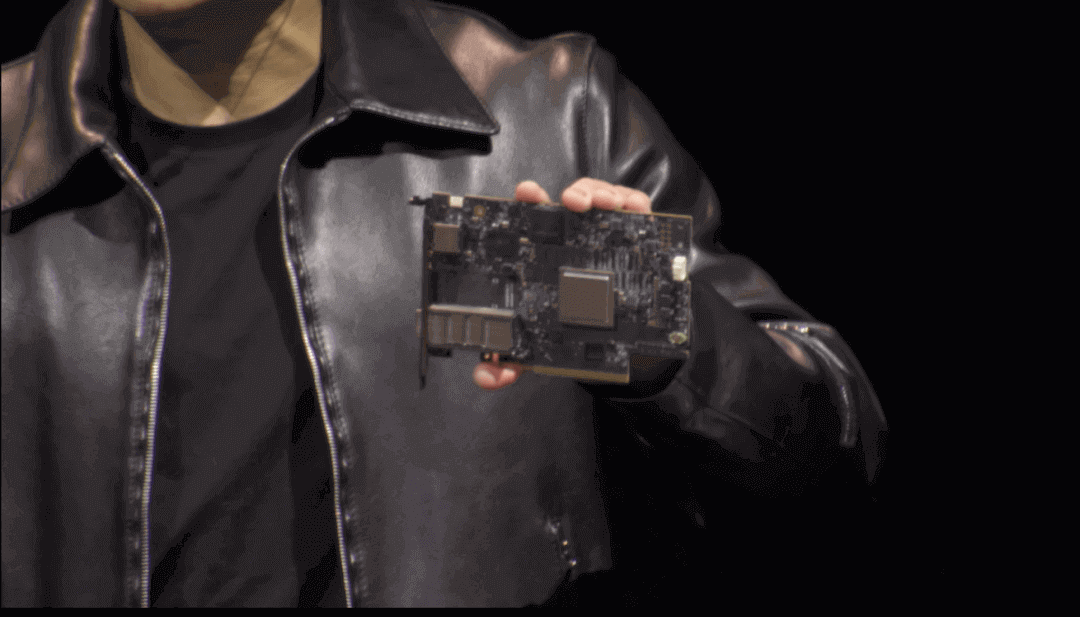

That’s why DPU is so important — rather than a subjective way to sell or compete with existing products in the market. NVIDIA’s DPU and other networking products are more like short-board supplements for their own products, subjectively not like competing with others or competing with existing products in the market. From this perspective, NVIDIA’s hardware products constitute a complete ecosystem horizontally: for example, the DPU is subjectively not intended to compete with anyone but is one part of their existing products.

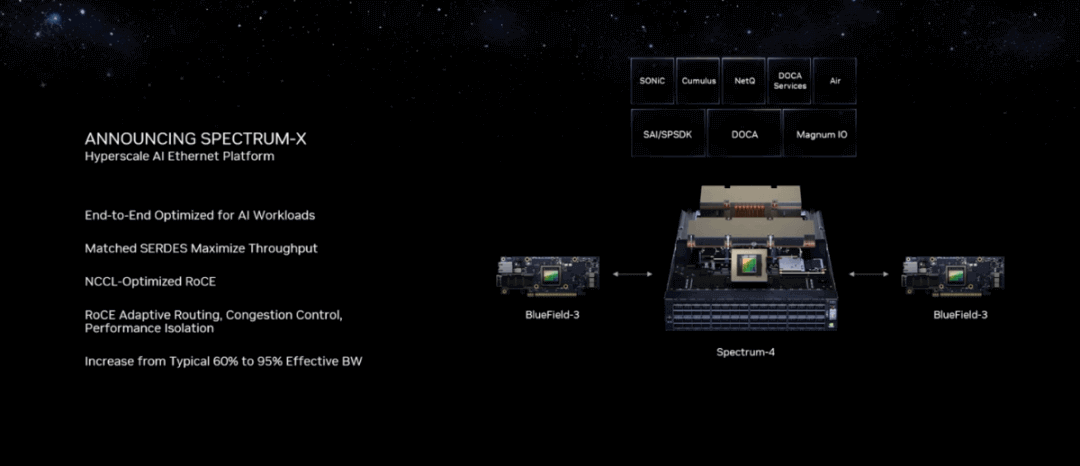

At Computex, NVIDIA announced networking products centered around its Spectrum-X Ethernet platform. NVIDIA claims this is the world’s first high-performance Ethernet product designed specifically for AI, particularly for “generative AI workloads that require a new kind of Ethernet.” We haven’t talked much about NVIDIA’s networking products in the past, including its data processing unit (DPU). With the introduction of Spectrum-X, this article attempts to discuss this Ethernet product as well as the logic behind NVIDIA’s networking products.

Table of Contents

ToggleWhy does NVIDIA Want to Build a “Switch”?

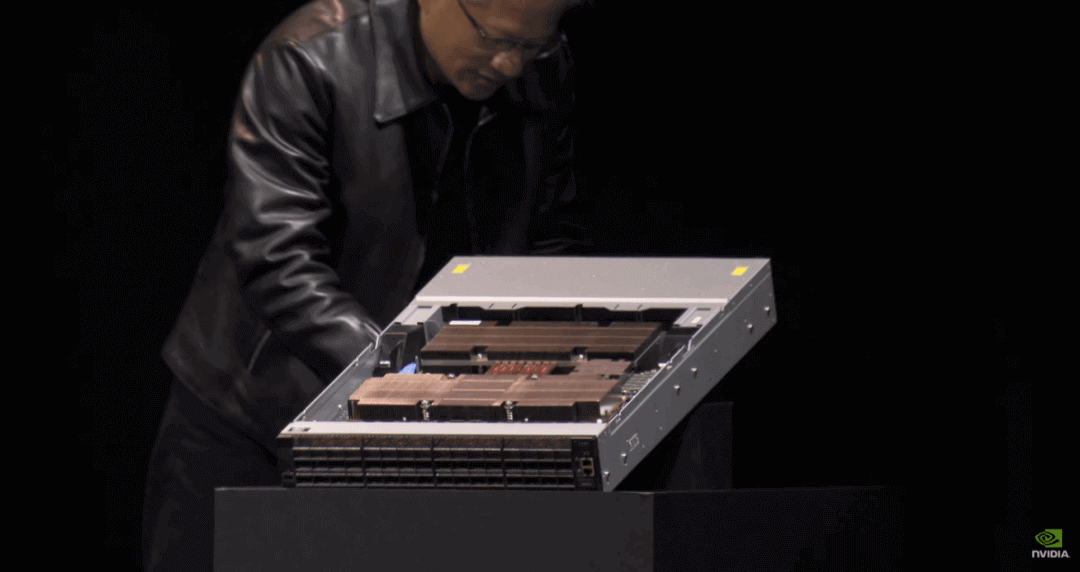

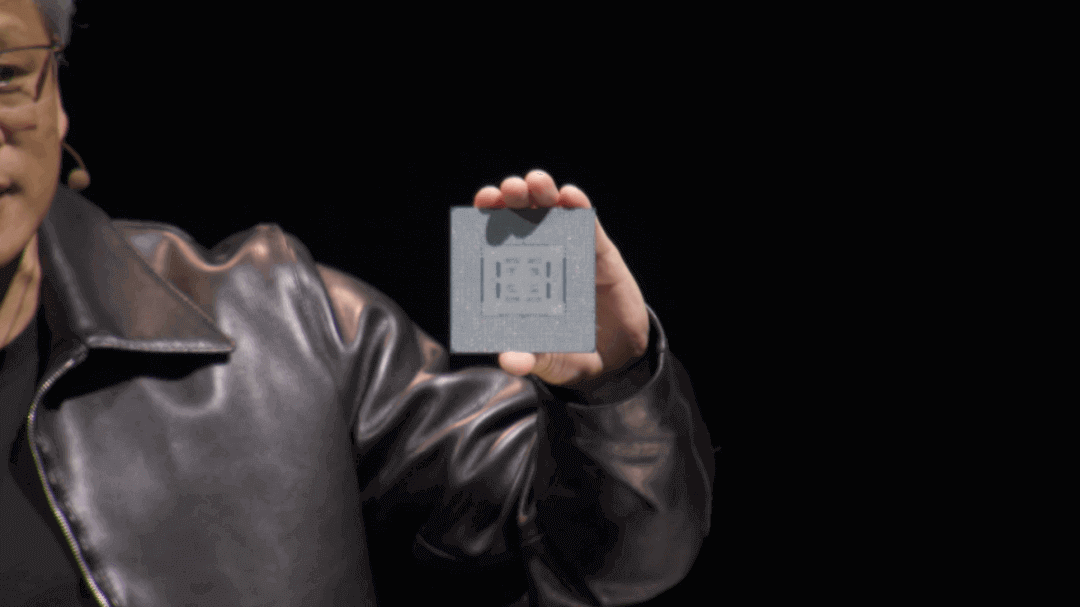

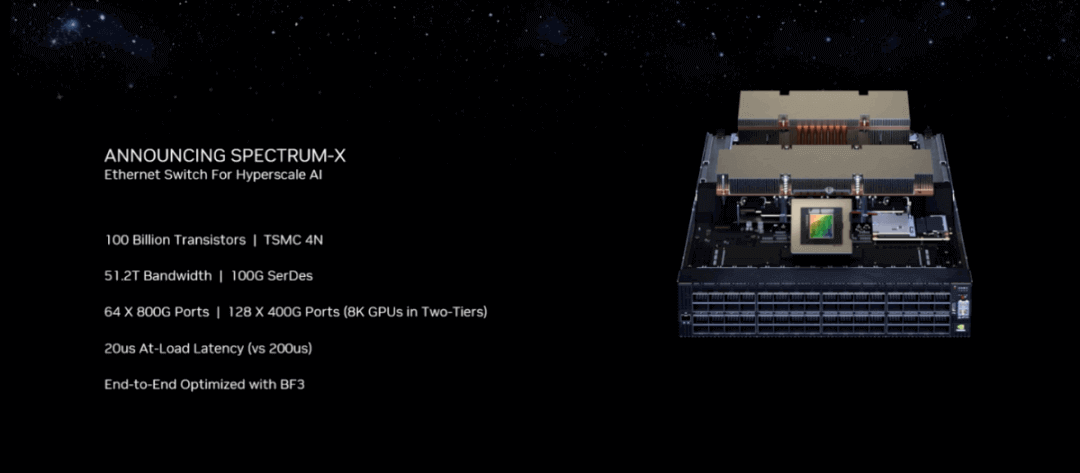

The two core components inside the Spectrum-X platform are the Spectrum-4 Ethernet Switch and the BlueField-3 DPU. The part about the DPU is not much explained; the other part of the Spectrum switch-related, the actual GTC last year NVIDIA released the Spectrum-4 400Gbps switch. The chip level is based on the Spectrum ASIC – Huang Renxun showed the chip at the Computex keynote, which is a big guy that includes 100 billion transistors, 90x90mm, 800 solder balls at the bottom of the chip package, and 500W power consumption.

The announcement of the “first high-performance Ethernet architecture” built specifically for AI, the Spectrum-4 Ethernet Switch system, is now available for CSPs.

As shown in the figure, the system boasts a total of 128 ports with a bandwidth capacity of 51.2TB/s, which is twice as much as traditional Ethernet switches. According to the company, this new technology will enable network engineers, AI data scientists, and cloud service providers to produce results and make decisions at a faster rate, while also enabling generative AI clouds. High bandwidth and low latency are crucial for alleviating performance bottlenecks during GPU scaling across nodes. The entire switch consumes 2800W of power.

At last year’s GTC, NVIDIA explained that the switch was not intended to compete with the conventional network switches in handling routine “mouse flow” traffic, but instead focuses on processing “elephant flow” traffic, leveraging hardware products for large-scale AI, digital twins, and simulation applications.

“Traditional switches are too slow for handling the current generative AI workloads. Moreover, we are still at the beginning of the AI revolution. Traditional switches may suffice for commodity clouds, but they cannot provide the required performance for AI cloud loads that involve generative AI,” said Gilad Shainer, NVIDIA’s Networking SVP during his keynote speech.

During the pre-briefing, a reporter asked specifically if NVIDIA Spectrum competes directly with switches from Arista and other companies. Shainer’s response was that there is no competition: “Other Ethernet switches on the market are used to build ordinary commodity clouds, or north-south traffic that includes user access and cloud control. However, there is currently no solution on the market that can meet the demands of generative AI for Ethernet. As the world’s first Ethernet network for east-west traffic in generative AI, Spectrum-4 has created a brand new Ethernet solution specifically targeting this goal.” Shainer also mentioned during the briefing that Broadcom’s existing switching products do not compete with Spectrum-4. NVIDIA emphasizes that Spectrum-X creates a lossless Ethernet network, which may be particularly important in explaining the Spectrum-X platform.

InfiniBand vs Ethernet

Ethernet has evolved over time. Lossless is specific because Ethernet was originally designed for lossy network environments. In other words, packet loss is allowed on this network. To ensure reliability, the upper layer of the IP network requires the TCP protocol. That is, if packet loss occurs during packet transmission, the TCP protocol enables the sender to retransmit the lost packets. However, due to these error correction mechanisms, latency increases, which can cause problems for certain types of applications. Additionally, in order to deal with sudden traffic spikes in the network, switches need to allocate extra cache resources to temporarily store information, which is why Ethernet switch chips are larger and more expensive than InfiniBand chips of similar specifications.

However, “lossy networks are unacceptable for high-performance computing (HPC) data centers.” Huang Renxun stated, “The entire cost of running an HPC workload is very high, and any loss in the network is difficult to bear.” Furthermore, due to requirements such as performance isolation, lossy networks are indeed difficult to bear. NVIDIA has been using a networking communication standard called InfiniBand. InfiniBand is commonly used in HPC applications that require high throughput and low latency. Unlike Ethernet, which is more universal, InfiniBand is better suited for data-intensive applications.

InfiniBand is not exclusive to NVIDIA. It was originally developed by a number of companies, including Intel, IBM, and Microsoft, among others, and there was even a specialized alliance called IBTA. Mellanox began promoting InfiniBand products around 2000. According to Wikipedia’s introduction, the initial goal of InfiniBand was to replace PCI in I/O and Ethernet in interconnecting machine rooms and clusters.

Unfortunately, InfiniBand was developed during the period of the dot-com bubble burst, and its development was suppressed. Participants such as Intel and Microsoft all had new choices. However, according to the TOP500 list of supercomputers in 2009, there were already 181 internal connections based on InfiniBand (the rest were Ethernet), and by 2014, more than half of them were using InfiniBand, although 10Gb Ethernet quickly caught up in the following two years. When NVIDIA acquired Mellanox in 2019, Mellanox had already become the main supplier of InfiniBand communication products on the market.

From the design perspective, Ethernet, which was born in the 1980s, was only concerned with achieving information interoperability among multiple systems. In contrast, InfiniBand was born to eliminate the bottleneck in cluster data transmission in HPC scenarios, such as in terms of latency, and its Layer 2 switching processing design is quite direct, which can greatly reduce forwarding latency. Therefore, it is naturally suited for HPC, data centers, and supercomputer clusters: high throughput, low latency, and high reliability.

From the reliability point of view, InfiniBand itself has a complete protocol definition for network layers 1-4: it prevents packet loss through end-to-end flow control mechanisms, which in itself achieves the lossless property. Another major difference between the two is that InfiniBand is based on a switched fabric network design, while Ethernet is based on a shared medium shared channel. Theoretically, the former is better able to avoid network conflict problems.

Since InfiniBand is so good, why does Nvidia want to develop Ethernet? Thinking from intuition, Ethernet’s market base, versatility and flexibility should be important factors. In his keynote, Huang talked about how “we want to bring generative AI to every data center,” which requires forward compatibility; “many enterprises are deploying Ethernet,” and “to get InfiniBand capability is hard for them, so we’re bringing that capability to the Ethernet market. This is the business logic behind the Spectrum-4 rollout. But we think that’s definitely not the whole story.

NVIDIA is working on both Ethernet and InfiniBand products, with the former being the Spectrum Ethernet platform and the latter being called Quantum InfiniBand. If you look at the official page, you will find that InfiniBand solutions “deliver unmatched performance at lower cost and complexity on top of HPC, AI and supercluster cloud infrastructures”; while Spectrum is accelerated Ethernet switching for AI and cloud. Clearly, the two are competing to some degree.

Why Ethernet?

In his keynote, Huang scientifically explained the different types of data centers – in fact, at GTC last year, NVIDIA had clearly divided data centers into six categories. And in the AI scenario we are discussing today, data centers can be divided into two main categories. One category is the one that needs to be responsible for a whole bunch of different application loads, where there may be many tenants and weak dependencies between loads.

But there is another category typically such as supercomputing or the now popular AI supercomputing, which has very few tenants on it (as few as 1 bare metal) and tightly coupled loads – demanding high throughput for large computational problems. The difference in infrastructure required by these two types of data centers is significant. Judging from intuition, the most primitive lossy environment of Ethernet would not be suitable for the latter requirement. The reasons for this have already been addressed in the previous article.

SemiAnalysis recently wrote an article that specifically talked about the many problems with InfiniBand – mainly technical, which can be used as a reference for NVIDIA to develop Ethernet at the same time. Some of them are extracted here for reference. In fact, both InfiniBand and Ethernet themselves are constantly evolving.

The flow control of InfiniBand uses a credit-based flow control mechanism. Each link is pre-assigned with some specific credits — reflecting attributes such as link bandwidth. When a packet is received and processed, the receiving end returns a credit to the sending end. Ideally, such a system would ensure that the network is not overloaded, as the sender would need to wait for the credits to return before sending out more packets.

But there are problems with such a mechanism. For example, if a sending node sends data to a receiving node at a faster rate than the receiving node can process the data, the receiving node’s buffer may become full. The receiving node cannot return credits to the sending node, and as a result, the sending node cannot send more data packets because the credits are exhausted. If the receiving node cannot return credits and the sending node is also a receiving node for other nodes, the inability to return credits in the case of bandwidth overload can lead to back pressure spreading to a larger area. Other problems include deadlocks and error rates caused by different components.

Some inherent issues with InfiniBand become more severe as the system’s scale and complexity increase. Currently, the largest commercially implemented InfiniBand solution is probably from Meta, where a research cluster deployed a total of 16,000 NICs and 16,000 A100 GPUs.

This scale is undoubtedly massive, but SemiAnalysis states that training GPT-4 will require an even larger scale, and future “large model” developments will likely require cluster expansion. In theory, InfiniBand can continue to expand its overall capacity, but it will increasingly suffer from the effects of inherent issues. From an inference perspective, latency and performance can still benefit from InfiniBand, but for inference loads, different requests will be transmitted at various speeds continuously. Moreover, future architectures will require multiple large models to be included in various batch sizes within the same large-scale cluster, which demands continuous credit-based flow control changes.

The credit flow control mechanism is difficult to respond quickly to network environment changes. If there is a large amount of diverse traffic within the network, the receiving node’s buffer state can change rapidly. If the network becomes congested, the sending node is still processing earlier credit information, making the problem even more complex. Moreover, if the sending node constantly waits for credits and switches between the two states of data transmission, it can easily cause performance fluctuations.

In terms of practicality, NVIDIA’s current Quantum-2 achieves bandwidths of 25.6TB/s, which at least numerically speaking, is lower than Spectrum-4’s 51.2TB/s. The faster Quantum chips and infrastructure will not be available until next year, which creates a different pace. Additionally, from a cost perspective, achieving the same scale (8000+ GPUs) of GPU conventional deployment requires an additional layer of switching and significantly more cables (high-cost optical cables). Therefore, the typical scale InfiniBand network deployment cost is significantly higher than Ethernet. (DPU and NIC costs are not considered here.)

From the customer’s perspective, the market of Ethernet is much larger than InfiniBand, which also helps reduce deployment costs. There are other specific comparable factors, such as traditional service front-end systems based on Ethernet and the supplier binding issue with InfiniBand for customers. Ethernet obviously provides more choices, and its deployment elasticity and scalability may also be better. At the technical level, there seems to be potential value in future deployments of optical transmission infrastructure for Ethernet.

These may be the theoretical basis for NVIDIA’s focus on Ethernet or part of the reason NVIDIA chose Ethernet for generative AI clouds. However, one reason that should only be taken as a reference is that InfiniBand has evolved greatly by NVIDIA, and many inherent problems have solutions.

Finally, let’s talk about the question mentioned at the beginning, which is that Ethernet was originally a lossy network. But actually, with the development of technologies such as RoCE (RDMA over Converged Ethernet), some of InfiniBand’s advantages have also been brought to Ethernet. In fact, technology expansion is to some extent the integration of the advantages of different technologies, including InfiniBand’s high performance and lossless, Ethernet’s universality, cost-effectiveness, and flexibility, etc.

The RoCE mentioned in the Spectrum-X platform features achieves losslessness on Ethernet networks by relying on priority-based flow control (PFC) on the endpoint side NIC, rather than switch devices. In addition, RoCE++ has some new optimized extensions, such as ASCK, which handles packet loss and arrival order issues, allowing the receiving end to notify the sending end to only retransmit lost or damaged packets, achieving higher bandwidth utilization; there are also ECN, flow control mechanism, and error optimization, all of which contribute to improving efficiency and reliability. In addition, to alleviate the scalability problems of endpoint NICs on standard Ethernet with RoCE networks, the Bluefield NIC mode can be used, and the overall cost of DPU can still be diluted by Ethernet and some new technologies.

In his keynote speech, Huang Renxun specifically mentioned Spectrum-X, which mainly brings two important characteristics to Ethernet: adaptive routing and congestion control. In addition, NVIDIA has previously cooperated with IDC to issue a white paper report on the commercial value of Ethernet switching solutions.

In large-scale AI applications, perhaps Ethernet will be an inevitable choice in the future. Therefore, in the promotion of Spectrum-X, NVIDIA’s position is specially prepared for generative AI clouds, the “first” solution for east-west traffic of generative AI. However, there may be more reasons than Ethernet’s strong universality. Under AI HPC loads, there is a certain probability of the possibility of a comprehensive shift to Ethernet.

The development of different standards is itself a process of constantly checking and supplementing each other’s deficiencies, and absorbing the essence. Just like InfiniBand, there are various mitigation solutions to solve inherent defects, and some extended attributes of InfiniBand are also very helpful for its application in AI. This is a comparison problem between choice and technology development. We can wait and see whether NVIDIA will tilt towards the development of InfiniBand or Ethernet in the future, even if these two have their respective application scenarios.

Related Products:

-

NVIDIA(Mellanox) MMA1B00-E100 Compatible 100G InfiniBand EDR QSFP28 SR4 850nm 100m MTP/MPO MMF DDM Transceiver Module

$40.00

NVIDIA(Mellanox) MMA1B00-E100 Compatible 100G InfiniBand EDR QSFP28 SR4 850nm 100m MTP/MPO MMF DDM Transceiver Module

$40.00

-

NVIDIA(Mellanox) MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$139.00

NVIDIA(Mellanox) MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$139.00

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

-

NVIDIA(Mellanox) MFS1S00-H010E Compatible 10m (33ft) 200G HDR QSFP56 to QSFP56 Active Optical Cable

$365.00

NVIDIA(Mellanox) MFS1S00-H010E Compatible 10m (33ft) 200G HDR QSFP56 to QSFP56 Active Optical Cable

$365.00

-

NVIDIA(Mellanox) MFS1S00-H005E Compatible 5m (16ft) 200G HDR QSFP56 to QSFP56 Active Optical Cable

$355.00

NVIDIA(Mellanox) MFS1S00-H005E Compatible 5m (16ft) 200G HDR QSFP56 to QSFP56 Active Optical Cable

$355.00

-

NVIDIA(Mellanox) MCP1650-H003E26 Compatible 3m (10ft) Infiniband HDR 200G QSFP56 to QSFP56 PAM4 Passive Direct Attach Copper Twinax Cable

$80.00

NVIDIA(Mellanox) MCP1650-H003E26 Compatible 3m (10ft) Infiniband HDR 200G QSFP56 to QSFP56 PAM4 Passive Direct Attach Copper Twinax Cable

$80.00

-

NVIDIA(Mellanox) MCP1600-E01AE30 Compatible 1.5m InfiniBand EDR 100G QSFP28 to QSFP28 Copper Direct Attach Cable

$35.00

NVIDIA(Mellanox) MCP1600-E01AE30 Compatible 1.5m InfiniBand EDR 100G QSFP28 to QSFP28 Copper Direct Attach Cable

$35.00

-

NVIDIA(Mellanox) MCP1600-E002E30 Compatible 2m InfiniBand EDR 100G QSFP28 to QSFP28 Copper Direct Attach Cable

$35.00

NVIDIA(Mellanox) MCP1600-E002E30 Compatible 2m InfiniBand EDR 100G QSFP28 to QSFP28 Copper Direct Attach Cable

$35.00

-

HPE NVIDIA(Mellanox) P06248-B22 Compatible 1.5m (5ft) Infiniband HDR 200G QSFP56 to 2x100G QSFP56 PAM4 Passive Breakout Direct Attach Copper Cable

$80.00

HPE NVIDIA(Mellanox) P06248-B22 Compatible 1.5m (5ft) Infiniband HDR 200G QSFP56 to 2x100G QSFP56 PAM4 Passive Breakout Direct Attach Copper Cable

$80.00

-

NVIDIA(Mellanox) MC220731V-025 Compatible 25m (82ft) 56G FDR QSFP+ to QSFP+ Active Optical Cable

$99.00

NVIDIA(Mellanox) MC220731V-025 Compatible 25m (82ft) 56G FDR QSFP+ to QSFP+ Active Optical Cable

$99.00

-

NVIDIA(Mellanox) MC2207130-004 Compatible 4m (13ft) 56G FDR QSFP+ to QSFP+ Copper Direct Attach Cable

$45.00

NVIDIA(Mellanox) MC2207130-004 Compatible 4m (13ft) 56G FDR QSFP+ to QSFP+ Copper Direct Attach Cable

$45.00

-

NVIDIA NVIDIA(Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1400.00

NVIDIA NVIDIA(Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1400.00

-

NVIDIA NVIDIA(Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall bracket

$965.00

NVIDIA NVIDIA(Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall bracket

$965.00

-

NVIDIA NVIDIA(Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$828.00

NVIDIA NVIDIA(Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$828.00

-

NVIDIA (Mellanox) MCX621102AN-ADAT SmartNIC ConnectX®-6 Dx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket

$315.00

NVIDIA (Mellanox) MCX621102AN-ADAT SmartNIC ConnectX®-6 Dx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket

$315.00

-

NVIDIA (Mellanox) MCX631102AN-ADAT SmartNIC ConnectX®-6 Lx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket

$385.00

NVIDIA (Mellanox) MCX631102AN-ADAT SmartNIC ConnectX®-6 Lx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket

$385.00

-

NVIDIA (Mellanox) MCX4121A-ACAT Compatible ConnectX-4 Lx EN Network Adapter, 25GbE Dual-Port SFP28, PCIe3.0 x 8, Tall&Short Bracket

$249.00

NVIDIA (Mellanox) MCX4121A-ACAT Compatible ConnectX-4 Lx EN Network Adapter, 25GbE Dual-Port SFP28, PCIe3.0 x 8, Tall&Short Bracket

$249.00