NIC stands for Network Interface Controller, also known as a network adapter or network interface card, and it is used in computers and servers. A NIC communicates with switches, storage devices, servers, and workstations over network cables (twisted-pair copper, optical fiber, etc.). As networking has become more widespread and the technology more capable, many types of network cards have appeared, such as Ethernet cards and InfiniBand cards. This article focuses on the differences between Ethernet cards and InfiniBand adapters, to help you choose the most suitable network card.

What is an Ethernet card?

An Ethernet card is a network adapter that plugs into a motherboard slot and supports the Ethernet protocol standard. Each network adapter has a globally unique physical address, called a MAC address, and frames are delivered to the destination computer based on the destination adapter's MAC address. Ethernet cards come in many types and can be classified by bandwidth, network interface, or bus interface. An Ethernet card with an optical interface is called a fiber Ethernet card (Fiber-Ethernet adapter). It follows the fiber Ethernet communication standards and is usually connected to a fiber Ethernet switch with an optical fiber cable. Fiber-Ethernet adapters are among the most widely used network cards today, and they are commonly classified by bus interface and by bandwidth.
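A MAC address is 48 bits, usually written as six hexadecimal octets. Two bits in the first octet matter when reading one: the least-significant bit is the individual/group (I/G) bit, set for multicast and broadcast addresses, and the next bit is the universal/local (U/L) bit, set when the address was assigned by an administrator or software rather than the vendor (so global uniqueness only applies when it is clear). The first three octets of a vendor-assigned address are the vendor's OUI. The Python below is a minimal sketch that decodes these fields; the function name `describe_mac` and the sample address are illustrative.

```python
import re

# Six two-digit hex octets separated by ':' or '-', e.g. "00:1B:21:3A:4F:5C".
MAC_PATTERN = re.compile(r"^[0-9A-Fa-f]{2}([:-][0-9A-Fa-f]{2}){5}$")


def describe_mac(mac: str) -> dict:
    """Decode the IEEE 802 flag bits and the OUI of a 48-bit MAC address."""
    if not MAC_PATTERN.match(mac):
        raise ValueError(f"not a MAC address: {mac!r}")
    octets = [int(part, 16) for part in re.split(r"[:-]", mac)]
    first = octets[0]
    return {
        # I/G bit (least-significant bit of the first octet): 1 = group address
        "multicast": bool(first & 0x01),
        # U/L bit (next bit): 1 = locally administered, so not guaranteed unique
        "locally_administered": bool(first & 0x02),
        # First three octets: the vendor's Organizationally Unique Identifier
        "oui": "-".join(f"{octet:02X}" for octet in octets[:3]),
        # All-ones address: delivered to every station on the segment
        "broadcast": all(octet == 0xFF for octet in octets),
    }


print(describe_mac("00:1B:21:3A:4F:5C"))
```

For the sample address this prints `{'multicast': False, 'locally_administered': False, 'oui': '00-1B-21', 'broadcast': False}`, i.e. an ordinary vendor-assigned unicast address.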

Bus Interface Classification

Ethernet card bus interfaces include PCI, PCI Express (abbreviated PCIe or PCI-E), USB, and ISA. ISA and PCI are older bus interfaces that have gradually been phased out as newer technology matured. PCIe is currently the most widely used bus interface and is very common in industrial-grade equipment and servers.

1. How does the PCIe card work?

PCIe is a serial interconnect that works more like a network than a bus. Unlike older shared buses, which carry data from many sources over the same wires, PCIe uses point-to-point connections and routes traffic through switches. When a PCIe card is inserted into a slot, it establishes a logical connection (an interconnect, or link) with the port at the other end. Each link is a point-to-point channel between two PCIe ports that can send and receive ordinary PCI requests and interrupts.

2. What are the categories of PCIe cards?

Specifications: The number of lanes a PCIe card uses determines its physical specification (link width). Common widths are x1, x4, x8, x16, and x32 (x32 is rarely seen in practice). For example, a PCIe x8 card has eight lanes.

Version: PCIe replaced the older PCI and PCI-X bus standards and has been revised repeatedly to meet growing bandwidth demand. The first version, PCIe 1.0 (2.5 GT/s per lane), was released in 2002; it was followed by PCIe 2.0 (5 GT/s), PCIe 3.0 (8 GT/s), PCIe 4.0 (16 GT/s), PCIe 5.0 (32 GT/s), PCIe 6.0 (64 GT/s), and PCIe 7.0 (128 GT/s). Every PCIe version is backward compatible with earlier ones: a link trains to the highest rate both ends support.
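Lane count and per-lane transfer rate together set how much bandwidth a card can move. The GT/s figure is a raw signalling rate: PCIe 1.x/2.x use 8b/10b line encoding and PCIe 3.0 to 5.0 use 128b/130b, so the usable rate per lane is somewhat lower. The Python below is a rough sketch of that arithmetic. It ignores packet-level (TLP/DLLP) overhead, so real throughput is lower still, and it deliberately leaves out PCIe 6.0/7.0, which use PAM4 signalling with FLIT-based encoding.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency for PCIe 1.0-5.0.
# PCIe 1.x/2.x use 8b/10b encoding; PCIe 3.0-5.0 use 128b/130b.
# PCIe 6.0/7.0 (PAM4 signalling, FLIT-based encoding) are left out of this sketch.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}

# Link widths defined by the PCIe base specification for these generations.
LINK_WIDTHS = (1, 2, 4, 8, 12, 16, 32)


def pcie_gbit_per_s(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth in one direction, in Gb/s, before packet overhead."""
    if gen not in PCIE_GENERATIONS:
        raise ValueError(f"generation not covered by this sketch: {gen}")
    if lanes not in LINK_WIDTHS:
        raise ValueError(f"not a PCIe link width: x{lanes}")
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes


def pcie_gbyte_per_s(gen: int, lanes: int) -> float:
    """Same figure in gigabytes per second (8 bits per byte)."""
    return pcie_gbit_per_s(gen, lanes) / 8


for gen, lanes in [(2, 8), (3, 8), (3, 16), (4, 16)]:
    print(f"PCIe {gen}.0 x{lanes}: {pcie_gbit_per_s(gen, lanes):.1f} Gb/s "
          f"({pcie_gbyte_per_s(gen, lanes):.2f} GB/s) per direction")
```

PCIe 3.0 x16, for instance, works out to roughly 126 Gb/s (about 15.75 GB/s) per direction, and each newer generation doubles the per-lane figure.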

Classification of Bandwidth

As Internet traffic keeps growing, major ISPs are pushing network equipment to iterate continuously toward higher performance, and adapters have moved to 10G, 25G, 40G, and up to 100G data rates. The following sections describe each of these.
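To put these speeds in perspective, it helps to know how many packets per second a link must handle at full rate, since that is what the adapter has to process. The Python below is a small sketch assuming standard Ethernet framing: besides the frame itself, every frame occupies an 8-byte preamble/start-of-frame delimiter and a 12-byte minimum inter-frame gap on the wire, and the smallest legal frame is 64 bytes.

```python
# Fixed per-frame overhead on the wire for standard Ethernet.
PREAMBLE_SFD_BYTES = 8      # 7-byte preamble + 1-byte start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12   # minimum idle gap between frames
MIN_FRAME_BYTES = 64        # smallest legal frame, including the FCS


def line_rate_pps(link_gbps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Maximum frames per second in one direction of a full-duplex link."""
    if frame_bytes < MIN_FRAME_BYTES:
        raise ValueError("Ethernet frames are at least 64 bytes")
    bits_per_frame = (frame_bytes + PREAMBLE_SFD_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


for speed in (10, 25, 40, 100):
    print(f"{speed}GbE: {line_rate_pps(speed) / 1e6:.2f} Mpps with 64-byte frames")
```

This prints 14.88, 37.20, 59.52, and 148.81 Mpps. Larger frames lower the packet rate: with 1518-byte frames, a 10G link carries about 812,744 frames per second.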

1. 10G Fiber Adapter (Ethernet Server Adapter)

10G fiber network adapters are PCI-family bus cards (today almost always PCIe) that support 10G over single-mode or multimode fiber, and they are well suited to enterprise and campus backbone networks. With long-reach optics, 10G Ethernet can span up to about 100 kilometers, which meets the transmission requirements of a metropolitan area network (MAN). Deploying 10G at the backbone layer of a MAN can greatly simplify the network structure, reduce costs, and ease maintenance, and end-to-end Ethernet makes it possible to build a MAN with low cost, high performance, and rich service support.

2. 25G Fiber Adapter

25G fiber adapters fill the gap between the limited bandwidth of 10G Ethernet and the high cost of 40G Ethernet. They use 25 Gb/s single-lane physical layer technology, the same lane rate that 100G Ethernet builds on by running four 25 Gb/s lanes in parallel. Because the SFP28 package is based on, and the same size as, the SFP+ package, a 25G adapter port can run at 10G as well as 25G. With more bandwidth than 10G adapters, 25G fiber NICs meet the networking needs of high-performance computing clusters, and they are a key building block when upgrading to 100G or faster networks.

3. 40G Fiber Adapter

40G fiber adapters have a 40G QSFP+ interface that supports 40 Gb/s of transmission bandwidth, and they typically use a standard PCIe x8 slot for efficient and stable operation. Usually available in single-port and dual-port versions, 40G fiber NICs are a high-performance interconnect option for enterprise data centers, cloud computing, high-performance computing, and embedded environments.

4. 100G Fiber Adapter

The most common 100G fiber network adapters on the market today are single-port and dual-port models. Each port provides up to 100 Gb/s Ethernet; these cards support adaptive routing for reliable transport and offload vSwitch/vRouter processing from the host. 100G fiber network cards are a high-performance, flexible solution, with a range of hardware offloads and accelerators that improve the efficiency of data center networking and storage connections.

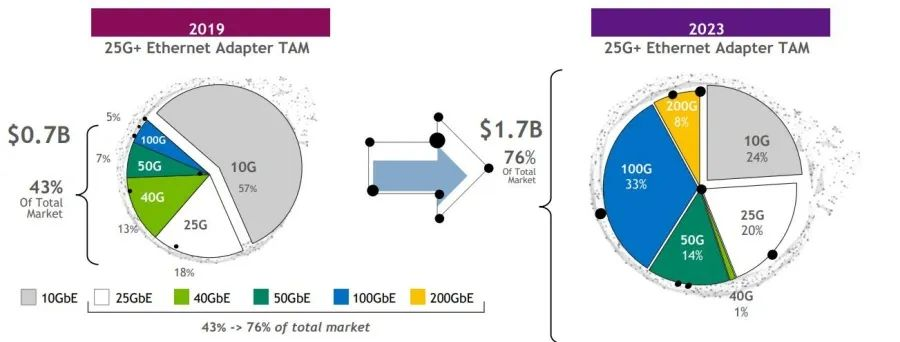

Server-to-switch connections keep moving to higher bandwidth. 25G Ethernet cards have become the mainstream choice for connecting 25G servers to 100G switches. As data centers move toward 400G at an unprecedented pace, server-to-switch links will grow to 100G, so 100G network cards also play an indispensable role in the data center.
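When 25G servers hang off a switch with 100G uplinks, a common sizing figure is the switch's oversubscription ratio: total server-facing bandwidth divided by total uplink bandwidth. The Python below is a minimal sketch of that calculation; the port counts in the example (48 × 25G server ports, 8 × 100G uplinks) are hypothetical.

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> Fraction:
    """Server-facing capacity divided by uplink capacity on a top-of-rack switch."""
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("the switch needs at least one uplink")
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


# Hypothetical leaf: 48 x 25G to servers (1200 Gb/s), 8 x 100G uplinks (800 Gb/s).
ratio = oversubscription(48, 25, 8, 100)
print(f"{float(ratio)}:1")
```

This prints `1.5:1`, meaning the servers could together offer 1.5 times more traffic than the uplinks can carry. A ratio of 1:1 (non-blocking) would need either fewer server ports or more 100G uplinks.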

What is an InfiniBand Adapter?

InfiniBand Network

InfiniBand is a computer network communication standard widely used in high-performance computing (HPC) because of its high throughput and ultra-low transmission latency. An InfiniBand network can scale out through switched fabrics to meet networking requirements of various sizes. As of 2014, InfiniBand was the most widely used interconnect among TOP500 supercomputers. In recent years, AI and big data applications have also adopted InfiniBand to build high-performance clusters.

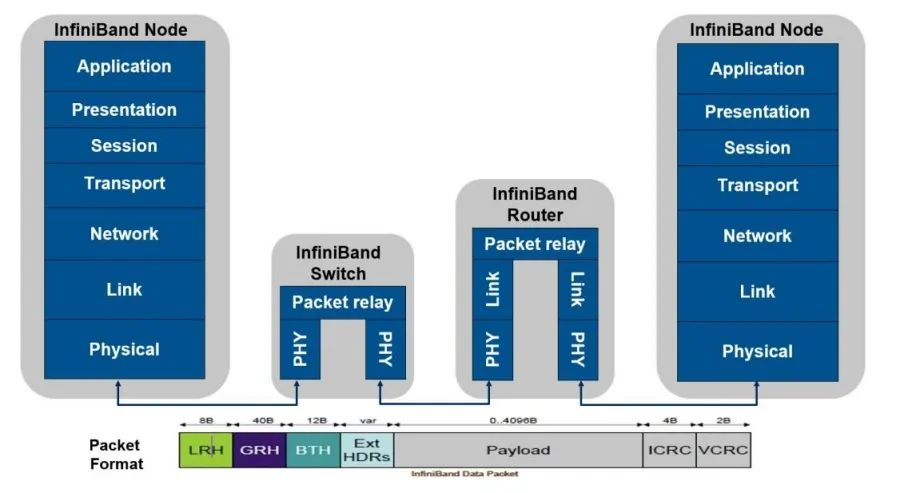

InfiniBand defines a layered protocol stack similar to the OSI seven-layer model. The InfiniBand fabric provides private, protected, direct channels between server nodes. Data and messages on these channels are moved by RDMA (Remote Direct Memory Access) instead of being processed by the CPU, so send and receive processing is offloaded to the InfiniBand adapter. Physically, InfiniBand adapters connect to the host CPU and memory over PCIe, and they provide higher bandwidth, lower latency, and greater scalability than many other communication protocols.

InfiniBand Adapter

The InfiniBand architecture defines a set of hardware components needed to deploy it, and the InfiniBand network card is one of them. The InfiniBand adapter is also called an HCA (Host Channel Adapter). The HCA is the point at which an InfiniBand end node, such as a server or storage device, connects to the InfiniBand network. The architects took care not to require that any particular HCA function be implemented in a single piece of hardware, firmware, or software; what matters is that the combined hardware, software, and firmware representing the HCA gives applications access to network resources. In practice, the queue structures an application uses to access the InfiniBand hardware appear directly in the application's virtual address space. The same address-translation mechanism lets the HCA access memory on behalf of user-level applications: applications typically refer to buffers by virtual address, and the HCA converts these to physical addresses when it transfers data.
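The translation step can be pictured with a toy model. When an application registers a buffer, the region records which physical page backs each virtual page, and the adapter later resolves a (key, virtual address) pair to a physical address, refusing anything outside the registered range. The Python below is an illustrative sketch only: `MemoryRegion`, its fields, and the 4 KiB page size are assumptions, real HCAs do this in hardware from tables set up by the driver, and this is not the verbs API.

```python
from dataclasses import dataclass
from typing import List

PAGE_SIZE = 4096  # assumed page size for this sketch


@dataclass
class MemoryRegion:
    """Toy registered memory region: virtually contiguous, physically scattered."""
    key: int                # hypothetical key the application quotes in work requests
    virt_base: int          # page-aligned virtual start address of the buffer
    length: int             # buffer length in bytes
    phys_pages: List[int]   # physical address of the page behind each virtual page

    def __post_init__(self):
        if self.virt_base % PAGE_SIZE:
            raise ValueError("virtual base must be page-aligned")
        if self.length > len(self.phys_pages) * PAGE_SIZE:
            raise ValueError("not enough physical pages to back the region")

    def translate(self, key: int, vaddr: int) -> int:
        """Map a virtual address to a physical address, as the adapter does before DMA."""
        if key != self.key:
            raise PermissionError("key does not match this memory region")
        offset = vaddr - self.virt_base
        if not 0 <= offset < self.length:
            raise PermissionError("address outside the registered region")
        page_index, page_offset = divmod(offset, PAGE_SIZE)
        return self.phys_pages[page_index] + page_offset


region = MemoryRegion(key=0x1234, virt_base=0x7F0000000000, length=3 * PAGE_SIZE,
                      phys_pages=[0x1A000, 0x5C000, 0x33000])
print(hex(region.translate(0x1234, 0x7F0000001010)))  # 0x5c010: page 1, offset 0x10
```

The key and bounds checks are what make it safe to let the adapter touch user memory directly: a request can only reach pages the application explicitly registered.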

Advantages of InfiniBand Adapter

- InfiniBand network cards provide a high-performance, highly scalable interconnect for servers and storage systems. HPC, Web 2.0, cloud computing, big data, financial services, virtualized data centers, and storage applications in particular see significant performance gains, resulting in shorter completion times and lower overall process costs.

- InfiniBand adapters are the ideal solution for HPC clusters that require high bandwidth, high message rates, and low latency to achieve high server efficiency and application productivity.

- InfiniBand cards offload protocol processing and data movement from the CPU to the interconnect, maximizing CPU efficiency and enabling ultra-fast processing of high-resolution simulations, large data sets, and highly parallelized algorithms.

How to Select an InfiniBand Network Card

- Network bandwidth: 100G, 200G, 400G

- Number of adapters in a single machine

- Port rate: 100Gb/s (HDR100/EDR), 200Gb/s (HDR)

- Number of ports: 1/2

- Host interface type: PCIe3/4 x8/x16, OCP2.0/3.0

- Whether the Socket-Direct or Multi-host function is required
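Port rate, port count, and host interface interact: the PCIe link has to carry the combined bandwidth of all ports, or the card cannot run every port at line rate at the same time. The Python below is a rough sketch of that check, using approximate per-direction PCIe throughput after line encoding (8b/10b for PCIe 1.x/2.x, 128b/130b for 3.0 to 5.0) and ignoring packet overhead, so passing it is necessary but not sufficient. The configurations listed are examples, not recommendations.

```python
# Approximate usable Gb/s per lane, per direction, after line encoding.
GBIT_PER_LANE = {
    1: 2.5 * 8 / 10,
    2: 5.0 * 8 / 10,
    3: 8.0 * 128 / 130,
    4: 16.0 * 128 / 130,
    5: 32.0 * 128 / 130,
}


def slot_can_feed(ports: int, port_gbps: float, gen: int, lanes: int) -> bool:
    """True if the PCIe link can carry every NIC port at full line rate at once."""
    slot_gbps = GBIT_PER_LANE[gen] * lanes
    return slot_gbps >= ports * port_gbps


# (description, ports, Gb/s per port, PCIe generation, lanes)
configs = [
    ("1 x HDR100 (100 Gb/s), PCIe 3.0 x16", 1, 100, 3, 16),
    ("1 x HDR (200 Gb/s), PCIe 3.0 x16", 1, 200, 3, 16),
    ("1 x HDR (200 Gb/s), PCIe 4.0 x16", 1, 200, 4, 16),
    ("2 x 100GbE, PCIe 3.0 x16", 2, 100, 3, 16),
    ("2 x 25GbE, PCIe 3.0 x8", 2, 25, 3, 8),
]
for label, ports, speed, gen, lanes in configs:
    verdict = "fits" if slot_can_feed(ports, speed, gen, lanes) else "slot-limited"
    print(f"{label}: {verdict}")
```

By this estimate, a single 200 Gb/s HDR port and a dual-port 100GbE card are both slot-limited on PCIe 3.0 x16 (about 126 Gb/s), while PCIe 4.0 x16 (about 252 Gb/s) covers 200 Gb/s. On PCIe 3.0 servers, spreading one card across two x16 links is one use of the Socket-Direct option mentioned in the list.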

InfiniBand vs. Ethernet

- Bandwidth: The two technologies serve different applications, so their bandwidth requirements differ. Ethernet is mostly used to interconnect end devices, where bandwidth demands are comparatively modest. InfiniBand is used to interconnect servers in high-performance computing, so it must address not only interconnection but also how to reduce CPU load during high-speed transmission. Thanks to RDMA, InfiniBand offloads the CPU during high-speed transfers and improves network utilization. As a result, rising transmission speeds do not force the CPU to spend more of its resources on network processing, which would otherwise hold back HPC performance.

- Latency: Switches and network adapters are best compared separately. Switches: Ethernet switches, operating at Layer 2 of the network model, have a relatively long processing pipeline, with forwarding latency generally of several µs (over 200 ns even with cut-through support). InfiniBand switches have very simple Layer 2 processing, and cut-through forwarding can reduce their forwarding delay to under 100 ns, much faster than Ethernet switches. Network adapters: with RDMA, InfiniBand cards forward packets without involving the CPU, which greatly reduces packet encapsulation and decapsulation delay (send/receive latency is typically about 600 ns). By contrast, send/receive latency for TCP and UDP applications over Ethernet is about 10 µs, more than ten times higher.

- Reliability: In high-performance computing, packet loss and retransmission have a large impact on overall performance, so the network protocol must guarantee lossless behavior at the mechanism level to achieve high reliability. InfiniBand is a complete network protocol with its own Layer 1 through Layer 4 formats. Packet transmission is governed by credit-based flow control: a sender transmits only when the receiving side has buffer space available. Because buffers do not build up in transit, latency jitter is kept to a minimum, producing a near-ideal clean network. Ethernet networks lack a scheduling-based flow control mechanism, so they must compensate in other ways (for example, with deeper switch buffers), which raises both cost and power consumption. And without end-to-end flow control, even moderately extreme traffic conditions can cause buffer congestion and packet loss, so forwarding performance fluctuates widely.

- Networking: In an Ethernet network, each server must send packets periodically so that switch forwarding entries stay up to date. When the number of nodes grows past a certain point, broadcast storms can occur and waste a great deal of bandwidth. Ethernet's flood-and-learn table mechanism can also create forwarding loops, which must be broken with protocols such as Spanning Tree. In addition, Ethernet was not designed for SDN, so packet formats or forwarding mechanisms must be modified to meet SDN requirements during deployment, which increases configuration complexity. InfiniBand, by contrast, was designed around SDN concepts from the start. Each Layer 2 InfiniBand subnet has a subnet manager that assigns node IDs (Local Identifiers, LIDs). The control plane then computes forwarding paths centrally and delivers them to the InfiniBand switches. As a result, a Layer 2 InfiniBand network can be brought up without manual configuration, flooding is avoided, and neither VLANs nor loop-breaking mechanisms are needed. This makes it straightforward to deploy a large Layer 2 network with tens of thousands of servers.
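The latency comparison in the list above can be turned into a rough one-way budget: the end hosts' send/receive cost plus one forwarding delay per switch on the path. The Python below is a back-of-the-envelope sketch that plugs in the approximate figures quoted above as assumed inputs (600 ns host latency and 100 ns per switch for InfiniBand; 10 µs host latency for TCP/UDP and the 200 ns cut-through figure for Ethernet switches). Cable propagation delay and queuing under load are ignored.

```python
from typing import NamedTuple


class Fabric(NamedTuple):
    name: str
    host_ns: float    # combined send + receive latency in the end hosts
    switch_ns: float  # forwarding latency per switch traversed


def one_way_latency_ns(fabric: Fabric, switch_hops: int) -> float:
    """Rough one-way latency: host send/receive cost plus per-switch forwarding."""
    if switch_hops < 0:
        raise ValueError("hop count cannot be negative")
    return fabric.host_ns + switch_hops * fabric.switch_ns


# Assumed inputs, taken from the approximate figures in the text.
infiniband = Fabric("InfiniBand (RDMA)", host_ns=600, switch_ns=100)
ethernet = Fabric("Ethernet (TCP/UDP, cut-through)", host_ns=10_000, switch_ns=200)

hops = 3  # e.g. leaf -> spine -> leaf
ib = one_way_latency_ns(infiniband, hops)
eth = one_way_latency_ns(ethernet, hops)
print(f"{infiniband.name}: {ib:.0f} ns")
print(f"{ethernet.name}: {eth:.0f} ns ({eth / ib:.1f}x)")
```

With three switch hops this gives 900 ns versus 10,600 ns (about 11.8x). Even when Ethernet switches are credited with their best cut-through figure, the host-side TCP/UDP stack dominates the gap.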
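The reliability point in the list above comes down to what happens when a sender is faster than the receiver. InfiniBand's lossless behavior rests on credit-based flow control, where each credit stands for one free receive buffer and the sender simply waits when it has none. The small discrete-time Python simulation below contrasts that with a queue that drops packets when full. It is a simplified illustration: buffer size, rates, and burst length are arbitrary assumptions, and real InfiniBand tracks credits per virtual lane with more detailed accounting.

```python
def simulate(packets, send_rate, drain_rate, buffer_slots, use_credits):
    """Return (delivered, dropped, ticks) for one sender feeding one receive buffer.

    Each tick the sender may transmit up to send_rate packets, and the receiver
    then drains up to drain_rate packets from its buffer of buffer_slots entries.
    """
    if min(send_rate, drain_rate, buffer_slots) < 1:
        raise ValueError("rates and buffer size must be at least 1")
    pending = packets          # packets still waiting at the sender
    queued = 0                 # packets held in the receiver's buffer
    credits = buffer_slots     # free slots the receiver has advertised to the sender
    delivered = dropped = ticks = 0
    while pending or queued:
        ticks += 1
        burst = min(send_rate, pending)
        if use_credits:
            # Credit-based: never send more than the receiver has room for.
            burst = min(burst, credits)
            credits -= burst
        pending -= burst
        accepted = min(burst, buffer_slots - queued)
        dropped += burst - accepted    # stays 0 when credits are honoured
        queued += accepted
        # Receiver drains its buffer and returns one credit per freed slot.
        drained = min(drain_rate, queued)
        queued -= drained
        delivered += drained
        if use_credits:
            credits += drained
    return delivered, dropped, ticks


for use_credits in (False, True):
    result = simulate(100, send_rate=4, drain_rate=2, buffer_slots=8, use_credits=use_credits)
    label = "credit-based" if use_credits else "drop-when-full"
    print(label, "delivered=%d dropped=%d ticks=%d" % result)
```

With these inputs the drop-when-full queue finishes in 28 ticks but loses 44 of 100 packets, all of which would have to be retransmitted, while the credit-based link delivers all 100 in 50 ticks with no loss: the sender is paced to the receiver instead of overrunning it.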
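The centralized model in the networking point above can be sketched in a few lines. A subnet manager that knows the whole topology assigns each end node a LID, then computes, for every switch, which neighbor leads toward each LID along a minimum-hop path; those tables are what it would push to the switches. The Python below is a simplified illustration under assumed inputs: the tiny leaf/spine topology and node names are hypothetical, only end nodes get LIDs here (a real subnet manager also assigns LIDs to switches), a neighbor name stands in for an output port number, and real subnet managers such as OpenSM also handle multipath balancing, failures, and deadlock avoidance.

```python
from collections import deque


def assign_lids(hosts):
    """Give each end node a Local Identifier; LID 0 is reserved, so numbering starts at 1."""
    return {host: lid for lid, host in enumerate(sorted(hosts), start=1)}


def build_forwarding_tables(links, switches, lids):
    """For every switch, map destination LID -> neighbour on a minimum-hop path.

    links maps each node to the list of nodes it is cabled to. A neighbour name
    stands in for the output port number a real forwarding table would hold.
    """
    switch_set = set(switches)
    tables = {sw: {} for sw in switches}
    for host, lid in lids.items():
        # Breadth-first search outward from the destination. The node from which a
        # switch is first reached is one hop closer to the destination.
        toward_host = {host: None}
        queue = deque([host])
        while queue:
            node = queue.popleft()
            if node != host and node not in switch_set:
                continue  # other end nodes never forward traffic
            for neighbour in links[node]:
                if neighbour not in toward_host:
                    toward_host[neighbour] = node
                    queue.append(neighbour)
        for sw in switches:
            if sw in toward_host:
                tables[sw][lid] = toward_host[sw]
    return tables


# Hypothetical fabric: two leaf switches joined by one spine, three hosts.
links = {
    "h1": ["leaf1"], "h2": ["leaf1"], "h3": ["leaf2"],
    "leaf1": ["h1", "h2", "spine1"],
    "leaf2": ["h3", "spine1"],
    "spine1": ["leaf1", "leaf2"],
}
switches = ["leaf1", "leaf2", "spine1"]
lids = assign_lids(["h1", "h2", "h3"])
tables = build_forwarding_tables(links, switches, lids)
print(tables["leaf1"])  # {1: 'h1', 2: 'h2', 3: 'spine1'}
```

Because every path is computed once from a global view, no switch ever has to flood unknown destinations or learn addresses from traffic, which is why loops and broadcast storms do not arise in this model.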

Network Card Recommendations

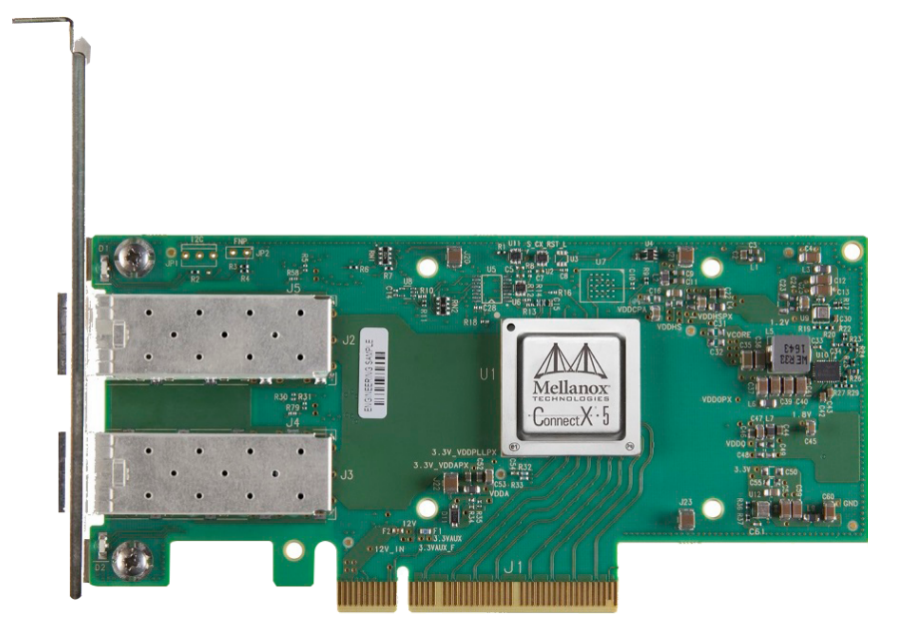

ConnectX-5 Ethernet Card (MCX512A-ACAT)

The ConnectX-5 Ethernet network interface card offers up to two 10/25GbE ports, 750 ns latency, up to 200 million packets per second (Mpps), and support for the Data Plane Development Kit (DPDK). For storage workloads, ConnectX-5 offers a range of innovative accelerations, such as T10-DIF signature handover in hardware, an embedded PCIe switch, and NVMe over Fabrics (NVMe-oF) target offload. ConnectX-5 adapter cards also bring advanced Open vSwitch offload to telecom and cloud data centers, driving very high packet rates and throughput while reducing CPU resource consumption, which increases the efficiency of data center infrastructure.

ConnectX-6 Ethernet Card (MCX623106AN-CDAT)

The ConnectX-6 Dx SmartNIC supports transfer rates of 1/10/25/40/50/100GbE, a flexible programmable pipeline for new network flows, multi-homing with advanced QoS, IPsec and TLS inline encryption acceleration, and block-level encryption acceleration for data at rest. It is positioned as a highly secure, advanced cloud network interface card for accelerating mission-critical data center applications such as security, virtualization, SDN/NFV, big data, machine learning, and storage.

ConnectX-6 VPI IB Network Card (MCX653105A-ECAT-SP)

The ConnectX-6 VPI card provides a single port, sub-600 ns latency, and 215 million messages per second for HDR100 and EDR InfiniBand and 100GbE Ethernet connectivity. It supports PCIe 3.0 and PCIe 4.0 and offers advanced storage features, including block-level encryption and checksum offload, making it a high-performance, flexible solution for the growing needs of data center applications.

ConnectX-5 VPI IB Network Card (MCX556A-ECAT)

ConnectX-5 InfiniBand adapter cards are a high-performance, flexible solution with dual-port 100Gb/s InfiniBand and Ethernet connectivity, low latency, and high message rates, plus an embedded PCIe switch and NVMe over Fabrics offload. These intelligent RDMA (Remote Direct Memory Access) enabled adapters provide advanced application offload capabilities for High-Performance Computing (HPC), cloud hyperscale, and storage platforms.

From the InfiniBand adapters and Ethernet cards mentioned above, it is easy to see that they both have their own characteristics and corresponding application areas. Which type of card to deploy depends not only on the protocols supported by the card but also on your network environment and budget.

Related Products:

- Intel® I210 F1 Single Port Gigabit SFP PCI Express x1 Ethernet Network Interface Card PCIe v2.1 ($60.00)

- Intel® 82599EN SR1 Single Port 10 Gigabit SFP+ PCI Express x8 Ethernet Network Interface Card PCIe v2.0 ($115.00)

- Intel® 82599ES SR2 Dual Port 10 Gigabit SFP+ PCI Express x8 Ethernet Network Interface Card PCIe v2.0 ($159.00)

- Intel® X710-BM2 DA2 Dual Port 10 Gigabit SFP+ PCI Express x8 Ethernet Network Interface Card PCIe v3.0 ($179.00)

- Intel® XL710-BM1 DA4 Quad Port 10 Gigabit SFP+ PCI Express x8 Ethernet Network Interface Card PCIe v3.0 ($309.00)

- NVIDIA (Mellanox) MCX4121A-ACAT Compatible ConnectX-4 Lx EN Network Adapter, 25GbE Dual-Port SFP28, PCIe3.0 x 8, Tall&Short Bracket ($249.00)

- Intel® XXV710 DA2 Dual Port 25 Gigabit SFP28 PCI Express x8 Ethernet Network Interface Card PCIe v3.0 ($269.00)

- Intel® XL710-BM1 QDA1 Single Port 40 Gigabit QSFP+ PCI Express x8 Ethernet Network Interface Card PCIe v3.0 ($309.00)

- Intel® XL710 QDA2 Dual Port 40 Gigabit QSFP+ PCI Express x8 Ethernet Network Interface Card PCIe v3.0 ($359.00)

- Intel® Ethernet Controller E810-CAM2 100G Dual-Port QSFP28, Ethernet Network Adapter PCIe 4.0 x16 ($609.00)

- NVIDIA (Mellanox) MCX512A-ACAT SmartNIC ConnectX®-5 EN Network Interface Card, 10/25GbE Dual-Port SFP28, PCIe 3.0 x 8, Tall&Short Bracket ($318.00)

- NVIDIA (Mellanox) MCX631102AN-ADAT SmartNIC ConnectX®-6 Lx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket ($385.00)

- NVIDIA (Mellanox) MCX621102AN-ADAT SmartNIC ConnectX®-6 Dx Ethernet Network Interface Card, 1/10/25GbE Dual-Port SFP28, Gen 4.0 x8, Tall&Short Bracket ($315.00)

- NVIDIA MCX623106AN-CDAT SmartNIC ConnectX®-6 Dx EN Network Interface Card, 100GbE Dual-Port QSFP56, PCIe4.0 x 16, Tall&Short Bracket ($1200.00)

- NVIDIA (Mellanox) MCX515A-CCAT SmartNIC ConnectX®-5 EN Network Interface Card, 100GbE Single-Port QSFP28, PCIe3.0 x 16, Tall&Short Bracket ($715.00)

- NVIDIA (Mellanox) MCX516A-CCAT SmartNIC ConnectX®-5 EN Network Interface Card, 100GbE Dual-Port QSFP28, PCIe3.0 x 16, Tall&Short Bracket ($985.00)

- NVIDIA (Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket ($828.00)

- NVIDIA (Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket ($965.00)

- NVIDIA (Mellanox) MCX653106A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket ($1600.00)

- NVIDIA (Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket ($1400.00)