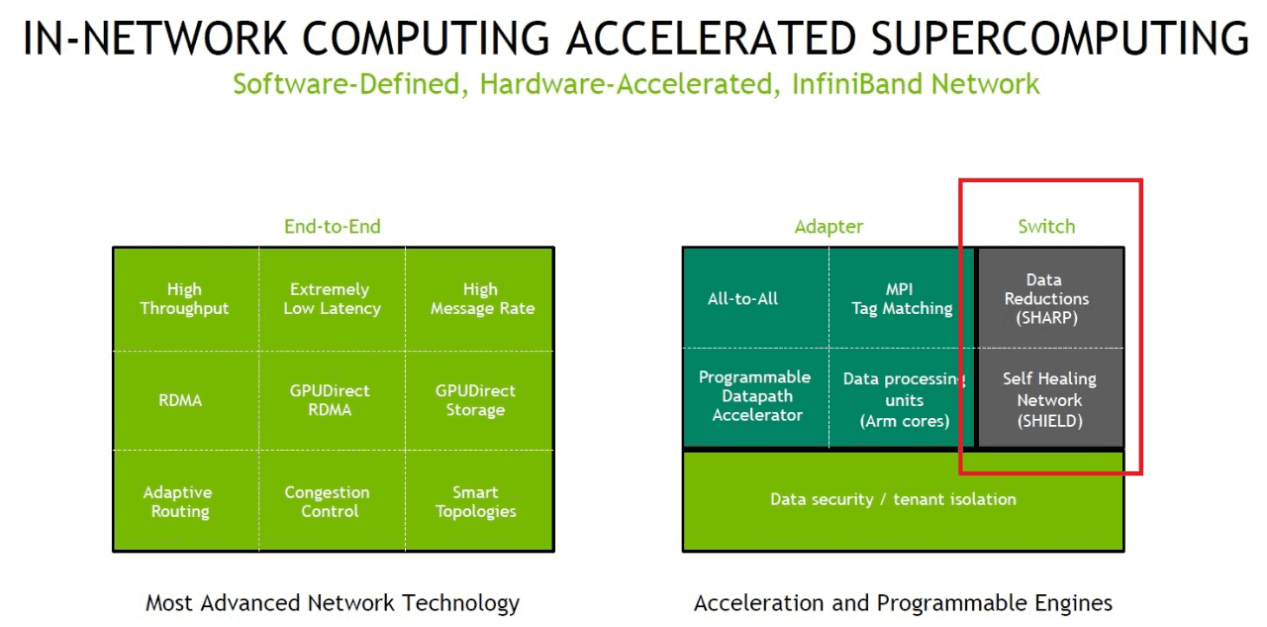

Artificial intelligence has developed rapidly in recent years, and AI technologies such as ChatGPT have begun to change how people work and live. As deep learning algorithms are refined and training datasets grow, training large language models demands ever more computing resources, including CPUs, GPUs, and DPUs, all of which must be connected over a network to the servers that run the training. Network bandwidth and latency therefore have a direct impact on training speed and efficiency. To address this, NVIDIA has launched the Quantum-2 InfiniBand platform, which offers the network performance and feature set that AI developers and researchers need to overcome this bottleneck.

Drawing on its understanding of high-speed networking trends and its experience implementing high-performance network projects, NVIDIA has introduced the NDR (Next Data Rate) network solution, built on the Quantum-2 InfiniBand platform. The NDR solution consists mainly of Quantum-2 InfiniBand 800G switches (2x400G NDR interfaces), ConnectX-7 InfiniBand host adapters, and LinkX InfiniBand optical connectors. It is aimed at providing low-latency, high-bandwidth networking for critical fields such as high-performance computing, large-scale cloud data centers, and artificial intelligence.

The use cases include:

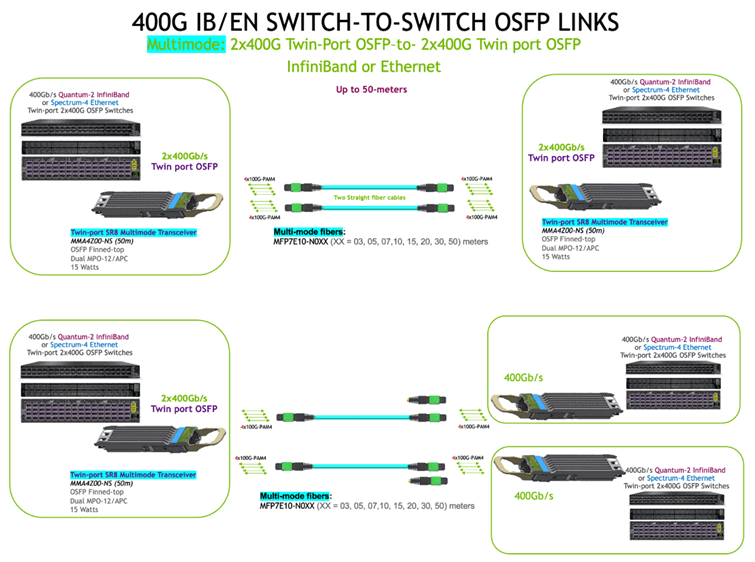

1. Connect two switches at 800Gb/s, or connect one switch to two other switches at 400Gb/s each.

To connect two OSFP-based switches, you can use two Twin port OSFP transceivers (MMA4Z00-NS) and two straight, multimode fiber cables (MFP7E10-Nxxx) up to a distance of 50 meters. This will allow you to achieve a speed of 800G (2x400G). Alternatively, you can route the two fiber cables to two different switches to create two separate 400Gb/s links. The additional Twin port OSFP ports can then be used to connect to more switches if needed.

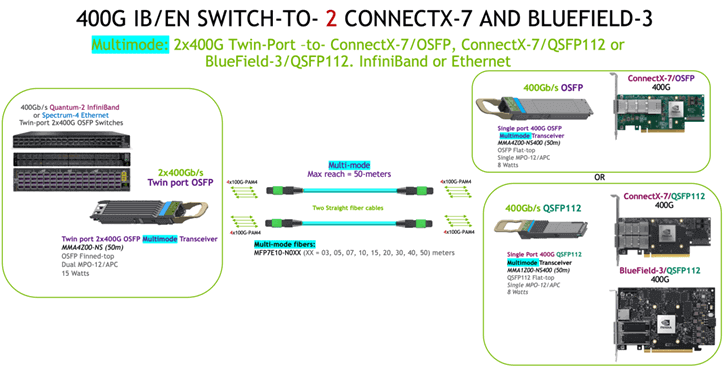

2. Connect to up to two ConnectX-7 and/or BlueField-3 devices at 400G each.

By using a twin port OSFP transceiver with two straight fiber cables, you can connect up to two adapter and/or DPU combinations using ConnectX-7 or BlueField-3. Each cable has four channels and can link to a 400G transceiver in either OSFP (MMA4Z00-NS400) or QSFP112 (MMA1Z00-NS400) form factor for distances up to 50 meters. Both single-port OSFP and QSFP112 form factors have the same electronics, optics, and optical connectors and consume 8 watts of power.

Please note that only ConnectX-7/OSFPs support the single port OSFP form factor, while the QSFP112 form factor is used in ConnectX-7/QSFP112s and/or BlueField-3/QSFP112 DPUs. You can use any combination of ConnectX-7 and BlueField-3 using OSFP or QSFP112 on the same twin port OSFP transceiver simultaneously.

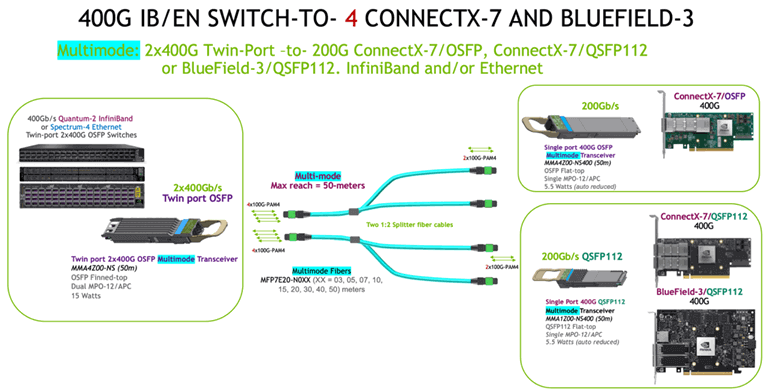

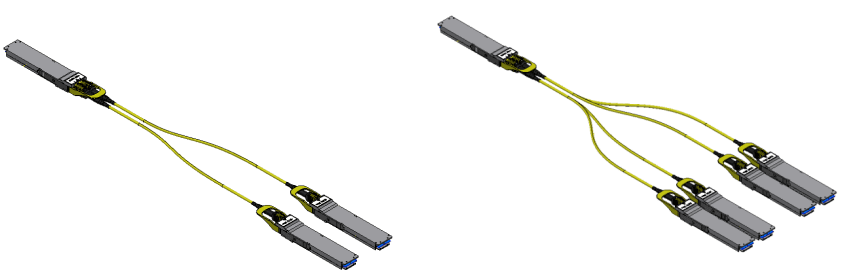

3. Connect to up to four ConnectX-7 and/or BlueField-3 devices at 200G each.

If you want to connect up to four adapter and/or DPU combinations using ConnectX-7 or BlueField-3, you can use a Twin port OSFP transceiver with two 1:2 fiber splitter cables. Each of the two 4-channel 1:2 fiber splitter cables (MFP7E20-N0xx) can link to a 400G transceiver at distances up to 50 meters, in either OSFP (MMA4Z00-NS400) or QSFP112 (MMA1Z00-NS400) form factor. The same electronics, optics, and optical connectors are used for both single-port OSFP and QSFP112 form factors. When you connect the two-channel fiber ends, only two lanes in the 400G transceiver activate, creating a 200G device. This also automatically reduces the power consumption of each 400G transceiver from 8 watts to 5.5 watts, while the Twin port OSFP power consumption remains at 15 watts.

Please note that only ConnectX-7/OSFPs are compatible with the single port OSFP form factor, while the QSFP112 form factor is used in ConnectX-7/QSFP112s and/or BlueField-3/QSFP112 DPUs. You can use any combination of ConnectX-7 types and BlueField-3 on the same Twin port OSFP transceiver.

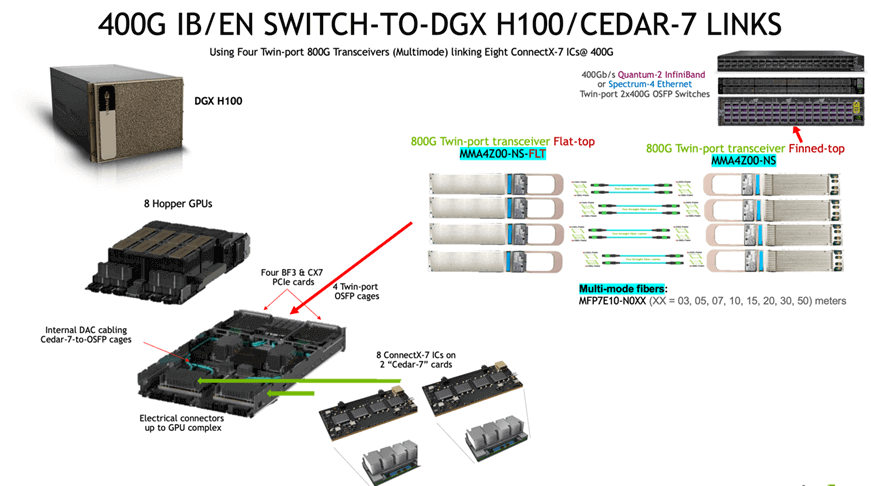

4. Link the switch to the Cedar-7 complex in the DGX H100 “Viking” CPU chassis.

The DGX-H100 system is equipped with eight Hopper H100 GPUs located in the top chassis, along with two CPUs, storage, and InfiniBand or Ethernet networking in the bottom server section. GPU-to-GPU communication is handled by Cedar-7 cards, which carry eight 400Gb/s ConnectX-7 ICs mounted on two mezzanine boards. These cards are connected internally to four 800G Twin-port OSFP cages that use internal riding heat sinks for cooling.

The switches that support 400G IB/EN require finned-top 2x400G transceivers due to reduced air flow inlets. The Cedar-7-to-Switch links can use either single-mode or multi-mode optics or active copper cables (ACC) for InfiniBand or Ethernet connectivity.

The Twin-port 2x400G transceiver provides two 400G ConnectX-7 links from the DGX to the Quantum-2 or Spectrum-4 switch, thereby reducing the complexity and number of transceivers required as compared to DGX A100. DGX-H100 also supports up to four ConnectX-7 and/or two BlueField-3 Data Processing Units (DPUs) in InfiniBand and/or Ethernet for traditional networking to storage, clusters, and management.

The PCIe card slots located on both sides of the OSFP GPU cages can accommodate separate cables and/or transceivers to facilitate additional networking using 400G or 200G with OSFP or QSFP112 devices.
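The lane and power figures quoted in use cases 2 and 3 can be tallied with a few lines of Python. This is only a sketch: the class and constant names are hypothetical, the 100G-per-lane figure is inferred from the text (four channels per 400G link), and the wattages are the ones stated above rather than datasheet values.

```python
from dataclasses import dataclass

LANE_GBPS = 100               # inferred: a 400G link uses four channels
TWIN_PORT_OSFP_WATTS = 15.0   # switch-side Twin port OSFP, per the text
FAR_END_WATTS = {4: 8.0, 2: 5.5}  # far-end 400G transceiver, keyed by active lanes


@dataclass(frozen=True)
class TwinPortConfig:
    """One Twin port OSFP transceiver and the devices hanging off it."""
    label: str
    far_ends: int            # adapters and/or DPUs attached
    lanes_per_far_end: int   # 4 with straight cables, 2 with 1:2 splitters

    def gbps_per_far_end(self) -> int:
        return self.lanes_per_far_end * LANE_GBPS

    def total_gbps(self) -> int:
        return self.far_ends * self.gbps_per_far_end()

    def total_watts(self) -> float:
        # Switch-side transceiver plus one far-end transceiver per device.
        return TWIN_PORT_OSFP_WATTS + self.far_ends * FAR_END_WATTS[self.lanes_per_far_end]


CONFIGS = [
    TwinPortConfig("2 x 400G (straight cables)", far_ends=2, lanes_per_far_end=4),
    TwinPortConfig("4 x 200G (1:2 splitter cables)", far_ends=4, lanes_per_far_end=2),
]

for cfg in CONFIGS:
    print(f"{cfg.label}: {cfg.gbps_per_far_end()}G per device, "
          f"{cfg.total_gbps()}G total, {cfg.total_watts():.1f} W of optics")
```

Both configurations fill the same 800G of switch-side bandwidth; the splitter case simply spreads it over twice as many devices, each running its transceiver at the lower power level.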

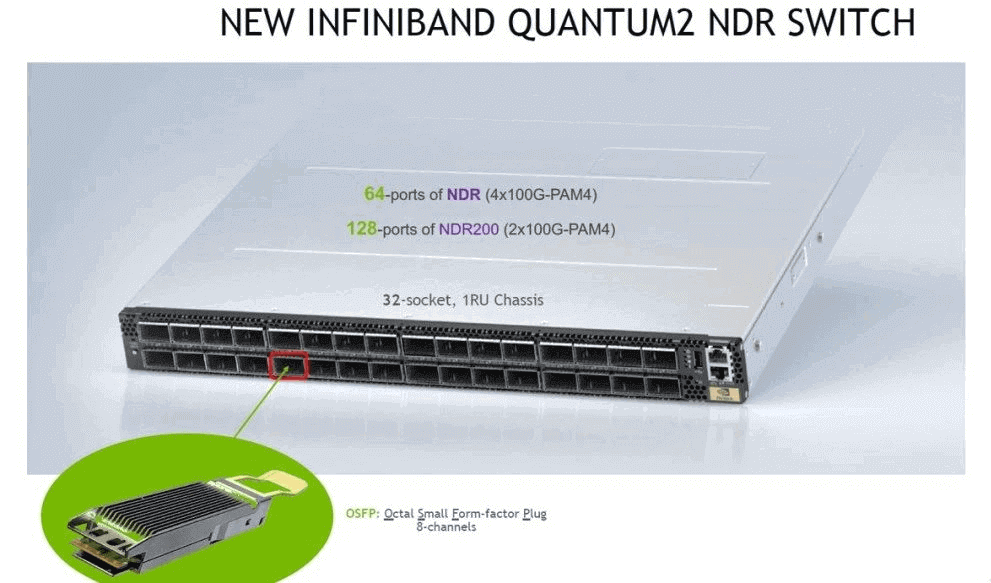

InfiniBand Quantum-2 Switch

The QM9700 and QM9790 switches in the NVIDIA Quantum-2 family are the mainstream IB (InfiniBand) switches for modern artificial intelligence and high-performance computing. Through technological innovation and reliability testing, NVIDIA Networking provides users with network acceleration for these workloads.

These two switches use a 1U standard chassis design, with a total of 32 800G physical interfaces, and support 64 NDR 400Gb/s InfiniBand ports (which can be split into up to 128 200Gb/s ports). They support third-generation NVIDIA SHARP technology, advanced congestion control, adaptive routing, and self-healing network technology. Compared to the previous generation HDR products, NDR provides twice the port speed, three times the switch port density, five times the switch system capacity, and 32 times the switch AI acceleration capability.

QM9700 and QM9790 switches are rack-mounted InfiniBand products, available in air-cooled and liquid-cooled versions and as managed or unmanaged switches. Each switch supports a bidirectional aggregate bandwidth of 51.2Tb/s and a throughput of more than 66.5 billion packets per second (BPPS). This is about five times the switching capacity of the previous generation Quantum-1.
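The port and bandwidth figures follow directly from the cage count, as the short sketch below shows. The function and constant names are illustrative, and the only inputs are numbers already given in the text (32 OSFP cages, two NDR ports per cage, 400Gb/s per NDR port).

```python
OSFP_CAGES = 32          # physical 800G interfaces per switch
NDR_PORTS_PER_CAGE = 2   # each cage carries 2 x 400G
NDR_GBPS = 400
NDR200_GBPS = 200


def switch_summary(cages: int = OSFP_CAGES) -> dict:
    """Derive port counts and aggregate bandwidth from the cage count."""
    ndr_ports = cages * NDR_PORTS_PER_CAGE
    one_way_gbps = ndr_ports * NDR_GBPS
    return {
        "ndr_ports": ndr_ports,
        # Splitting every NDR port in two gives the 200Gb/s port count.
        "ndr200_ports": one_way_gbps // NDR200_GBPS,
        "one_way_tbps": one_way_gbps / 1000,
        # Bidirectional bandwidth counts transmit and receive together.
        "bidirectional_tbps": 2 * one_way_gbps / 1000,
    }


summary = switch_summary()
for key, value in summary.items():
    print(f"{key}: {value}")
```

The 51.2Tb/s headline number is therefore the bidirectional total; in each direction the switch carries 25.6Tb/s.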

QM9700 and QM9790 switches are flexible and support a variety of network topologies, such as Fat Tree, DragonFly+, and multidimensional Torus. They are also backward compatible with previous generations of products and have extensive software support.
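To give a feel for what a 64-port switch means for a Fat Tree, the sketch below applies the textbook sizing formulas for a non-blocking (full-bisection) fat tree, in which every switch below the top level splits its ports evenly between downlinks and uplinks. This is a generic calculation and not NVIDIA's design guidance; real deployments may be oversubscribed or reserve ports for storage and management, and the function name is hypothetical.

```python
def fat_tree_capacity(radix: int, levels: int) -> dict:
    """Maximum size of a non-blocking fat tree built from identical switches.

    radix  -- ports per switch (64 NDR ports for the switches described here)
    levels -- number of switch tiers (2 = leaf/spine, 3 = leaf/spine/core)
    """
    if radix < 2 or radix % 2 != 0:
        raise ValueError("radix must be an even number of ports")
    if levels < 1:
        raise ValueError("levels must be at least 1")
    half = radix // 2
    return {
        # Top-tier switches use all ports downward; lower tiers use half.
        "max_hosts": 2 * half ** levels,
        "switches": (2 * levels - 1) * half ** (levels - 1),
    }


for tiers in (2, 3):
    result = fat_tree_capacity(radix=64, levels=tiers)
    print(f"{tiers}-level fat tree: up to {result['max_hosts']} hosts "
          f"using {result['switches']} switches")
```

Under these assumptions, a two-level tree of 64-port switches tops out at 2,048 endpoints at 400Gb/s, and a third level raises the ceiling to 65,536.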

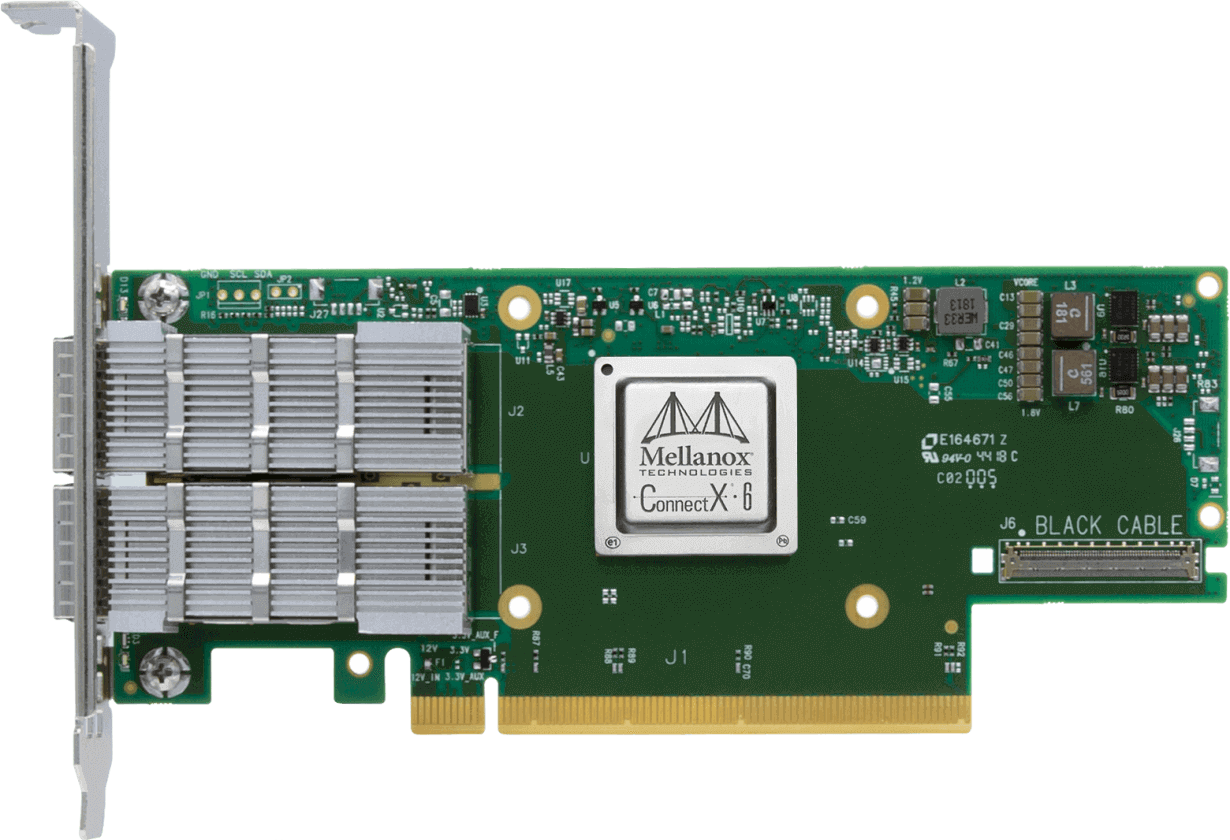

Quantum-2 ConnectX-7 Smart NIC

NVIDIA provides single-port or dual-port NDR or NDR200 ConnectX-7 smart network adapters as part of the Quantum-2 solution. Using NVIDIA Mellanox Socket Direct technology, the adapter can use 32 lanes of PCIe Gen4. Built on a 7-nanometer process, ConnectX-7 contains 8 billion transistors and doubles the data rate of its predecessor, NVIDIA ConnectX-6, the leading high-performance computing network chip. It also doubles the performance of RDMA, GPUDirect Storage, GPUDirect RDMA, and in-network computing.

The NDR HCA includes multiple programmable compute cores that can offload data preprocessing algorithms and application control paths from the CPU or GPU to the network, providing higher performance, better scalability, and more overlap between computing and communication tasks. This smart adapter meets the most demanding requirements of traditional enterprise and global workloads in artificial intelligence, scientific computing, and large-scale cloud data centers.

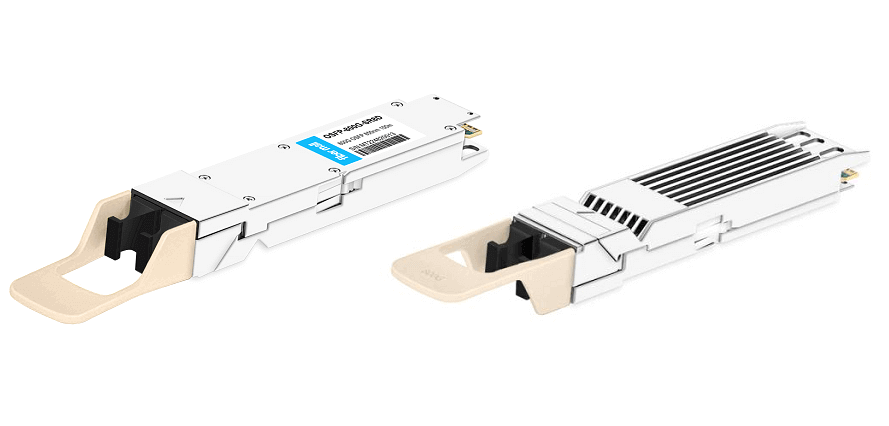

LinkX InfiniBand Optical Connector

FiberMall offers flexible 400Gb/s InfiniBand optical connectivity solutions, including single-mode and multi-mode transceivers, MPO fiber jumpers, active copper cables (ACC), and passive copper cables (DAC), to meet the needs of various network topologies.

The solution includes dual-port transceivers with OSFP connectors featuring fins designed for air-cooled fixed-configuration switches, while those with flat OSFP connectors are suitable for liquid-cooled modular switches and HCA.

For switch interconnection, a new OSFP-packaged 2xNDR (800Gbps) optical module can be used for interconnecting two QM97XX switches. The fin design significantly improves the heat dissipation of the optical modules.

For interconnection between switches and HCAs, the switch end uses an OSFP-packaged 2xNDR (800Gbps) optical module with fins, while the NIC end uses a flat OSFP 400Gbps optical module. MPO fiber jumpers cover distances of 3 to 150 meters, and one-to-two splitter fibers cover 3 to 50 meters.

The switch and HCA can also be connected with DAC (up to 1.5 meters) or ACC (up to 3 meters). A one-to-two breakout cable connects one OSFP switch port (which carries two 400Gb/s InfiniBand ports) to two independent 400Gb/s HCAs. A one-to-four breakout cable connects one OSFP switch port to four 200Gb/s HCAs.
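The distance limits quoted in this section can be folded into a small selection helper. The sketch below is a simplification that treats those figures as hard cut-offs and always prefers copper when the run is short enough; the function name and return strings are hypothetical, and actual reach depends on the specific part numbers and fiber type.

```python
def choose_media(distance_m: float, splitter: bool = False) -> str:
    """Pick a switch-to-HCA medium from the reach figures given in the text.

    distance_m -- cable run in meters
    splitter   -- True when the optical run would use a 1:2 splitter fiber
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= 1.5:
        return "DAC (passive copper)"
    if distance_m <= 3:
        return "ACC (active copper)"
    # Beyond copper reach, fall back to transceivers plus fiber.
    fiber_limit_m = 50 if splitter else 150
    if distance_m <= fiber_limit_m:
        kind = "1:2 splitter fiber" if splitter else "MPO fiber jumper"
        return f"optical transceivers + {kind}"
    raise ValueError(f"{distance_m} m exceeds the {fiber_limit_m} m reach quoted in the text")


for run in (1, 2.5, 30, 120):
    print(f"{run} m -> {choose_media(run)}")
```

A run inside a single rack typically lands on DAC or ACC, while row-to-row links need transceivers and fiber.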

Advantages

The NVIDIA Quantum-2 InfiniBand platform is a high-performance networking solution that delivers 400Gb/s per port. With NVIDIA port-splitting technology, it offers twice the port speed, three times the switch port density, and five times the switch system capacity of the previous generation. With the Dragonfly+ topology, a Quantum-2 network can provide 400Gb/s connectivity for over a million nodes within three hops, while reducing power consumption, latency, and space requirements.

In terms of performance, NVIDIA has introduced the third-generation SHARP technology (SHARPv3), which creates near-infinite scalability for large data aggregation through a scalable network supporting up to 64 parallel streams. AI acceleration capabilities have increased by 32 times compared to the previous HDR product.

In terms of cost, NDR devices reduce network complexity and improve efficiency. A later rate upgrade requires replacing only cables and network adapters. An NDR network also needs fewer devices than an HDR network of the same scale, which makes it more cost-effective for both the overall budget and future investment.

Related Products:

- OSFP-400G-SR4-FLT 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module: $550.00
- QSFP112-400G-LR4 400G QSFP112 LR4 PAM4 CWDM 10km Duplex LC SMF FEC Optical Transceiver Module: $1500.00
- QSFP112-400G-FR1 4x100G QSFP112 FR1 PAM4 1310nm 2km MTP/MPO-12 SMF FEC Optical Transceiver Module: $1200.00
- QSFP112-400G-DR4 400G QSFP112 DR4 PAM4 1310nm 500m MTP/MPO-12 with KP4 FEC Optical Transceiver Module: $850.00
- QSFP-DD-400G-SR8 400G QSFP-DD SR8 PAM4 850nm 100m MTP/MPO OM3 FEC Optical Transceiver Module: $149.00
- QSFP-DD-400G-DR4 400G QSFP-DD DR4 PAM4 1310nm 500m MTP/MPO SMF FEC Optical Transceiver Module: $400.00
- QSFP-DD-400G-SR4.2 400Gb/s QSFP-DD SR4 BiDi PAM4 850nm/910nm 100m/150m OM4/OM5 MMF MPO-12 FEC Optical Transceiver Module: $900.00
- QSFP-DD-400G-LR4 400G QSFP-DD LR4 PAM4 CWDM4 10km LC SMF FEC Optical Transceiver Module: $600.00
- QSFP-DD-400G-FR4 400G QSFP-DD FR4 PAM4 CWDM4 2km LC SMF FEC Optical Transceiver Module: $500.00
- QSFP-DD-800G-DR8D QSFP-DD 8x100G DR PAM4 1310nm 500m DOM Dual MPO-12 SMF Optical Transceiver Module: $1250.00
- OSFP-800G-FR8L OSFP 800G FR8 PAM4 CWDM8 Duplex LC 2km SMF Optical Transceiver Module: $3000.00
- OSFP-800G-LR8 OSFP 8x100G LR PAM4 1310nm MPO-16 10km SMF Optical Transceiver Module: $1800.00
- OSFP-800G-FR8 OSFP 8x100G FR PAM4 1310nm MPO-16 2km SMF Optical Transceiver Module: $1200.00
- OSFP-800G-SR8 OSFP 8x100G SR8 PAM4 850nm MTP/MPO-16 100m OM4 MMF FEC Optical Transceiver Module: $650.00
- OSFP-400G-LR4 400G LR4 OSFP PAM4 CWDM4 LC 10km SMF Optical Transceiver Module: $1199.00
- OSFP-400G-DR4+ 400G OSFP DR4+ 1310nm MPO-12 2km SMF Optical Transceiver Module: $850.00
- OSFP-2x200G-FR4 2x 200G OSFP FR4 PAM4 2x CWDM4 CS 2km SMF FEC Optical Transceiver Module: $1500.00
- OSFP-400G-SR8 400G SR8 OSFP PAM4 850nm MTP/MPO-16 100m OM3 MMF FEC Optical Transceiver Module: $400.00