- Brian

Harry Collins

Answered on 8:05 am

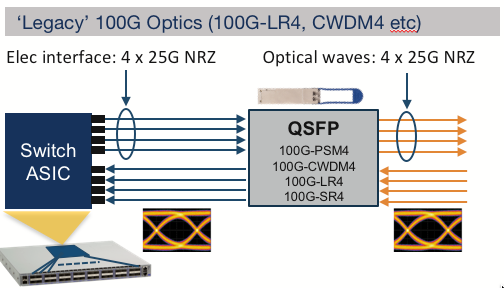

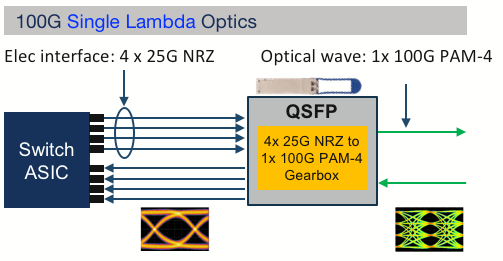

100G Lambda optics are optical transceivers that transmit 100 gigabits per second (Gbps) of data over a single wavelength, or lambda. They use a modulation technique called PAM4 (4-level Pulse Amplitude Modulation), which uses four amplitude levels to encode two bits of information per symbol. At the same symbol rate, this carries twice the data of traditional NRZ (Non-Return-to-Zero) modulation, which encodes one bit per symbol.
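As a rough illustration of the "two bits per symbol" idea, the sketch below maps bit pairs to four amplitude levels and compares the symbol count with NRZ. The level values (-3, -1, +1, +3), the Gray-coded mapping, and the function names are illustrative assumptions, and the symbol-rate figure is nominal: it ignores FEC and line-coding overhead, so real modules run somewhat faster.

```python
# Illustrative Gray-coded PAM4 mapping: adjacent levels differ by one bit.
# The level values are normalized placeholders, not real optical power levels.
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def pam4_encode(bits):
    """Map an even-length bit sequence to PAM4 symbols, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def nrz_encode(bits):
    """Map each bit to one of two levels, one bit per symbol."""
    return [1 if b else -1 for b in bits]


def symbol_rate_gbd(bit_rate_gbps, bits_per_symbol):
    """Nominal symbol rate in GBd, ignoring FEC and encoding overhead."""
    return bit_rate_gbps / bits_per_symbol


bits = [0, 0, 0, 1, 1, 1, 1, 0]
pam4_symbols = pam4_encode(bits)  # 4 symbols for 8 bits
nrz_symbols = nrz_encode(bits)    # 8 symbols for 8 bits
```

Under these assumptions, a nominal 100 Gbps stream needs about 50 GBd with PAM4, where NRZ on a single wavelength would need about 100 GBd.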

100G Lambda optics are compatible with 200G and 400G Ethernet standards that use multiple lanes of 100G signals to achieve higher bandwidth. For example, a 400G-DR4 port can break out to four 100G-DR modules. Both ends of a link must use the same modulation and wavelength, so single-lambda modules do not interoperate with four-wavelength NRZ optics such as 100G-LR4. The single-lambda family includes 100G-DR, 100G-FR, and 100G-LR, which support different distances and applications depending on the model.

- QSFP-100G-DR: 100GBASE-DR single lambda QSFP, up to 500m over duplex SMF.

- QSFP-100G-FR: 100GBASE-FR single lambda QSFP, up to 2km over duplex SMF.

- QSFP-100G-LR: 100GBASE-LR single lambda QSFP, up to 10km over duplex SMF.
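The reach figures above can be turned into a simple selection rule. The following is a minimal sketch that picks the shortest-reach module covering a given link length; the `pick_module` helper is hypothetical, and it considers only the nominal distances listed here, not loss budgets, connectors, or vendor-specific limits.

```python
# Nominal reach in meters over duplex SMF, taken from the list above.
MODULES = [
    ("QSFP-100G-DR", 500),
    ("QSFP-100G-FR", 2_000),
    ("QSFP-100G-LR", 10_000),
]


def pick_module(link_length_m):
    """Return the shortest-reach module that covers the link, or None."""
    for name, reach_m in MODULES:  # ordered from shortest to longest reach
        if link_length_m <= reach_m:
            return name
    return None  # beyond 10 km: none of the listed modules applies


choice = pick_module(1_500)
```

A 1.5 km link falls past the DR limit but within the FR limit, so the helper returns the FR module rather than the longer-reach LR.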

Some of the benefits of 100G Lambda Optics are:

- They reduce the complexity and cost of the optical modules and the fiber infrastructure, as they use fewer components and wavelengths than other 100G optics.

- They enable future-proofing and scalability of the network, as they can be reused or upgraded to new form factors or higher data rates without sacrificing performance or compatibility.

- They provide operational flexibility and efficiency for data center operators, as they can connect to different types of devices and networks using the same type of optic.
