- Catherine

- September 7, 2023

- 8:10 am

John Doe

Answered on 8:10 am

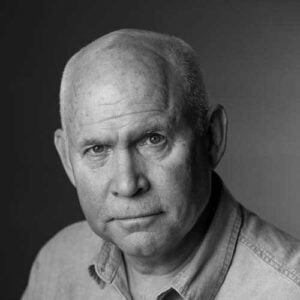

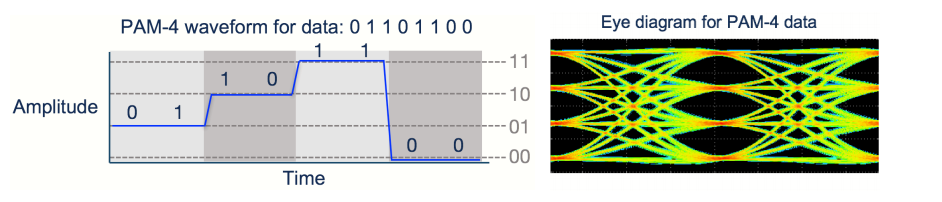

PAM-4 and NRZ are two different modulation techniques used to transmit data over an electrical or optical channel. Modulation is the process of changing a characteristic of a signal (such as its amplitude, phase, or frequency) to encode information. PAM-4 and NRZ each have advantages and disadvantages, and which one fits better depends on the channel characteristics and the target data rate.

PAM-4 stands for 4-level Pulse Amplitude Modulation. The signal can take four different amplitude (voltage) levels, each representing two bits of information. For example, a PAM-4 signal can use 0 V, 1 V, 2 V, and 3 V to encode 00, 01, 11, and 10, respectively. PAM-4 therefore carries twice as much data as NRZ at the same symbol rate (or baud rate), which is the number of symbols transmitted per second. However, PAM-4 also has drawbacks: higher power consumption, a lower signal-to-noise ratio (SNR), and a higher raw bit error rate (BER). It requires more sophisticated signal processing and forward error correction (FEC) to overcome these challenges. PAM-4 is used for high-speed data transmission such as 400G Ethernet.
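The level mapping in the example above is Gray-coded: neighbouring levels differ in only one bit, so a receiver that mistakes one level for an adjacent one corrupts just one of the two bits. The sketch below illustrates this mapping in Python. It is a minimal, idealized model assuming noise-free levels in volts and simple nearest-level slicing; the names `pam4_encode` and `pam4_decode` are hypothetical, and real transceivers add equalization and FEC on top of anything this simple.

```python
# Gray-coded PAM-4 mapping from the example: adjacent levels differ in one bit.
# Levels are idealized volts (0 V, 1 V, 2 V, 3 V); an assumption for illustration.
BITS_TO_LEVEL = {(0, 0): 0.0, (0, 1): 1.0, (1, 1): 2.0, (1, 0): 3.0}
LEVEL_TO_BITS = {level: bits for bits, level in BITS_TO_LEVEL.items()}


def pam4_encode(bits):
    """Map an even-length bit sequence to PAM-4 levels, two bits per symbol."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM-4 needs an even number of bits")
    return [BITS_TO_LEVEL[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(levels):
    """Slice each received level to the nearest ideal level and recover its bits."""
    bits = []
    for v in levels:
        nearest = min(LEVEL_TO_BITS, key=lambda ideal: abs(ideal - v))
        bits.extend(LEVEL_TO_BITS[nearest])
    return bits


data = [0, 0, 0, 1, 1, 1, 1, 0]
print(pam4_encode(data))  # [0.0, 1.0, 2.0, 3.0]
# Mildly noisy levels still decode correctly while they stay within half a step.
print(pam4_decode([0.2, 1.1, 1.8, 2.7]) == data)  # True
```

Eight bits become four symbols, which is the "twice as much data per symbol" property. Note that the decision threshold sits only half a level step away from each ideal level, which is why noise hurts PAM-4 more than NRZ.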

NRZ stands for Non-Return-to-Zero. The signal has two amplitude (voltage) levels, each representing one bit of information. For example, an NRZ signal can use -1 V and +1 V to encode 0 and 1, respectively. The signal does not return to zero volts between symbols, hence the name. Compared with PAM-4, NRZ offers lower power consumption, higher SNR, and lower BER, and it is simpler and more robust, but it carries only half as much data at the same symbol rate. NRZ is widely used at lower per-lane rates, for example in 100G Ethernet interfaces built from four 25 Gb/s NRZ lanes.
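The SNR difference between the two formats can be made concrete with a small calculation. Assuming both formats use the same peak-to-peak swing (here the -1 V to +1 V of the NRZ example, a simplifying assumption), NRZ has a single eye spanning the full swing, while PAM-4 must stack three eyes into it, so each PAM-4 eye is one third as tall. That amplitude ratio corresponds to roughly a 9.5 dB SNR penalty for PAM-4 before any equalization or FEC. The helpers `nrz_encode` and `eye_height` are hypothetical names used only for this sketch.

```python
import math


# NRZ mapping from the example: bit 0 -> -1 V, bit 1 -> +1 V (idealized levels).
def nrz_encode(bits):
    """Map each bit to one NRZ symbol: one bit per symbol."""
    return [1.0 if b else -1.0 for b in bits]


def eye_height(swing_v, levels):
    """Vertical opening of each eye when `levels` are evenly spaced across `swing_v`."""
    return swing_v / (levels - 1)


swing = 2.0  # peak-to-peak swing in volts (-1 V to +1 V), assumed equal for both formats
nrz_eye = eye_height(swing, 2)   # 2.0 V: one eye spans the full swing
pam4_eye = eye_height(swing, 4)  # about 0.667 V: three stacked eyes share the swing
penalty_db = 20 * math.log10(nrz_eye / pam4_eye)

print(nrz_encode([0, 1, 1, 0]))  # [-1.0, 1.0, 1.0, -1.0]
print(round(penalty_db, 2))      # 9.54
```

The 20·log10(3) figure is an idealized amplitude-only comparison; real links also differ in noise bandwidth, jitter, and nonlinearity.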

When a signal is referred to as “25Gb/s NRZ” or “25G NRZ”, it carries data at 25 Gbit/s using NRZ modulation. Likewise, “50G PAM-4” and “100G PAM-4” mean the signal carries data at 50 Gbit/s or 100 Gbit/s, respectively, using PAM-4 modulation.
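These names also tell you the symbol rate, since symbol rate equals bit rate divided by bits per symbol (1 for NRZ, 2 for PAM-4). The sketch below uses the nominal rates from the names and deliberately ignores line-coding and FEC overhead, so real lanes signal slightly faster (a 25G NRZ Ethernet lane, for instance, actually runs at 25.78125 GBd). The function name `symbol_rate_gbd` is hypothetical.

```python
# Bits carried per symbol: NRZ has 2 levels (1 bit), PAM-4 has 4 levels (2 bits).
BITS_PER_SYMBOL = {"NRZ": 1, "PAM-4": 2}


def symbol_rate_gbd(bit_rate_gbps, modulation):
    """Nominal symbol rate in GBd, ignoring line-coding and FEC overhead."""
    return bit_rate_gbps / BITS_PER_SYMBOL[modulation]


for rate, mod in [(25, "NRZ"), (50, "PAM-4"), (100, "PAM-4")]:
    print(f"{rate}G {mod}: {symbol_rate_gbd(rate, mod):g} GBd")
# 25G NRZ: 25 GBd
# 50G PAM-4: 25 GBd
# 100G PAM-4: 50 GBd
```

So 50G PAM-4 doubles the data rate of 25G NRZ at about the same symbol rate, which is exactly the trade-off described above: more bits per symbol in exchange for smaller eyes.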

People Also Ask

AI Compute Clusters: Powering the Future

In recent years, the global rise of artificial intelligence (AI) has captured widespread attention across society. A common point of discussion surrounding AI is the concept of compute clusters—one of the three foundational pillars of AI, alongside algorithms and data. These compute clusters serve as the primary source of computational

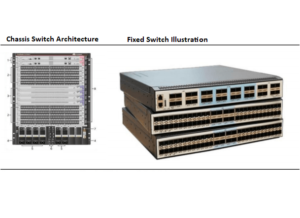

Data Center Switches: Current Landscape and Future Trends

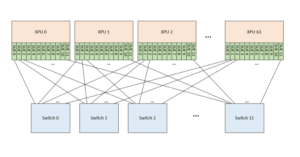

As artificial intelligence (AI) drives exponential growth in data volumes and model complexity, distributed computing leverages interconnected nodes to accelerate training processes. Data center switches play a pivotal role in ensuring timely message delivery across nodes, particularly in large-scale data centers where tail latency is critical for handling competitive workloads.

Comprehensive Guide to 100G BIDI QSFP28 Simplex LC SMF Transceivers

The demand for high-speed, cost-effective, and fiber-efficient optical transceivers has surged with the growth of data centers, telecommunications, and 5G networks. The 100G BIDI QSFP28 (Bidirectional Quad Small Form-Factor Pluggable 28) transceiver is a standout solution, enabling 100 Gigabit Ethernet (100GbE) over a single-mode fiber (SMF) with a simplex LC

NVIDIA SN5600: The Ultimate Ethernet Switch for AI and Cloud Data Centers

The NVIDIA SN5600 is a cutting-edge, high-performance Ethernet switch designed to meet the demanding needs of modern data centers, particularly those focused on artificial intelligence (AI), high-performance computing (HPC), and cloud-scale infrastructure. As part of NVIDIA’s Spectrum-4 series, the SN5600 delivers unparalleled throughput, low latency, and advanced networking features, making

How Ethernet Outpaces InfiniBand in AI Networking

Ethernet Challenges InfiniBand’s Dominance InfiniBand dominated high-performance networking in the early days of generative AI due to its superior speed and low latency. However, Ethernet has made significant strides, leveraging cost efficiency, scalability, and continuous technological advancements to close the gap with InfiniBand networking. Industry giants like Amazon, Google, Oracle,

Understanding NVIDIA’s Product Ecosystem and Naming Conventions

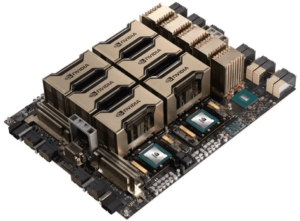

Compute Chips—V100, A100, H100, B200, etc. These terms are among the most commonly encountered in discussions about artificial intelligence. They refer to AI compute cards, specifically GPU models. NVIDIA releases a new GPU architecture every few years, each named after a renowned scientist. Cards based on a particular architecture typically
