- Mia

- September 10, 2023

- 8:14 am

Harry Collins

Answered on 8:14 am

In 100G mode with QPSK modulation, the FEC type can be oFEC (OpenROADM) or SDFEC (Soft Decision FEC) with probabilistic constellation shaping.

In 200G mode with QPSK modulation, the FEC type can be SDFEC with probabilistic constellation shaping.

In 400G mode with 16-QAM modulation, the FEC type can be SDFEC with probabilistic constellation shaping.
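The rate, modulation, and FEC pairings listed above can be captured in a small lookup table, which is handy when checking whether two ends of a link are configured compatibly. This is only a sketch: the names `COHERENT_MODES`, `fec_options`, and `supports` are hypothetical and do not correspond to any vendor API, and the values are simply the ones stated in this answer.

```python
# Illustrative lookup only. Names are hypothetical; the values are the
# pairings listed in the answer above, not taken from a vendor datasheet.
COHERENT_MODES = {
    "100G": {"modulation": "QPSK", "fec": ("oFEC", "SDFEC")},
    "200G": {"modulation": "QPSK", "fec": ("SDFEC",)},
    "400G": {"modulation": "16-QAM", "fec": ("SDFEC",)},
}


def fec_options(rate: str) -> tuple:
    """Return the FEC types listed for a given line rate."""
    try:
        return COHERENT_MODES[rate]["fec"]
    except KeyError:
        raise ValueError(f"unknown line rate: {rate!r}") from None


def supports(rate: str, fec: str) -> bool:
    """True if the given FEC type is listed for the given line rate."""
    return fec in fec_options(rate)


if __name__ == "__main__":
    for rate, mode in COHERENT_MODES.items():
        print(rate, mode["modulation"], ", ".join(mode["fec"]))
```

For example, `supports("100G", "oFEC")` is true, while `supports("400G", "oFEC")` is false under the table above.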

The 400G-BIDI module supports several operating modes, and where FEC (Forward Error Correction) is performed depends on the mode, the switch, and the application. FEC adds redundant bits to the data so that the receiver can detect and correct errors, which improves the effective signal quality.

One option is the 400G-SR4.2 or 4x 100G-SR1.2 mode, where the switch performs the FEC and the module does not. The FEC used here is KP-FEC, which is a type of Reed-Solomon FEC.
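To make the "extra bits" concrete, the arithmetic below assumes that KP-FEC here means the RS(544,514) Reed-Solomon code with 10-bit symbols, as defined for Ethernet in IEEE 802.3. The answer itself does not state the code parameters, so treat them as an assumption. The helper name `rs_parameters` is hypothetical.

```python
def rs_parameters(n: int, k: int, symbol_bits: int = 10) -> dict:
    """Basic figures for a Reed-Solomon RS(n, k) code.

    n: total symbols per codeword, k: data symbols per codeword.
    A Reed-Solomon code can correct up to (n - k) // 2 symbol errors.
    """
    parity = n - k
    return {
        "parity_symbols": parity,
        "correctable_symbols": parity // 2,
        "overhead_pct": round(100 * parity / k, 2),
        "codeword_bits": n * symbol_bits,
    }


# Assumption: KP-FEC is RS(544,514) over 10-bit symbols.
kp4 = rs_parameters(544, 514)

if __name__ == "__main__":
    print(kp4)
```

Under that assumption, each codeword carries 30 parity symbols on top of 514 data symbols, which lets the decoder correct up to 15 corrupted symbols per codeword.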

The other option is the 4x 100G-BIDI (100G-SRBD) mode, where the module performs the FEC and the switch does not. The module applies its own FEC to each pair of fibers, which makes each pair compatible with existing 100G-BIDI modules. This way, you can keep using your installed 100G-BIDI modules alongside the new 400G-BIDI modules.
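The split described above, host FEC in some modes and module FEC in others, can be summarized as a lookup. This is a minimal sketch with hypothetical names (`FEC_LOCATION`, `host_fec_required`); it is not a switch CLI or vendor API, and the mode strings are just labels for the modes named in this answer.

```python
# Where FEC is performed for each 400G-BIDI operating mode, as described
# in the answer above. Mode labels and names here are illustrative only.
FEC_LOCATION = {
    "400G-SR4.2": "host",     # switch performs KP-FEC
    "4x100G-SR1.2": "host",   # switch performs KP-FEC
    "4x100G-BIDI": "module",  # module performs FEC per fiber pair
}


def host_fec_required(mode: str) -> bool:
    """True if the switch port must perform FEC in this mode."""
    try:
        return FEC_LOCATION[mode] == "host"
    except KeyError:
        raise ValueError(f"unknown operating mode: {mode!r}") from None


if __name__ == "__main__":
    for mode in FEC_LOCATION:
        side = "switch" if host_fec_required(mode) else "module"
        print(f"{mode}: FEC performed by the {side}")
```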

People Also Ask

New H3C Unveils the S12500AI: A New Generation AI Network Solution Based on the DDC Architecture

Recently, New H3C introduced its groundbreaking lossless network solution and compute cluster switch—the H3C S12500AI—built upon the DDC (Diversity Dynamic-Connectivity) architecture. Tailored to meet the demanding requirements of scenarios involving the interconnection of tens of thousands of compute cards, this solution redefines the network architecture of intelligent computing centers. Performance

Artificial Intelligence: High-Performance Computing and High-Speed Optical Module Technology Trends

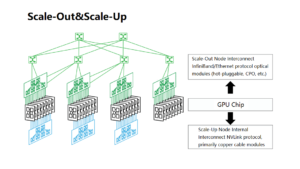

Artificial intelligence demands extraordinarily large computational power. In high-performance computing systems, there is a clear divergence in approach: scale-up systems rely on copper cable modules, while scale-out systems are increasingly dependent on optical modules. This year, detailed analyses have been conducted on copper cable modules used for scale-up applications. In

SemiAnalysis of Huawei CloudMatrix and the 910C

Huawei has recently made a significant impact on the industry with its innovative AI accelerator and rack-level architecture. China’s latest domestically developed cloud supercomputing solution, CloudMatrix M8, was officially unveiled. Built upon the Ascend 910C processor, this solution is positioned to directly rival Nvidia’s GB200 NVL72 system, exhibiting superior technological

How to Extend the Life of GPU Servers?

Routine maintenance of GPU servers is critical to ensuring their stability and extending their service life. Here are some key maintenance details. Cleaning Exterior Cleaning: Clean the server housing regularly with a microfiber cloth to avoid dust accumulation. Do not use harsh cleaners. Internal cleaning: Clean the internal dust every 3-6 months,

NVIDIA HGX B300 Overview

The NVIDIA HGX B300 platform represents a significant advancement in our computing infrastructure. Notably, the latest variant—designated as the NVIDIA HGX B300 NVL16—indicates the number of compute chips interconnected via NVLink rather than merely reflecting the number of GPU packages. This nomenclature change underscores NVIDIA’s evolving approach toward interconnect performance

Optical Transceivers Overcome Heat

The rapid development of AI and large language models has led to a surge in demand for high-speed optical transceivers in data centers and AI cluster computers. As optical transceiver speeds scale from 100 Gbps (for entry-level data center applications) to 400 Gbps (widely used in current AI clusters), to 800
