Artificial intelligence workloads are characterized by a small number of tasks that transfer large amounts of data between GPUs, and tail latency can significantly affect overall application performance. Handling this traffic pattern with traditional network routing mechanisms can lead to inconsistent GPU performance and low GPU utilization for AI workloads.

NVIDIA Spectrum-X RoCE dynamic routing is a fine-grained load-balancing technology that dynamically adjusts RDMA data routes to avoid congestion. Combined with the Direct Data Placement (DDP) technology of the BlueField-3 DPU, it provides optimal load balancing and higher effective data bandwidth.

Spectrum-X Network Platform Overview

NVIDIA® Spectrum™-X Network Platform is the first Ethernet platform designed to improve the performance and efficiency of Ethernet-based AI clouds. This breakthrough technology boosts AI performance and power efficiency by 1.7 times in large-scale AI workloads such as large language models (LLMs), and ensures consistent, predictable performance in multi-tenant environments. Spectrum-X is based on Spectrum-4 Ethernet switches and NVIDIA BlueField®-3 DPUs and is optimized end to end for AI workloads.

Spectrum-X Key Technologies

To support and accelerate AI workloads, Spectrum-X has made a series of optimizations from DPUs to switches, cables/optical devices, networks, and acceleration software, including:

- NVIDIA RoCE adaptive routing on Spectrum-4

- NVIDIA Direct Data Placement (DDP) on BlueField-3

- NVIDIA RoCE congestion control on Spectrum-4 and BlueField-3

- NVIDIA AI acceleration software

- End-to-end AI network visibility

Spectrum-X Key Benefits

- Improve AI cloud performance: Spectrum-X boosts AI cloud performance by 1.7 times.

- Standard Ethernet connectivity: Spectrum-X fully complies with Ethernet standards and is fully compatible with Ethernet-based technology stacks.

- Improve energy efficiency: By improving performance, Spectrum-X contributes to a more energy-efficient AI environment.

- Enhanced multi-tenant protection: Provides performance isolation in multi-tenant environments, ensuring optimal and consistent performance for each tenant's workload and improving customer satisfaction and service quality.

- Better AI network visibility: Monitors traffic running in the AI cloud, identifies performance bottlenecks, and serves as a key component of modern automated network validation solutions.

- Higher AI scalability: Supports scaling to 128 400G ports in a single hop, or to 8K ports in a two-tier leaf-spine topology, while maintaining high performance and supporting AI cloud expansion.

- Faster network setup: Automates end-to-end configuration of advanced network features, fully optimized for AI workloads.
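The port counts quoted above can be checked with simple arithmetic. The sketch below assumes (this split is not stated in the source) a non-blocking two-tier leaf-spine fabric in which each leaf uses half its ports for hosts and half for spines, and each spine connects once to every leaf. The function names are illustrative.

```python
# Sketch: capacity and port counts for a fabric built from 128-port switches.
# Assumption (not from the source): non-blocking leaf-spine, half of each
# leaf's ports face hosts and half face spines; each spine reaches every leaf.

PORTS_PER_SWITCH = 128   # 400G ports per Spectrum-4 switch
PORT_SPEED_GBPS = 400

def switch_capacity_tbps(ports: int, speed_gbps: int) -> float:
    """Aggregate port bandwidth of one switch in Tb/s."""
    return ports * speed_gbps / 1000

def two_tier_host_ports(radix: int) -> int:
    """Host-facing ports in a non-blocking two-tier leaf-spine fabric."""
    down_per_leaf = radix // 2   # half of each leaf's ports face hosts
    max_leaves = radix           # each spine port connects a different leaf
    return max_leaves * down_per_leaf

print(switch_capacity_tbps(PORTS_PER_SWITCH, PORT_SPEED_GBPS))  # 51.2
print(two_tier_host_ports(PORTS_PER_SWITCH))                     # 8192
```

Under these assumptions, 128 leaves with 64 host ports each give 8,192 ports, matching the "8K ports" figure, and 128 × 400G matches the 51.2Tbps ASIC described below.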

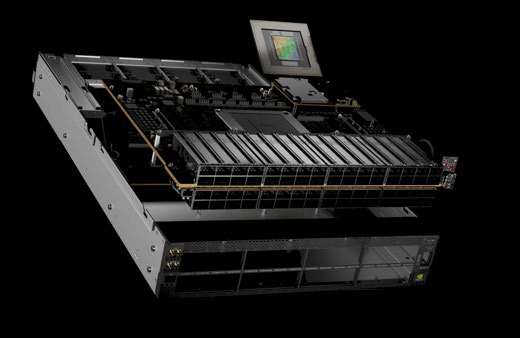

Spectrum-4 Ethernet Switch

The Spectrum-4 switch is built on a 51.2Tbps ASIC and supports up to 128 400G Ethernet ports in a single 2U switch. Spectrum-4 is the first Ethernet switch designed for AI workloads. For AI, it extends RoCE with:

- RoCE adaptive routing

- RoCE performance isolation

- Effective bandwidth enhancement on large-scale standard Ethernet

- Low latency, low jitter, and short tail latency

NVIDIA Spectrum-4 400 Gigabit Ethernet Switch

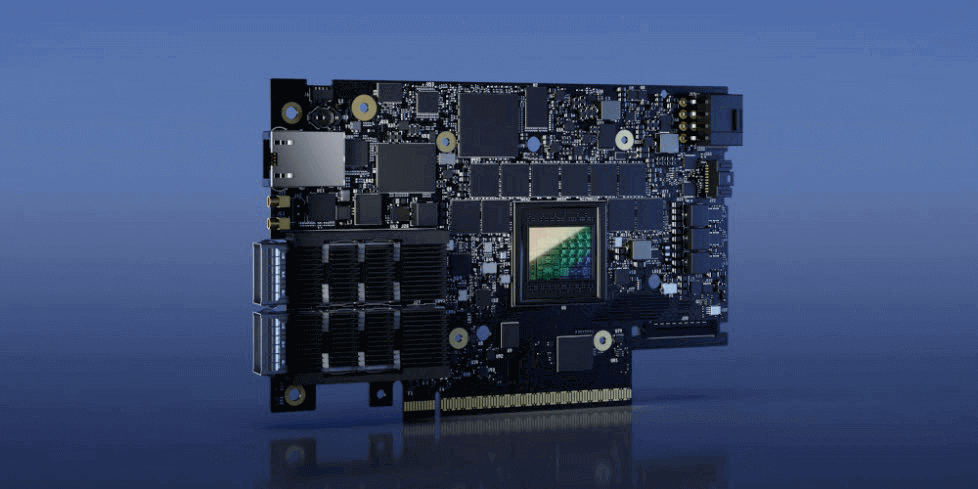

BlueField-3 DPU

The NVIDIA BlueField-3 DPU is the third-generation data center infrastructure chip that enables organizations to build software-defined, hardware-accelerated IT infrastructures from cloud to core data center to edge. With 400Gb/s Ethernet network connectivity, the BlueField-3 DPU can offload, accelerate, and isolate software-defined networking, storage, security, and management functions, significantly improving the performance, efficiency, and security of data centers. BlueField-3 provides secure, multi-tenant performance for north-south and east-west traffic in cloud AI data centers powered by Spectrum-X.

NVIDIA BlueField-3 400Gb/s Ethernet DPU

BlueField-3 is built for AI acceleration, integrating an all-to-all engine for AI, NVIDIA GPUDirect, and NVIDIA Magnum IO GPUDirect Storage acceleration technologies.

In addition, it has a special network interface card (NIC) mode that uses the DPU's local memory to accelerate large AI clouds. These clouds contain a large number of queue pairs, which can then be accessed at local addresses instead of in system memory. Finally, it includes NVIDIA Direct Data Placement (DDP) technology to enhance RoCE adaptive routing.

NVIDIA End-To-End Physical Layer (PHY)

Spectrum-X is the only Ethernet network platform built on the same 100G SerDes channel, from switch to DPU to GPU, using NVIDIA’s SerDes technology.

NVIDIA’s SerDes ensures excellent signal integrity and the lowest bit error rate (BER), greatly reducing the power consumption of the AI cloud. This powerful SerDes technology, combined with NVIDIA’s Hopper GPUs, Spectrum-4, BlueField-3, and Quantum InfiniBand product portfolio, achieves the perfect balance of power efficiency and performance.

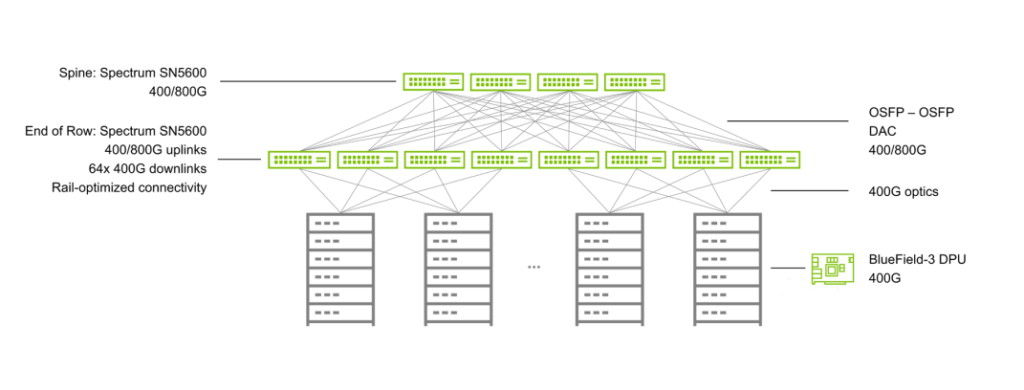

Typical Spectrum-X network topology

SerDes technology plays an important role in modern data transmission, as it can convert parallel data to serial data, and vice versa.
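As a toy illustration of the serialize/deserialize idea only, the sketch below turns parallel words into a serial bit stream and back. It deliberately ignores everything that makes a real SerDes hard (line coding such as PAM4, clock recovery, equalization); the function names and 8-bit word width are illustrative assumptions.

```python
# Toy model of SerDes: parallel words -> serial bit stream -> parallel words.
# Real SerDes also handle line coding, clock recovery and equalization,
# none of which is modeled here.
from typing import List

WORD_BITS = 8  # illustrative parallel bus width

def serialize(words: List[int], width: int = WORD_BITS) -> List[int]:
    """Emit each word MSB-first as a sequence of single bits."""
    bits = []
    for w in words:
        for i in range(width - 1, -1, -1):
            bits.append((w >> i) & 1)
    return bits

def deserialize(bits: List[int], width: int = WORD_BITS) -> List[int]:
    """Regroup the serial bit stream into parallel words."""
    if len(bits) % width:
        raise ValueError("bit stream is not a whole number of words")
    words = []
    for start in range(0, len(bits), width):
        w = 0
        for b in bits[start:start + width]:
            w = (w << 1) | b   # shift in the next received bit
        words.append(w)
    return words

print(serialize([0xA5]))  # [1, 0, 1, 0, 0, 1, 0, 1]
```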

Using SerDes technology uniformly across all network devices and components in the network or system brings many advantages:

Cost and power efficiency: The SerDes used by NVIDIA Spectrum-X is optimized for high power efficiency, and does not require gearboxes in the network, which are used to bridge different data rates. Using gearboxes not only increases the complexity of the data path but also adds extra cost and power consumption. Eliminating the need for these gearboxes reduces the initial investment and the operational costs associated with power and cooling.

System design efficiency: Using the best SerDes technology uniformly in the data center infrastructure provides better signal integrity, reduces the need for system components, and simplifies system design. At the same time, using the same SerDes technology also makes operation easier and improves availability.

NVIDIA Acceleration Software

NetQ

NVIDIA NetQ is a highly scalable network operations toolset for real-time AI network visibility, troubleshooting, and verification. NetQ leverages NVIDIA switch telemetry data and NVIDIA DOCA telemetry to provide insights into switch and DPU health, integrating the network into the organization’s MLOps system.

In addition, NetQ traffic telemetry can map flow paths and behavior across switch ports and RoCE queues to analyze the flows of specific applications.

NetQ samples, analyzes, and reports the latency (maximum, minimum, and average) and buffer occupancy on each flow path. The NetQ GUI reports all possible paths, the details of each path, and the flow behavior. Combining switch and DPU telemetry with traffic telemetry helps network operators proactively identify the root causes of server and application problems.
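To make the per-path report concrete, here is a hypothetical sketch that aggregates sampled latency and buffer-occupancy values by flow path. The `Sample` record and its field names are assumptions for illustration; this is not the NetQ API or data format.

```python
# Hypothetical per-path aggregation of sampled telemetry, in the spirit of
# the min/max/average latency and buffer-occupancy report described above.
# The record format is an assumption; this is NOT the NetQ API.
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

@dataclass(frozen=True)
class Sample:
    path: Tuple[str, ...]   # switches traversed, e.g. ("leaf1", "spine2", "leaf3")
    latency_us: float       # sampled latency in microseconds
    buffer_bytes: int       # sampled buffer occupancy in bytes

def summarize(samples: Iterable[Sample]) -> Dict[Tuple[str, ...], dict]:
    """Group samples by path and report latency and buffer statistics."""
    by_path = defaultdict(list)
    for s in samples:
        by_path[s.path].append(s)
    report = {}
    for path, group in by_path.items():
        lat = [s.latency_us for s in group]
        report[path] = {
            "min_us": min(lat),
            "max_us": max(lat),
            "avg_us": sum(lat) / len(lat),
            "max_buffer_bytes": max(s.buffer_bytes for s in group),
        }
    return report
```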

Spectrum SDK

The NVIDIA Ethernet Switch Software Development Kit (SDK) provides the flexibility to implement switching and routing functionality, with sophisticated programmability that does not affect packet rate, bandwidth, or latency. With the SDK, server and network OEMs and network operating system (NOS) vendors can use the advanced network features of the Ethernet switch integrated circuit (IC) family to build flexible, innovative, and cost-optimized switching solutions.

NVIDIA DOCA

NVIDIA DOCA is the key to unleashing the potential of the NVIDIA BlueField DPU, offloading, accelerating, and isolating data center workloads. With DOCA, developers can address the growing performance and security demands of modern data centers by creating software-defined, cloud-native, DPU-accelerated services with zero-trust protection.

NVIDIA Spectrum-X Main Features

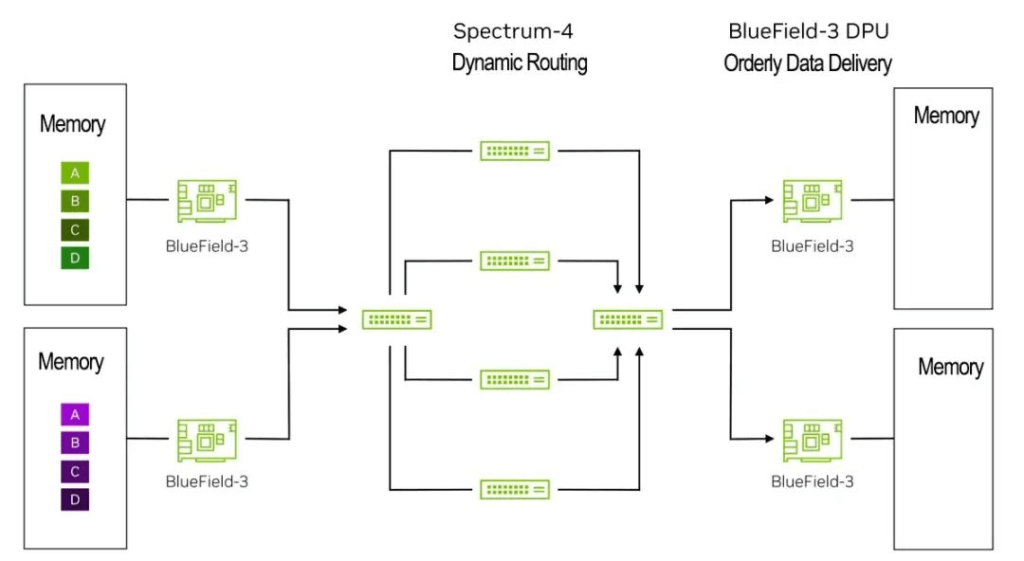

1. How NVIDIA RoCE dynamic routing works

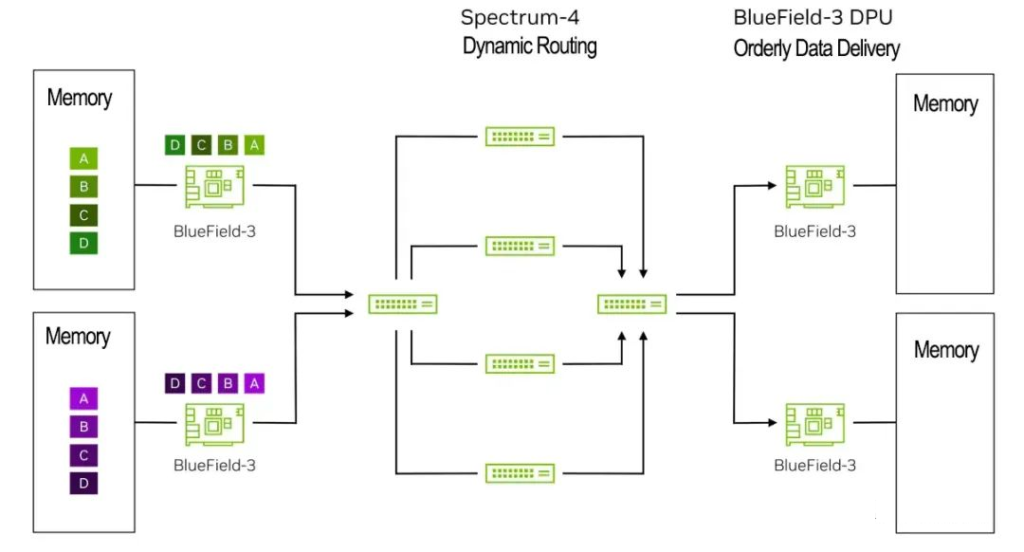

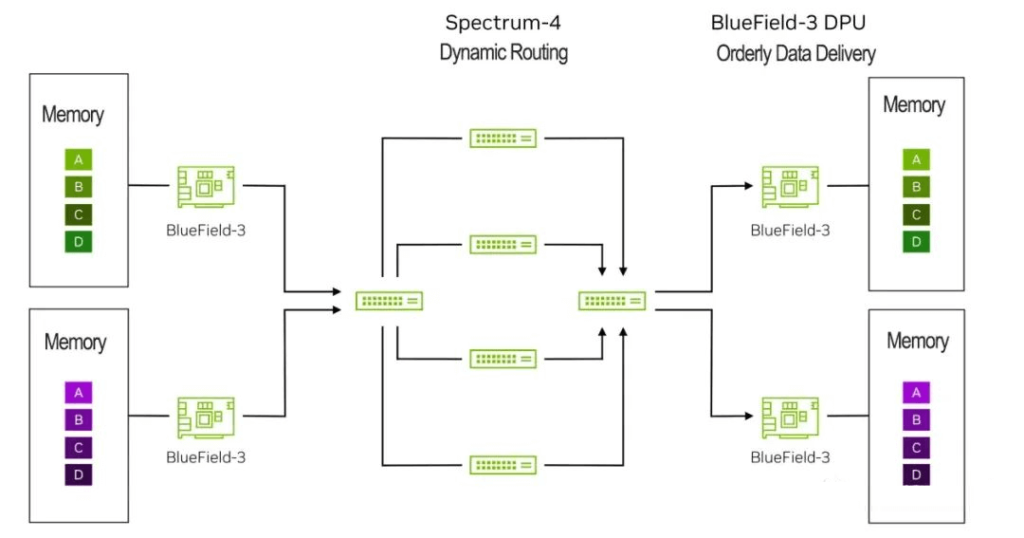

RoCE dynamic routing works end to end between the Spectrum-4 switch and the BlueField-3 DPU:

- The Spectrum-4 switch sends each packet out of the least-congested port, distributing data transmission evenly. Because different packets of the same flow can take different paths through the network, they may arrive at the destination out of order.

- The BlueField-3 DPU handles this at the RoCE transport layer, so that applications transparently see contiguous, in-order data.

The Spectrum-4 switch evaluates congestion based on the load of each egress queue and keeps all ports balanced in utilization, selecting the output queue with the lowest load for each network packet. It also receives status notifications from adjacent switches, which can further influence the forwarding decision. The evaluation considers the queues that match the packet's traffic class. As a result, Spectrum-X can achieve up to 95% effective bandwidth in large-scale systems under high load.
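The per-packet decision described above can be sketched as "pick the candidate uplink with the lowest combined load". This is a simplified model under stated assumptions: `queue_depth` holds each uplink's queue depth for the packet's traffic class, and `neighbor_penalty` is a hypothetical stand-in for adjacent-switch notifications. The actual Spectrum-4 logic and weighting are not described in the source.

```python
# Simplified model of per-packet adaptive egress selection (hypothetical).
# queue_depth: per-uplink queue depth for the packet's traffic class.
# neighbor_penalty: extra load inferred from adjacent-switch notifications.
from typing import Dict, Iterable, List, Tuple

def pick_egress(queue_depth: Dict[str, int],
                neighbor_penalty: Dict[str, int]) -> str:
    """Return the candidate port with the lowest combined local + remote load."""
    def effective_load(port: str) -> int:
        return queue_depth[port] + neighbor_penalty.get(port, 0)
    # min() returns the first minimal port, so ties resolve deterministically.
    return min(queue_depth, key=effective_load)

def spray(num_packets: int, ports: Iterable[str],
          pkt_bytes: int = 1) -> Tuple[Dict[str, int], List[str]]:
    """Route one flow's packets one at a time; queues never drain in this toy."""
    depth = {p: 0 for p in ports}
    choices = []
    for _ in range(num_packets):
        port = pick_egress(depth, {})
        depth[port] += pkt_bytes
        choices.append(port)
    return depth, choices

depth, order = spray(8, ["u0", "u1", "u2", "u3"])
print(order)  # ['u0', 'u1', 'u2', 'u3', 'u0', 'u1', 'u2', 'u3']
```

Because consecutive packets of one flow land on different uplinks, they can arrive out of order, which is exactly why the receiving DPU must handle reordering.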

2. NVIDIA RoCE dynamic routing and NVIDIA Direct Data Placement technology

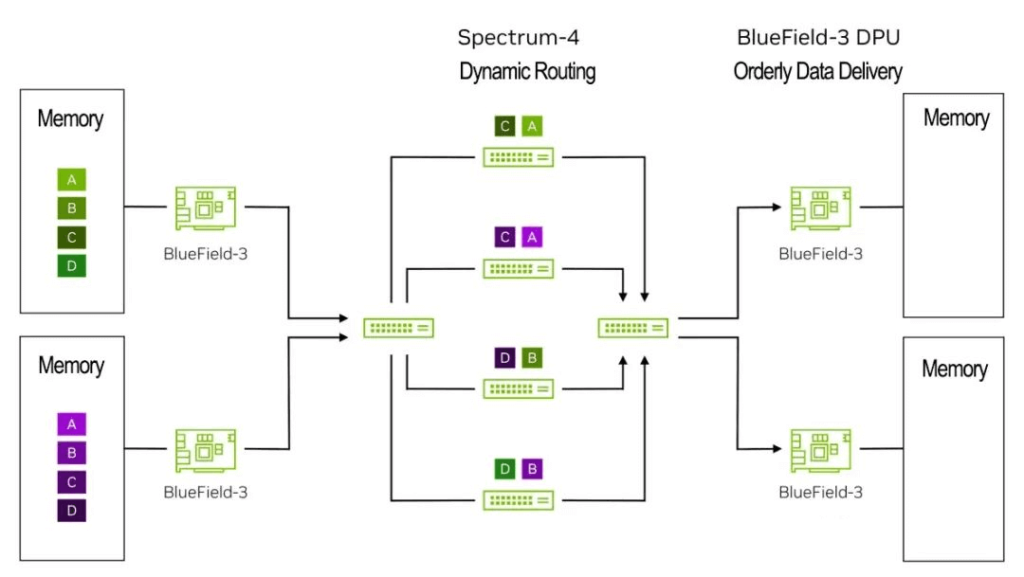

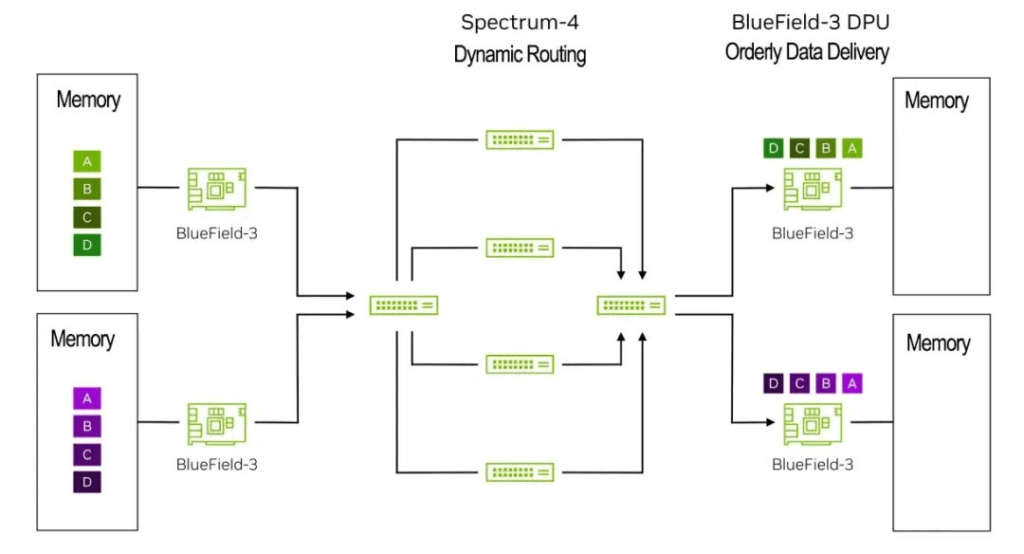

Next, let's walk through a packet-level example of how AI flows move through the Spectrum-X network. It shows how the Spectrum-4 switch and the BlueField-3 DPU cooperate at the packet level.

Step 1: Data originates in server or GPU memory on the left side of the diagram and is destined for a server on the right side.

Step 2: The BlueField-3 DPU packs the data into network packets and sends them to the first Spectrum-4 leaf switch, marking them so that the switch can apply RoCE dynamic routing to them.

Step 3: The left Spectrum-4 leaf switch applies RoCE dynamic routing to balance the packets of the green and purple flows, sending each flow's packets to multiple spine switches. This raises effective bandwidth from 60% with standard Ethernet to 95% with Spectrum-X (about 1.6 times).

Step 4: These packets may arrive out of order at the BlueField-3 DPU on the right side.

Step 5: The right-hand BlueField-3 DPU uses NVIDIA Direct Data Placement (DDP) technology to place the data in the correct order in host/GPU memory.
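The idea behind Step 5 can be sketched as follows: if every packet carries enough information to compute where its payload belongs (here, a packet sequence number), the receiver can write each payload straight into the destination buffer regardless of arrival order. The packet format, the tiny `MTU`, and the function name are hypothetical; this is not the BlueField-3 implementation.

```python
# Sketch of direct placement of out-of-order packets (hypothetical format).
# Each packet is (psn, payload); its target offset is psn * MTU, so no
# reorder buffer is needed: payloads go directly to their final location.
from typing import Iterable, Tuple

MTU = 4  # payload bytes per packet, tiny for illustration

def place(packets: Iterable[Tuple[int, bytes]], total_len: int) -> bytes:
    buf = bytearray(total_len)           # stands in for host/GPU memory
    received = set()
    for psn, payload in packets:
        offset = psn * MTU               # address derived from the sequence number
        buf[offset:offset + len(payload)] = payload
        received.add(psn)
    expected = (total_len + MTU - 1) // MTU
    if len(received) != expected:        # complete only when every packet arrived
        raise ValueError("message incomplete")
    return bytes(buf)

arrivals = [(2, b"rld!"), (0, b"hell"), (1, b"o wo")]  # out of order
print(place(arrivals, 12))  # b'hello world!'
```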

RoCE Dynamic Routing Results

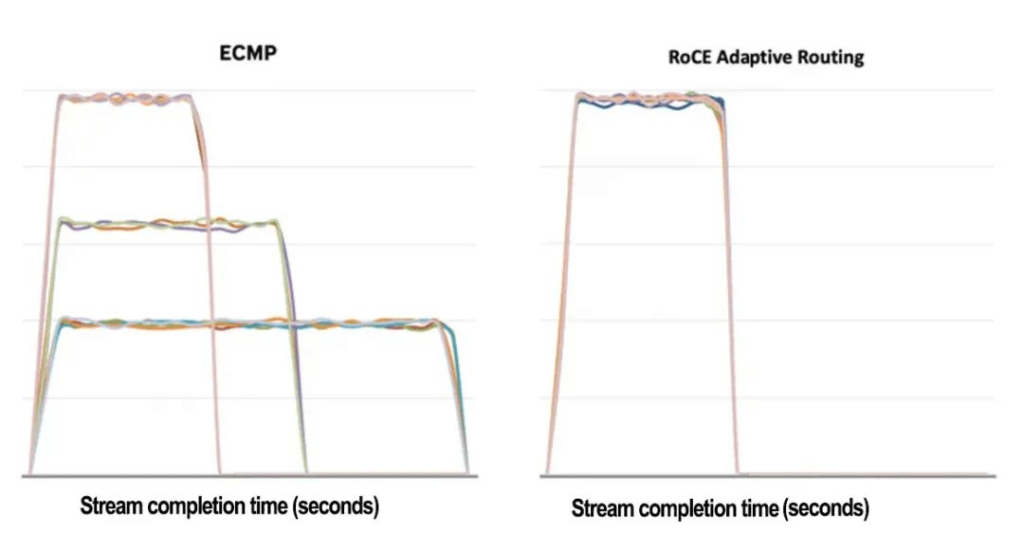

To verify the effectiveness of RoCE dynamic routing, we ran an initial test with an RDMA write test program. We divided the hosts into pairs, and each pair sent a large number of RDMA write streams to each other for a fixed period of time.

RoCE dynamic routing can reduce completion time.

As shown in the figure above, with static hash-based forwarding (ECMP), flows collide on uplink ports, which increases completion time, reduces bandwidth, and decreases fairness among flows. Switching to dynamic routing solves all of these problems.

In the ECMP graph, some flows show similar bandwidth and completion time, while others experience collisions, resulting in longer completion times and lower bandwidth. Specifically, in the ECMP scenario, some flows achieve the best completion time T of 13 seconds, while the slowest flow takes 31 seconds, about 2.4 times the ideal time T. In the RoCE dynamic routing graph, all flows finish at almost the same time and have similar peak bandwidths.
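The collision effect can be reproduced with a toy model. The sketch assumes equal-size flows, equal-capacity uplinks, fair sharing of a link among the flows hashed onto it, and `zlib.crc32` as a stand-in for the switch's ECMP hash (the real hash function is not given in the source). Under fair sharing, k equal flows on one link all finish at k × T, while ideal per-packet spraying gives every flow the average load.

```python
# Toy comparison: hash-based ECMP vs. ideal per-packet spraying.
# Assumptions: equal-size flows, equal uplink capacity, fair sharing,
# zlib.crc32 standing in for the switch's ECMP hash.
import zlib
from collections import Counter
from typing import List, Tuple

def ecmp_completion(flows: List[Tuple], uplinks: int, ideal_t: float) -> List[float]:
    """Each flow is pinned to one uplink; k flows sharing it finish at k * T."""
    link_of = [zlib.crc32(repr(f).encode()) % uplinks for f in flows]
    load = Counter(link_of)
    return [load[link] * ideal_t for link in link_of]

def spray_completion(flows: List[Tuple], uplinks: int, ideal_t: float) -> List[float]:
    """Packets spread over all uplinks, so every flow sees the average load."""
    avg_load = len(flows) / uplinks
    return [max(avg_load, 1.0) * ideal_t for _ in flows]  # never faster than T

# Flows as (src IP, dst IP, UDP src port, UDP dst port 4791 for RoCEv2).
flows = [("10.0.0.%d" % i, "10.0.1.%d" % i, 49152 + i, 4791) for i in range(8)]
ecmp = ecmp_completion(flows, uplinks=8, ideal_t=13.0)
spray = spray_completion(flows, uplinks=8, ideal_t=13.0)
print("ECMP slowest / ideal:", max(ecmp) / 13.0)
print("spray slowest / ideal:", max(spray) / 13.0)
```

Spraying removes the dependence on which flows happen to hash together, which is why all flows in the dynamic routing graph finish at nearly the same time.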

RoCE dynamic routing for AI workloads

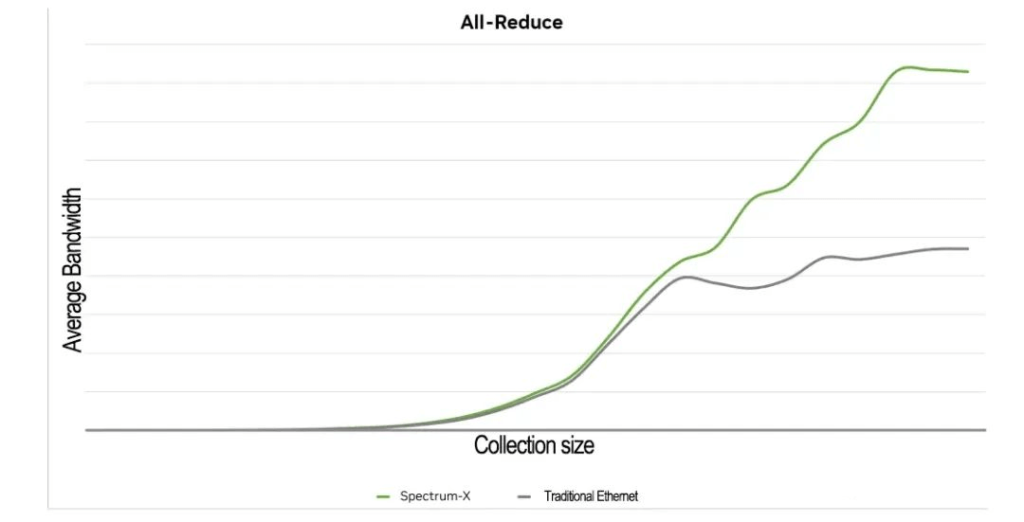

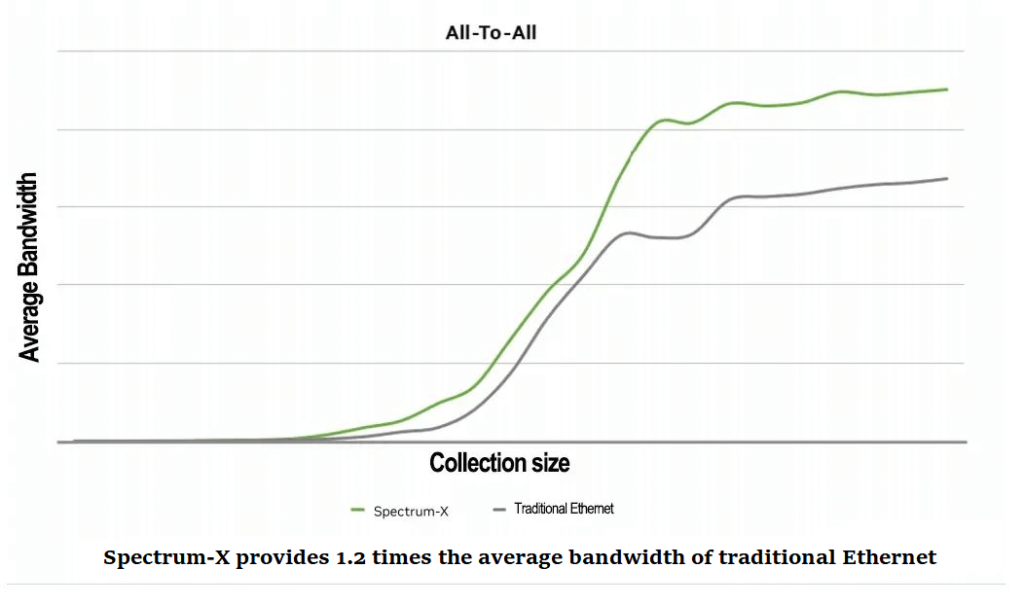

To further evaluate the performance of RoCE workloads with dynamic routing, we ran common AI benchmarks on a test platform of 32 servers connected by a two-tier leaf-spine topology built from four NVIDIA Spectrum switches. These benchmarks evaluated the collective operations and network traffic patterns common in distributed AI training workloads, such as all-to-all traffic and all-reduce collective operations.
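For a sense of scale, the sketch below counts the flows in an all-to-all exchange and the per-rank traffic of an all-reduce. It assumes one rank per server and, for all-reduce, the classic ring algorithm (one of the algorithms NCCL can use); the source does not say which algorithm or rank layout the benchmark used.

```python
# Back-of-the-envelope traffic for the two collectives benchmarked above.
# Assumptions: one rank per server; all-reduce uses the ring algorithm.
from itertools import permutations
from typing import List, Tuple

def all_to_all_pairs(n: int) -> List[Tuple[int, int]]:
    """Every rank sends a distinct chunk to every other rank."""
    return list(permutations(range(n), 2))

def ring_allreduce_bytes_per_rank(n: int, size_bytes: float) -> float:
    """Reduce-scatter plus all-gather: each rank sends 2 * (n - 1) / n * size."""
    return 2 * (n - 1) / n * size_bytes

print(len(all_to_all_pairs(32)))  # 992 simultaneous (src, dst) flows
```

With hundreds of concurrent flows crossing the spine, static hashing makes collisions on some uplinks very likely, which is where per-packet routing helps.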

RoCE dynamic routing enhances AI all-reduce

RoCE dynamic routing enhances AI all-to-all

RoCE dynamic routing summary

In many cases, hash-based ECMP flow routing can cause heavy congestion and unstable flow completion times, degrading application performance. Spectrum-X RoCE dynamic routing solves this problem: it improves actual network throughput (goodput) while minimizing variation in flow completion times, thereby improving application performance. Combined with NVIDIA Direct Data Placement (DDP) technology on the BlueField-3 DPU, RoCE dynamic routing remains transparent to applications.

Using NVIDIA RoCE congestion control to achieve performance isolation

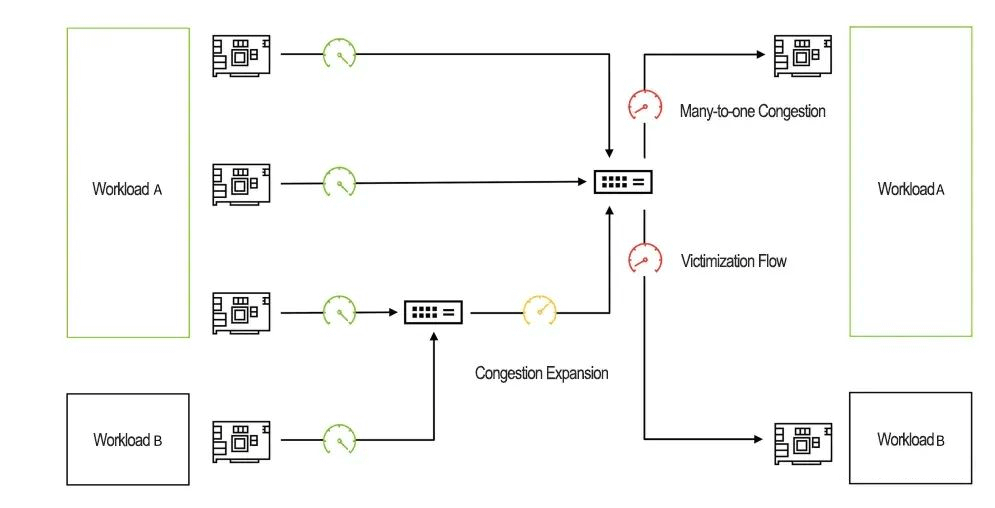

Due to network congestion, applications running in AI cloud systems may experience performance degradation and unstable running times. This congestion may be caused by the application's own network traffic or by other applications' background traffic. The main cause is many-to-one congestion, in which multiple senders transmit data to a single receiver.

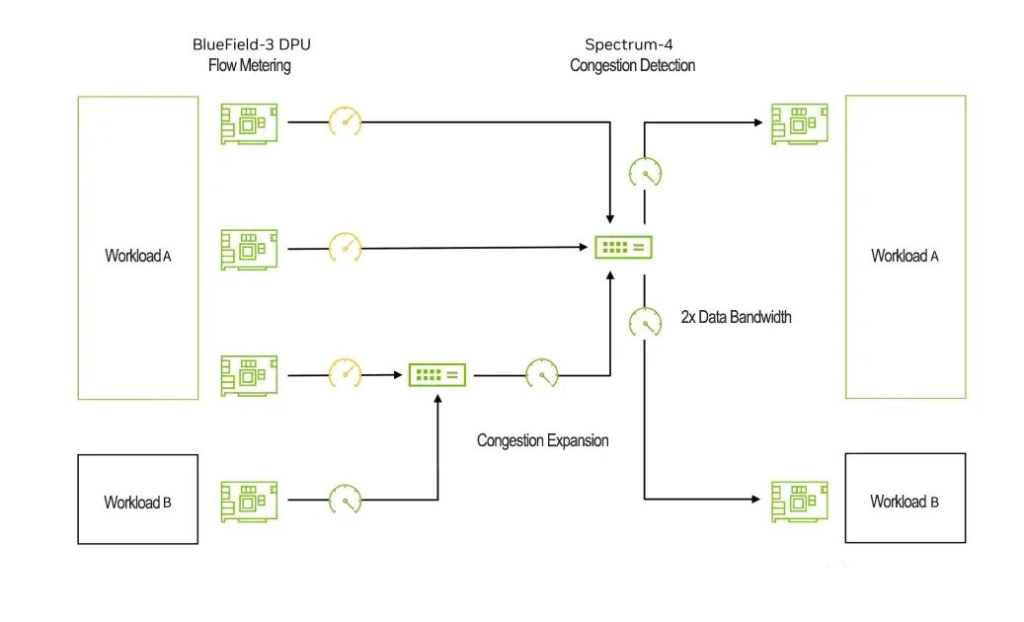

RoCE dynamic routing cannot solve this kind of congestion; solving it requires measuring the traffic that each endpoint injects into the network. Spectrum-X RoCE congestion control is a point-to-point technology: the Spectrum-4 switch provides network telemetry that represents real-time congestion in the network, and the BlueField-3 DPU processes this telemetry to manage and control the data injection rate of senders, maximizing the efficiency of the shared network. Without congestion control, a many-to-one scenario can cause network overload, congestion spreading, or packet loss, all of which severely degrade network and application performance.

In the congestion control process, the BlueField-3 DPU runs a congestion control algorithm that can process tens of millions of congestion control events per second with microsecond-level reaction time, making fast, fine-grained rate decisions. The Spectrum-4 switch uses internal telemetry to estimate congestion accurately, enabling precise rate decisions, and provides a port utilization indicator for fast recovery. NVIDIA's congestion control lets telemetry data bypass the queuing delay of congested flows while still providing accurate, concurrent telemetry, greatly reducing detection and response time.

The following example shows how a network experiences many-to-one congestion, and how Spectrum-X uses traffic measurement and internal telemetry for RoCE congestion control.
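To show the shape of a telemetry-driven sender loop, here is a generic sketch. It is a textbook multiplicative-decrease / additive-increase rule, explicitly NOT NVIDIA's algorithm, and it assumes the sender learns the bottleneck port's offered load (as a fraction of capacity) once per control interval. All constants are hypothetical.

```python
# Generic sender-side rate control driven by a per-interval load signal.
# Textbook multiplicative-decrease / additive-increase, NOT NVIDIA's
# algorithm; the target, step sizes and line rate are hypothetical.
from typing import List

LINE_RATE_GBPS = 400.0

def next_rate(rate: float, load: float, target: float = 0.95,
              md: float = 0.5, ai_gbps: float = 5.0) -> float:
    """One control step: back off above the target load, else probe upward."""
    if load > target:
        return max(rate * md, 1.0)
    return min(rate + ai_gbps, LINE_RATE_GBPS)

def simulate_incast(senders: int, steps: int) -> List[float]:
    """Many-to-one: all senders share one receiver port of LINE_RATE_GBPS."""
    rates = [LINE_RATE_GBPS] * senders          # everyone starts at line rate
    for _ in range(steps):
        load = sum(rates) / LINE_RATE_GBPS      # offered load at the receiver
        rates = [next_rate(r, load) for r in rates]
    return rates

print(simulate_incast(3, 200))  # three equal rates whose sum stays below 400
```

After the first few intervals, the combined rate oscillates just below the receiver's capacity instead of overloading it, and the three senders keep equal shares.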

Network congestion leads to disturbed flows

This figure shows a flow affected by network congestion. Four source DPUs send data to two destination DPUs. Sources 1, 2, and 3 send data to destination 1, using three-fifths of the available link bandwidth. Source 4 sends data to destination 2 through a leaf switch that it shares with source 3, so destination 2 receives two-fifths of the available link bandwidth.

Without congestion control, sources 1, 2, and 3 create a three-to-one congestion ratio because they all send data to destination 1. This congestion causes back pressure from the leaf switch connected to source 1 and destination 1. Source 4's flow becomes a victim of this congestion: its throughput at destination 2 drops to 33% of the available bandwidth (versus an expected 50%). This hurts the performance of AI applications, which depend on both average and worst-case performance.

Spectrum-X solves congestion problems by traffic measurement and telemetry

The figure shows how Spectrum-X solves the congestion problem in Figure 14, using the same test environment: four source DPUs send data to two destination DPUs. Here, traffic measurement at sources 1, 2, and 3 keeps the leaf switches from becoming congested. This eliminates the back pressure on source 4, allowing it to achieve its expected bandwidth of two-fifths. In addition, Spectrum-4 uses internal telemetry generated by What Just Happened to dynamically reassign flow paths and queue behavior.

RoCE Performance Isolation

AI cloud infrastructure needs to support a large number of users (tenants) and parallel applications or workloads. These users and applications compete for shared resources in the infrastructure, such as the network, which may affect their performance.

In addition, to optimize NVIDIA Collective Communications Library (NCCL) network performance for AI applications in the cloud, all workloads running in the cloud need to be coordinated and synchronized. The traditional advantages of the cloud, such as elasticity and high availability, matter less for AI application performance, where performance degradation is the more important, global problem.

The Spectrum-X platform includes several mechanisms that, combined, achieve performance isolation. They ensure that one workload does not affect another workload's performance, and that no workload causes network congestion that could disrupt other workloads' data transmission.

RoCE dynamic routing achieves performance isolation through fine-grained data path balancing, which avoids flow collisions in the leaf and spine switches. Enabling RoCE congestion control with traffic measurement and telemetry further enhances performance isolation.

In addition, the Spectrum-4 switch uses a globally shared buffer design to support performance isolation. The shared buffer provides bandwidth fairness for flows of different sizes, protects workloads from noisy-neighbor flows when multiple flows target the same destination port, and better absorbs short bursts when multiple flows target different destination ports.
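To illustrate why sharing helps absorb bursts, the sketch compares a statically partitioned buffer with a shared buffer governed by the classic dynamic-threshold rule (a queue may grow while its length is below alpha times the free space). This is a swapped-in textbook scheme: the source does not describe Spectrum-4's actual buffer policy, and the sizes and `alpha` are hypothetical.

```python
# Static per-port partitioning vs. a shared buffer with a dynamic threshold.
# The dynamic-threshold rule is a textbook scheme, not Spectrum-4's policy;
# buffer sizes (in cells) and alpha are hypothetical.

def static_accept(burst: int, total_buffer: int, ports: int) -> int:
    """Each port owns total_buffer // ports cells; the rest of a burst drops."""
    return min(burst, total_buffer // ports)

def shared_accept(burst: int, total_buffer: int, alpha: float = 1.0) -> int:
    """Admit cells while queue < alpha * free space (one active port here)."""
    queue = 0
    for _ in range(burst):
        free = total_buffer - queue
        if queue >= alpha * free:   # threshold shrinks as the buffer fills
            break
        queue += 1
    return queue

print(static_accept(400, 1000, ports=8))  # 125 cells absorbed, 275 dropped
print(shared_accept(400, 1000))           # 400 cells absorbed
```

The threshold also caps how much of the pool any single queue can take, which is one way a shared buffer can limit the impact of a noisy neighbor.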
