White-box switches have developed rapidly in recent decades. The Open Networking Foundation (ONF), the Linux Foundation, the Open Compute Project (OCP), the Telecom Infra Project (TIP), and other open-source organizations have made significant contributions. A white-box switch is an open network device in which software and hardware are decoupled. Compared with the traditional closed switch, which integrates software and hardware, it has several advantages:

First, the white-box switch adopts an open device architecture and decouples software from hardware, so both the underlying hardware and the upper-layer software can be customized according to business needs. Compared with the traditional model, in which switch software and hardware are purchased as a bundle from a single vendor, this can significantly reduce the purchase cost of the switch. In addition, software functions can be built through secondary development on open-source software, which shortens the development cycle and lowers cost.

Second, white-box switches support hardware data plane programmability and containerized software deployment. They customize data plane forwarding logic through software-defined methods and use modern cloud computing technology to upgrade and iterate network functions rapidly, improving network flexibility, agility, and performance. In addition, containerized deployment allows management, operation, and maintenance to be unified and simplified, which reduces network operation and maintenance costs.

Finally, white-box switching has been widely recognized by upstream and downstream companies in the switch industry, such as chip manufacturers, equipment providers, cloud service providers, and telecom operators. This links the white-box open-source ecosystem with the industrial ecosystem to form a prosperous white-box network ecosystem, which can ultimately promote the continuous innovation and evolution of the network, solve current business problems, and meet future network requirements.

At present, white-box switches have formed a network ecosystem with industrialization capabilities. They have developed from commercial programmable chips to the standardization of white-box hardware devices, from the unified chip interface to the open-source switching operating systems. This article first briefly describes the development history of white-box switches, then introduces the current status of white-box switches from the perspectives of open-source ecology and industrial ecology, and finally expounds on the future development trends related to white-box switches.

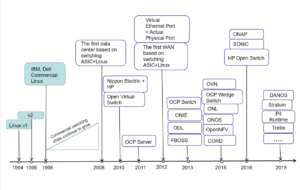

The development history of white-box switches

In 1998, IBM, Compaq, Dell, and other companies started to use commercial Linux systems one after another, and their network technology and related ecosystems began to develop rapidly.

In 2008, Linux began to combine with switching chips to provide large-capacity, high-bandwidth intra-domain data transmission services in data center scenarios.

To further promote the commercial development of Linux switches, Nippon Electric (NEC) and Hewlett-Packard (HP) started to study switch software technology and launched open software switches based on OVS (Open vSwitch) in 2010. Network resources and capabilities were opened up to an unprecedented degree, and network operations began to move toward automation and intelligence.

In 2011, building on switch software technology, OCP and other organizations began to pay attention to switch virtualization technology. They started to standardize white-box switch hardware and launched ONIE (Open Network Install Environment), FBOSS (Facebook Open Switching System), device management software, and standard documents for the ODL (OpenDaylight) controller, which were major breakthroughs in the field of SDN and white-box switches.

In 2015, OCP successfully launched the first white-box switch, Wedge. At the same time, virtualization and white-box projects emerged one after another, such as OVN (Open Virtual Network) for virtualized SDN networks, the ONL (Open Network Linux) operating system, the ONOS (Open Network Operating System) controller, and OPNFV and CORD in the telecom field.

Since 2016, technologies such as white-box equipment, software operating systems, and network automation have developed vigorously. Open-source switch operating systems have emerged one after another, such as SONiC (Software for Open Networking in the Cloud) launched by Microsoft, OpenSwitch by HP, DANOS (Disaggregated Network Operating System) by AT&T, and Stratum launched by Google for NG-SDN (Next Generation SDN). At the same time, network management and control solutions such as ONAP, the P4Runtime interface, and Trellis have also appeared, and the network technology related to white-box switches is more prosperous than ever.

The open-source ecosystem of white-box switches mainly revolves around several open-source organizations at home and abroad:

1). the Open Compute Project (OCP), which is responsible for formulating hardware standards for white-box switches;

2). the Open Networking Foundation (ONF), which promotes the development and implementation of SDN-related technologies in white-box switches;

3). the Telecom Infra Project (TIP), which explores the use of white-box switch technology to change the traditional method of building and deploying telecom network infrastructure;

4). the Open Data Center Committee (ODCC), which works with domestic institutions to carry out open, cooperative, innovative, and win-win development around data center infrastructure.

Open Networking Foundation

The Open Compute Project (OCP) is an open hardware project launched by Facebook, Intel, Rackspace, Goldman Sachs, and Andy Bechtolsheim in 2011 to share open-source designs. It has become a fast-growing global cooperative community. OCP focuses on redesigning hardware technology to make it more efficient, flexible, and scalable in order to support growing demands on computing infrastructure. OCP provides a framework for individuals and organizations to share intellectual property with others, and it promotes the openness and popularization of server, storage, and data center technologies through the combination of open-source hardware and software.

The Open Networking Foundation (ONF) is an open-source organization in the network field, founded in 2011 by Nick McKeown and Scott Shenker, the main proponents of SDN. It aims to promote the development and implementation of SDN and is a recognized leader and standard-bearer in the SDN field. Since its inception, ONF has successfully promoted SDN into a next-generation network technology generally accepted by operators, equipment vendors, and service providers.

The Telecom Infra Project (TIP) is an open organization in the telecommunications field, launched under the leadership of Facebook in 2016. It aims to change the traditional method of building and deploying telecommunications network infrastructure by developing new technologies through joint cooperation.

The Open Data Center Committee (ODCC) operates under the guidance of the China Communications Standards Association and pursues openness, cooperation, innovation, and win-win development. It focuses on servers, data center facilities, networks, new technologies and testing, edge computing, intelligent monitoring and management, and related areas.

In the white-box switch industry ecosystem, a complete industrial chain has formed, from upstream equipment providers to downstream cloud service providers and telecom operators. Equipment suppliers mainly include Cisco and H3C, which provide more open, white-box-like equipment solutions for the market. Cloud service providers mainly include Google, Microsoft, Alibaba, and Tencent, which have begun to study the operating systems of white-box switches and to use them to promote new ones. Telecom operators mainly include AT&T, China Mobile, China Unicom, and China Telecom, which use white-box switches for business transformation and network reconstruction.

In terms of the granularity of control over devices, the development of white-box network devices has gone through two stages so far. In the first stage, network equipment and its software are centrally controlled by the network owner, and the functions or protocols of a network device can be modified and configured remotely. At this stage, the network equipment, software, and interfaces are relatively closed. Protocol interoperability is poor, forwarding logic is fixed, new protocols and functions take a long time to develop, and research and development costs are high, so this model cannot meet the need for flexible and diverse new network functions.

Network equipment has therefore gradually moved into the second stage: an open equipment architecture with controllable data packet forwarding. The originally fixed pipeline has been transformed into the flexible and programmable PISA (Protocol-Independent Switch Architecture). Thanks to the rise of open-source network software such as OVS, SONiC, FBOSS, FRR (FRRouting), and ONOS, opaque and closed networks have become transparent and open.


PISA (Protocol-Independent Switch Architecture)

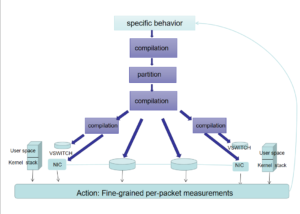

As networks grow in scale and the types of services multiply, network management and control become more difficult. Management of network equipment therefore needs to move away from manual maintenance by dedicated personnel and toward an end-to-end white-box open system, including 5G, that is fully software-defined and programmable from top to bottom. This requires an open network architecture with decoupled software and hardware, flexible programmability, and on-demand change. It should also meet the differentiated and customized network requirements of different industries and accelerate the deep integration of the network and the real economy.

For the network management plane, the network administrator only needs to describe the intended management behavior at the top level to build a closed loop of intelligent network management. The network then automatically partitions, compiles, and runs according to that description. Network resources (including cloud, ISP, and 5G networks) are treated as programmable carriers, and daily verification and real-time inspection are performed through software automation.

A closed loop of intelligent network management

In order to realize the above functions, it is necessary to master the following three key technologies:

(1) Highly controllable maintenance: research on high-performance BFD (Bidirectional Forwarding Detection) to achieve millisecond-level status detection of network resources;

(2) High-precision network perception: high-precision network measurement based on INT (In-band Network Telemetry) and other telemetry techniques, to verify whether each data packet and each state is “correct”;

(3) Efficient network scheduling: an SR (Segment Routing) mechanism suitable for large-scale networks, to achieve efficient scheduling and control of traffic bandwidth and paths.
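To make the millisecond-level detection in item (1) concrete, the following minimal sketch computes the failure detection time for BFD in asynchronous mode as described in RFC 5880: the detect multiplier received from the remote system multiplied by the agreed interval (the larger of the local Required Min RX interval and the remote Desired Min TX interval). The function name, parameter names, and example timer values are illustrative assumptions, not taken from any particular switch implementation.

```python
def bfd_detection_time_us(remote_detect_mult: int,
                          local_required_min_rx_us: int,
                          remote_desired_min_tx_us: int) -> int:
    """Return the BFD asynchronous-mode detection time in microseconds."""
    if remote_detect_mult < 1:
        raise ValueError("detect multiplier must be at least 1")
    # The remote side transmits no faster than either peer allows.
    agreed_interval_us = max(local_required_min_rx_us, remote_desired_min_tx_us)
    return remote_detect_mult * agreed_interval_us


# Assumed example: both peers use 10 ms timers and a multiplier of 3.
detection_us = bfd_detection_time_us(3, 10_000, 10_000)
print(f"detection time: {detection_us / 1000:.0f} ms")
```

With these assumed values, a failed path is declared down after three missed 10 ms intervals, which is the kind of millisecond-level detection the list refers to.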

White-box switches involve cooperation at multiple levels, including not only hardware selection and adaptation but also a number of new network technologies. To sort out the architecture and technologies involved in white-box switches and to better promote technical research and ecosystem building in this field, this chapter introduces the key technical points of white-box switch design from four aspects: software and hardware decoupling technology, programmable network technology, hardware acceleration technology, and white-box security technology.

1. Software and hardware decoupling technology

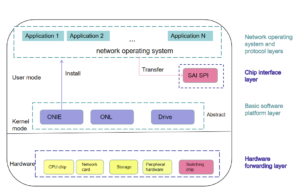

AT&T breaks down the white box switch ecosystem into four layers:

- Hardware 1 Layer: The commercial chip layer, which is responsible for the underlying switching and forwarding. At present, there is no hard standard for this layer.

- Software 1 Layer: The chip interface layer, which abstracts the functions of the chip and provides services upward. This layer requires standardization in principle, but it takes time.

- Hardware 2 Layer: The network function reference design layer, which provides the network function design reference for hardware devices. This layer mainly includes the reference designs for hardware device network functions formulated by the OCP project.

- Software 2 Layer: The network operating system and protocol layer, which is responsible for implementing the functions of the control and management planes. This layer mainly includes the network operating system and the upper-layer network protocol applications, and it is the most important layer.

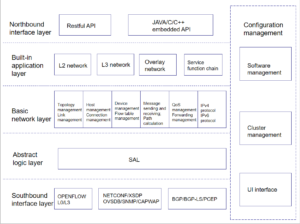

2. Network programmable technology

The control plane mainly performs centralized management of the underlying network switching equipment, including status monitoring, forwarding decision-making, and processing and scheduling of data plane traffic, and it implements functions such as link discovery, topology management, policy formulation, and table entry delivery. Upward, it uses northbound interfaces to provide flexible network resource abstraction for upper-layer business applications and resource management systems, and it opens up multiple levels of programmable capabilities.

Multiple levels of programmable capabilities

The development of control plane programmable technology will bring the following advantages:

1) White-box switches use a network operating system similar to that of servers, so existing server management tools can be used to automate the network, and open-source server software packages are easy to install. The same configuration management interface can be used on the switch as on the server, which speeds up innovation;

2) The specialized network environment of traditional switches becomes a more general environment, which makes it possible to expand and manage network services efficiently and improves the programmability and network visibility of white-box switches;

3) Dynamic programmability can be realized in the network operating system of the switch through APIs and controllers. Required network functions (such as network splitters) can also be written in software, which reduces hardware deployment on each switch and centralizes network management and monitoring.

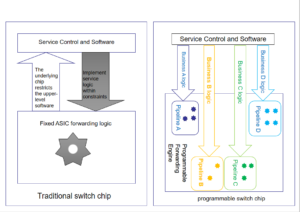

The traditional data plane fixes all of the packet processing and forwarding logic of the network in the hardware chip, where it is executed by chip logic at full wire speed, which greatly improves network performance. However, it cannot meet the growing requirements that today’s upper-layer business and control software place on the underlying network, because the forwarding plane is largely constrained by fixed-function ASIC chips.

Traditional switch chip vs. Programmable switch chip

The core of programmable network technology is a switch chip with programmable characteristics, that is, a chip whose packet processing and forwarding logic can be adjusted as needed through software. At present, the hardware carrier of a programmable switching chip is a combination of ASIC and FPGA (Field Programmable Gate Array).
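The idea that forwarding logic can be adjusted through software can be illustrated with a toy match-action pipeline in the spirit of PISA: a parser extracts header fields, one or more match-action tables look up a field and run an action, and a deparser reassembles the packet. This is a minimal sketch under simplifying assumptions. The two-byte header layout, the `MatchActionTable` class, and the `forward` and `drop` actions are all hypothetical and do not correspond to any real chip or to the P4 language itself.

```python
def parse(raw: bytes) -> dict:
    """Toy parser: byte 0 is the destination ID, byte 1 is the TTL."""
    return {"dst": raw[0], "ttl": raw[1], "payload": raw[2:], "egress_port": None}


def deparse(pkt: dict) -> bytes:
    """Rebuild the packet bytes from the (possibly modified) header fields."""
    return bytes([pkt["dst"], pkt["ttl"]]) + pkt["payload"]


def drop(pkt: dict) -> None:
    pkt["egress_port"] = None


def forward(pkt: dict, port: int) -> None:
    # Drop instead of forwarding when the TTL would expire.
    if pkt["ttl"] <= 1:
        drop(pkt)
        return
    pkt["ttl"] -= 1
    pkt["egress_port"] = port


class MatchActionTable:
    """Exact-match table: the entries are data, so behavior changes without new hardware."""

    def __init__(self, match_field: str, default_action=drop):
        self.match_field = match_field
        self.default_action = default_action
        self.entries = {}

    def add_entry(self, key, action, *params) -> None:
        self.entries[key] = (action, params)

    def apply(self, pkt: dict) -> None:
        action, params = self.entries.get(
            pkt[self.match_field], (self.default_action, ()))
        action(pkt, *params)


def run_pipeline(raw: bytes, tables: list) -> tuple:
    """Parse, apply each table in order, then deparse."""
    pkt = parse(raw)
    for table in tables:
        table.apply(pkt)
    return pkt["egress_port"], deparse(pkt)


table = MatchActionTable("dst")
table.add_entry(10, forward, 1)  # destination 10 goes out of port 1
print(run_pipeline(bytes([10, 64]) + b"hi", [table]))
```

Replacing the parser, the table key, or the actions changes what the pipeline does without touching `run_pipeline`, which is the property that distinguishes a programmable chip from a fixed-function one.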

3. Hardware acceleration technology

In most scenarios, the switch is responsible only for transmitting network data packets, and processing and computation are performed after the packets arrive at the destination server. However, with the rapid growth of network traffic, and limited by the performance bottlenecks of CPUs and switching chips, the existing data plane architecture can no longer meet users’ requirements for low latency and high throughput.

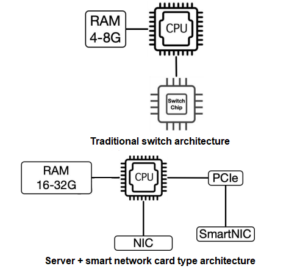

To solve these problems, hardware acceleration cards such as smart network cards (SmartNICs) and FPGAs can be integrated into the data plane, and hardware acceleration technology can be used to offload network traffic. Reducing overall network latency and the resource consumption of CPUs and switch chips can significantly improve the overall performance and service quality of the network.

Traditional switch architecture vs. CPU+SmartNIC architecture

The data plane can use a heterogeneous combination of CPU and SmartNIC, with the CPU connected to the SmartNIC through a high-speed PCIe interface. During forwarding, the parts of packet handling that need special processing (network functions that consume large amounts of CPU resources or that gain significantly from hardware processing) can be offloaded directly to the SmartNIC. This combination not only performs normal network packet forwarding but also strengthens the processing capability of the device, which can effectively improve the performance of the white-box switch and reduce network delay.
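The offload criterion described above (high CPU cost or large hardware gain) can be sketched as a simple placement rule. Everything here is a hypothetical illustration: the `NetworkFunction` fields, the thresholds, and the example cost figures are assumptions chosen for the example, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkFunction:
    name: str
    cpu_cycles_per_packet: int  # assumed estimate of CPU cost
    hw_speedup: float           # assumed gain when run on the SmartNIC


def should_offload(nf: NetworkFunction,
                   cycle_threshold: int = 2000,
                   speedup_threshold: float = 4.0) -> bool:
    """Offload when the function is CPU-heavy or benefits strongly from hardware."""
    return (nf.cpu_cycles_per_packet >= cycle_threshold
            or nf.hw_speedup >= speedup_threshold)


def placement(functions: list) -> dict:
    """Map each network function name to 'smartnic' or 'cpu'."""
    return {nf.name: "smartnic" if should_offload(nf) else "cpu"
            for nf in functions}


# Assumed example workloads.
functions = [
    NetworkFunction("l2_forward", 200, 1.2),
    NetworkFunction("ipsec_encrypt", 5000, 10.0),
    NetworkFunction("checksum", 300, 6.0),
]
print(placement(functions))
```

In practice the decision would also depend on SmartNIC capacity and PCIe transfer overhead, which this sketch deliberately ignores.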

4. White box security technology

The open architecture of white-box switches raises security issues that cannot be ignored. For example, ONIE allows users to deploy or replace network operating systems (including booting and restoring network operating systems from vendors such as Big Switch Networks and Cumulus Networks) without replacing hardware.

By exploiting vulnerabilities and flaws in ONIE, including a lack of authentication and encryption, an attacker could insert malicious code during the boot phase of the switch (that is, before the operating system is fully loaded). Because the security software of the operating system cannot run during the boot phase, the loaded malicious code is treated as a known, good component. Even if an attack is detected, removing the malicious code by replacing the firmware can be costly for users.
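One generic mitigation for the missing authentication described above is to check an operating system image against a trusted digest before installing it. The sketch below shows only that general idea using Python's standard `hashlib` and `hmac` modules. It is not ONIE's mechanism, the function names are hypothetical, and a digest check helps only if the expected value is obtained over a trusted channel (a cryptographic signature would be a stronger design).

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the image as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_image_trusted(image: bytes, expected_sha256_hex: str) -> bool:
    """Compare digests in constant time to avoid leaking match position."""
    return hmac.compare_digest(sha256_hex(image), expected_sha256_hex.lower())


# Assumed example: the expected digest would normally come from the vendor.
image = b"example NOS image contents"
expected = sha256_hex(image)

print(is_image_trusted(image, expected))                 # unmodified image
print(is_image_trusted(image + b"\x00malware", expected))  # tampered image
```

An installer following this pattern would refuse to boot or install any image for which `is_image_trusted` returns `False`.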

5. Device Architecture

The white-box switch is divided into two parts: hardware and software. The hardware generally includes switching chips, CPU chips, network cards, storage, and peripheral hardware devices, and its interfaces and structures need to comply with the OCP standardization specifications. Software refers to the Network Operating System (NOS) and its network applications. In a white-box switch, the NOS is generally installed with the help of a basic software platform (such as ONIE). The chip interface layer (such as SAI) encapsulates the hardware functions of the switching chip into a unified interface, decoupling the upper-layer application from the underlying hardware. Specifically, the upper-layer application customizes the underlying forwarding logic by calling the chip interface, which provides the programmable function of the network.
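The decoupling role of the chip interface layer can be sketched as an abstract interface with vendor-specific implementations behind it. The class and method names below are hypothetical and only illustrate the pattern; they are not the real SAI API, which is a C interface with its own object model.

```python
from abc import ABC, abstractmethod


class SwitchChipInterface(ABC):
    """Hypothetical unified chip interface seen by the NOS."""

    @abstractmethod
    def add_route(self, prefix: str, port: int) -> None:
        """Program a route for the prefix toward the given port."""

    @abstractmethod
    def routes(self) -> dict:
        """Return the programmed routes as {prefix: port}."""


class VendorAChip(SwitchChipInterface):
    """Assumed vendor driver that stores routes in a hash table."""

    def __init__(self):
        self._table = {}

    def add_route(self, prefix: str, port: int) -> None:
        self._table[prefix] = port

    def routes(self) -> dict:
        return dict(self._table)


class VendorBChip(SwitchChipInterface):
    """Assumed vendor driver that stores routes as an ordered entry list."""

    def __init__(self):
        self._entries = []

    def add_route(self, prefix: str, port: int) -> None:
        # Replace any existing entry for the same prefix.
        self._entries = [(p, q) for p, q in self._entries if p != prefix]
        self._entries.append((prefix, port))

    def routes(self) -> dict:
        return dict(self._entries)


def install_default_routes(chip: SwitchChipInterface) -> None:
    """Upper-layer application code: identical for every chip."""
    chip.add_route("10.0.0.0/24", 1)
    chip.add_route("10.0.1.0/24", 2)


for chip in (VendorAChip(), VendorBChip()):
    install_default_routes(chip)
    print(type(chip).__name__, chip.routes())
```

The upper-layer function `install_default_routes` never refers to a concrete chip class, so swapping the underlying hardware only requires a new implementation of the interface.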

Hardware and software levels

The hardware forwarding layer usually includes the following types of devices: 1) Switching chips: forward data; 2) CPU chips: mainly control the operation of the system; 3) Network cards: provide CPU-side management functions; 4) Storage devices: include memory, hard disks, etc.; 5) Peripheral hardware: includes fans, power supplies, etc. Among them, the switching chip is responsible for switching and forwarding the underlying data packets and is the core component of the switch.

According to Crehan Research, purchases of white-box switches by Amazon, Google, and Facebook in 2018 exceeded two-thirds of the total white-box switch market, although the overall adoption of white-box switches in data center switching was only around 20%.

However, because Amazon, Google, and Facebook tend to adopt these devices early in pursuit of newer and faster network speeds, the white-box switch market is expected to continue to grow. Almost all of Google’s 400GbE data centers today are powered by white-box switches.

Packaging and electrical standards for 400G optical modules have been released, and 400G optical modules can be adapted to a variety of application scenarios. Two main bodies formulate optical module standards: IEEE and the MSAs.

An MSA (Multi-Source Agreement) is an industry standard formulated by representative manufacturers for a specific field. For example, in the field of optical modules, there are packaging standards such as the SFF MSAs, and implementation standards for 100G optical modules such as the 100G QSFP28 PSM4 MSA and the 100G QSFP28 CWDM4 MSA.

For 400G optical modules, the relevant MSAs mainly include 400G QSFP-DD, 400G OSFP, and 400G CFP8, which cover packaging, and 400G QSFP-DD CWDM8, which covers the transmission mode. These standards have been formulated and released.

FiberMall’s QSFP-DD-400G-LR4

In addition, the IEEE 802.3 series of standards defines the physical layer and the medium access control (MAC) sublayer of the data link layer for wired networks. For 400G optical modules, the relevant parts are the definitions of the various physical medium dependent (PMD) interfaces.

The release of these standards has laid the foundation for the industry to promote the commercial use of 400G optical modules. At the same time, the wide range of standards helps 400G optical modules adapt to a variety of application scenarios with different requirements for distance, number of optical fibers, single-wavelength rate, and so on.
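The trade-off between lane count and single-wavelength rate can be checked with simple arithmetic: each 400G variant reaches the same aggregate rate with a different number of lanes. The sketch below uses nominal per-lane rates for a few common variants (SR8 and CWDM8 with eight 50G lanes, DR4 and FR4 with four 100G lanes). The dictionary and function names are illustrative, and the figures ignore encoding and FEC overhead.

```python
# Nominal (lanes, per-lane rate in Gb/s) for some 400G module types.
MODULE_LANES = {
    "400G SR8": (8, 50),
    "400G CWDM8": (8, 50),
    "400G DR4": (4, 100),
    "400G FR4": (4, 100),
}


def aggregate_rate_gbps(lanes: int, per_lane_gbps: int) -> int:
    """Aggregate nominal rate is lane count times per-lane rate."""
    return lanes * per_lane_gbps


for name, (lanes, rate) in MODULE_LANES.items():
    total = aggregate_rate_gbps(lanes, rate)
    print(f"{name}: {lanes} x {rate}G = {total}G")
```

Fewer, faster lanes reduce the number of fibers or wavelengths needed, at the cost of more demanding optics per lane.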
