FiberMall analyzes why data center switches need liquid cooling from two perspectives, energy policy and chip cooling demand; compares the main liquid cooling solutions; and reviews Ruijie's research and development experience and achievements with cold plate and immersion liquid cooled switches.

With the growth of Internet, cloud computing, and big data services, the total energy consumption of data centers keeps rising, and their energy efficiency is receiving more and more attention. According to statistics, the average Power Usage Effectiveness (PUE) of data centers is 1.49, well above the requirement that new large data centers achieve a PUE below 1.25.

Reducing PUE has become urgent. How can network equipment manufacturers significantly cut power consumption while maintaining high performance? Because it strongly affects both performance and power consumption, the cooling system has become the focus of data center upgrades, and thanks to its distinct advantages, liquid cooling is gradually replacing traditional air cooling as the mainstream cooling solution.

Understanding Liquid Cooled Switches: A Core Definition

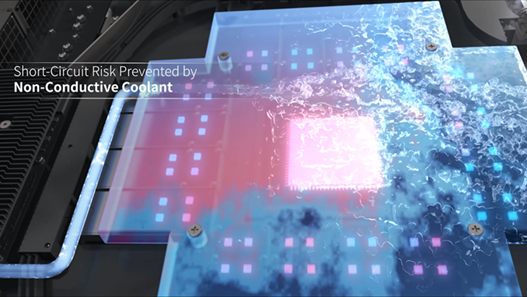

A liquid cooled switch is an advanced networking device designed for data centers, utilizing liquid-based cooling systems to dissipate heat more efficiently than traditional air-cooled alternatives. In essence, liquid cooled technology circulates coolants—such as water or specialized fluids—directly or indirectly over high-heat components like chips and processors, ensuring optimal performance under heavy loads.

This innovation addresses the growing demands of modern data centers, where escalating power consumption from AI, cloud computing, and big data requires superior thermal management. By integrating liquid cooled mechanisms, switches can handle higher bandwidths, like 51.2Tbps, without compromising reliability. FiberMall’s expertise in optical-communication solutions makes us a go-to source for implementing liquid cooled switches that align with energy-efficient policies and cutting-edge chip designs.

Energy Consumption and Policy Perspective

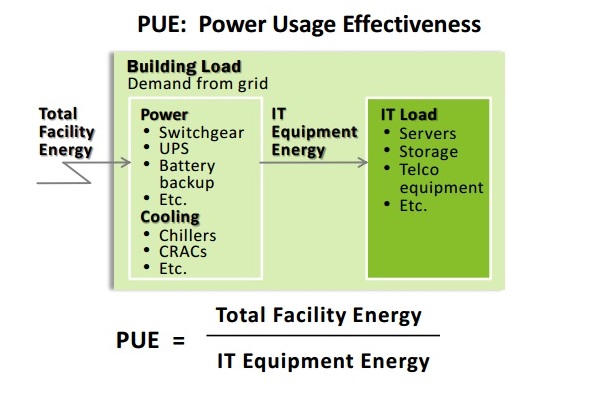

PUE is the ratio of a data center's total energy consumption to the energy consumed by its IT equipment. The closer PUE is to 1, the less energy non-IT equipment consumes, and the more energy-efficient and greener the data center is.

Figure 1. The PUE Metric
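As a quick illustration of the definition, the short Python sketch below computes PUE from two hypothetical energy totals and shows how much non-IT energy each kWh of IT load carries at the 1.49 average and at the 1.25 target mentioned earlier. The function names and the facility figures are illustrative assumptions, not data from any real site.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_per_it_kwh(pue_value: float) -> float:
    """Non-IT energy (cooling, power distribution, lighting) spent per kWh of IT load."""
    return pue_value - 1.0


if __name__ == "__main__":
    # Hypothetical facility: 1,490 MWh total, 1,000 MWh delivered to IT equipment.
    print(f"PUE = {pue(1_490_000, 1_000_000):.2f}")
    for target in (1.49, 1.25):
        print(f"PUE {target}: {overhead_per_it_kwh(target):.2f} kWh non-IT per kWh IT")
```

Moving from 1.49 to 1.25 cuts the non-IT overhead from 0.49 to 0.25 kWh per kWh of IT load, roughly halving it.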

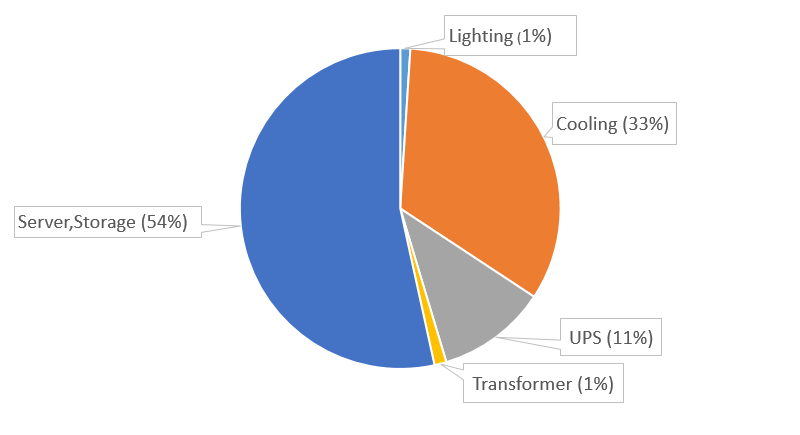

FiberMall found that the cooling system accounts on average for as much as 33% of a data center's energy consumption, close to one-third of the total. This is because traditional data centers rely on air cooling, and air has a very low specific heat capacity. Fans inside the equipment drive air past the heat sinks to carry away the heat that the CPU and other heat sources conduct into them, and the heated air is then cooled by fan coil heat exchangers or air conditioning refrigeration. This dependence on air as the cooling medium is the fundamental limitation of air cooling. Improving the energy efficiency of the cooling system has therefore become a key technology challenge for equipment manufacturers under the new policy environment.

Figure 2. Data Center Energy Consumption Composition
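A small arithmetic check, sketched in Python, connects the 33% cooling share to the PUE figures above. If cooling alone uses a fraction of total energy, IT equipment can receive at most the remainder, which puts a floor under PUE; conversely, a PUE target caps the share of all non-IT loads combined. The helper names are hypothetical and the model ignores every non-IT load other than cooling, so real PUE would be at least this high.

```python
def pue_lower_bound(cooling_share: float) -> float:
    """Smallest possible PUE when cooling alone consumes `cooling_share` of total energy.

    IT energy is at most (1 - cooling_share) of the total, so
    PUE = total / IT >= 1 / (1 - cooling_share). Other non-IT loads push it higher.
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in [0, 1)")
    return 1.0 / (1.0 - cooling_share)


def max_non_it_share(target_pue: float) -> float:
    """Largest fraction of total energy that all non-IT loads may use to meet target_pue."""
    if target_pue < 1.0:
        raise ValueError("PUE cannot be below 1")
    return 1.0 - 1.0 / target_pue


print(f"cooling at 33% of total -> PUE >= {pue_lower_bound(0.33):.2f}")
print(f"PUE 1.25 -> all non-IT loads <= {max_non_it_share(1.25):.0%} of total")
```

With cooling at 33%, PUE cannot fall below about 1.49, in line with the reported average; meeting 1.25 requires all non-IT loads together to stay at or below 20% of total energy.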

Chip Cooling Demand Perspective

As switch chips evolve, advanced process nodes (such as 5nm) effectively reduce power consumption per unit of computing power. However, as switching chip bandwidth increases to 51.2Tbps, the total power consumption of a single chip has risen to about 900W. Chip heat dissipation has therefore become one of the hardest problems in the hardware design of the whole device.

Although current air cooling systems can still cope, once the chip heat flux (the energy flow per unit area per unit time) exceeds 100W/cm², problems arise one after another:

First, further reducing the thermal resistance of the heat sink runs into a bottleneck. To remove nearly a kilowatt of heat from a small chip, the heat sink architecture must have a lower total thermal resistance, which means that better thermal conductivity and better heat sink design are needed for heat sink capacity to keep pace with rising chip power. However, high-performance air-cooled heat sinks already rely heavily on heat pipes, vapor chambers (VC), and 3D VCs, and these designs are close to the limit of what optimization can achieve.

Second, because switch height is constrained, the heat dissipation problem is hard to solve by enlarging the heat sink. Heat leaving the chip passes through the chip package, thermal interface materials, vapor chamber, solder, heat pipes, and so on, but ultimately gets stuck at the interface between the solid fins and the air. Because the convective heat transfer coefficient between fins and air is low, thermal design engineers have had to enlarge heat sinks again and again to provide the surface area that high-power chips require, almost filling the available space inside servers and switches. In short, the ultimate bottleneck of air cooling is the fin structure's rigid demand for space. In addition, to increase airflow, fan speeds have reached 30,000 RPM, and the resulting noise, comparable to an aircraft taking off, is a serious problem for operations and maintenance staff.

Finally, as chip power consumption keeps rising, air cooling is approaching its capacity limit. Even if air-cooled heat sinks can handle today's switches, once 102.4/204.8Tbps becomes mainstream and chip power rises further, they will eventually be unable to cope. Higher-performance liquid cooling has therefore emerged for the next generation of IT equipment, and the industry broadly agrees that over the next 5-10 years liquid cooling will gradually replace air cooling in data centers.
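To make the ~900W chip power and the 100W/cm² threshold concrete, the Python sketch below computes average heat flux over a die and the total thermal resistance budget implied by the simple model T_junction = T_inlet + P × R_total. Only the 900W figure comes from the text; the die area, junction limit, and inlet temperature are hypothetical placeholders chosen for illustration, and the doubled-power case merely stands in for a future higher-bandwidth chip.

```python
def heat_flux_w_per_cm2(power_w: float, die_area_cm2: float) -> float:
    """Average heat flux: chip power divided by die area."""
    if die_area_cm2 <= 0:
        raise ValueError("die area must be positive")
    return power_w / die_area_cm2


def max_thermal_resistance_c_per_w(t_junction_max_c: float, t_inlet_c: float, power_w: float) -> float:
    """Largest total junction-to-inlet thermal resistance (degC/W) that keeps
    T_junction = T_inlet + P * R_total at or below t_junction_max_c."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return (t_junction_max_c - t_inlet_c) / power_w


# Only the ~900 W chip power comes from the text; the rest are hypothetical.
CHIP_POWER_W = 900.0
DIE_AREA_CM2 = 8.0   # hypothetical die area
T_J_MAX_C = 105.0    # hypothetical junction temperature limit
T_INLET_C = 35.0     # hypothetical inlet air temperature

flux = heat_flux_w_per_cm2(CHIP_POWER_W, DIE_AREA_CM2)
print(f"heat flux: {flux:.1f} W/cm^2")
for power in (CHIP_POWER_W, 2 * CHIP_POWER_W):
    budget = max_thermal_resistance_c_per_w(T_J_MAX_C, T_INLET_C, power)
    print(f"{power:6.0f} W -> thermal resistance budget {budget:.3f} degC/W")
```

Under these assumptions the die runs above 100W/cm², and the budget shrinks in inverse proportion to power: doubling chip power halves the thermal resistance the entire heat path (package, TIM, vapor chamber, fins, air) may have.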

Key Benefits of Liquid Cooled Switches

Adopting liquid cooled switches offers transformative advantages for data center operators aiming to reduce PUE and enhance sustainability. Here are the primary benefits:

- Superior Heat Dissipation: Liquid cooled systems can manage heat fluxes exceeding 100W/cm², far outperforming air cooling by using fluids with higher specific heat capacity, leading to cooler operation and extended hardware lifespan.

- Energy Efficiency Gains: By lowering overall power usage—potentially reducing cooling energy consumption by up to 40%—liquid cooled switches help achieve PUE values closer to 1, aligning with global green data center mandates.

- Noise Reduction and Space Savings: Unlike noisy fans in air-cooled setups, liquid cooled designs operate quietly and require less physical space, ideal for dense enterprise networks and cloud environments.

- Scalability for AI and High-Performance Computing: In AI-enabled networks, liquid cooled switches support intensive workloads without thermal throttling, ensuring seamless data flow in wireless systems and access networks.

- Cost-Effectiveness Over Time: Initial investments in liquid cooled technology yield long-term savings through reduced maintenance and energy bills, making it a value-driven choice.

Classification, advantages, and disadvantages of liquid cooling technology

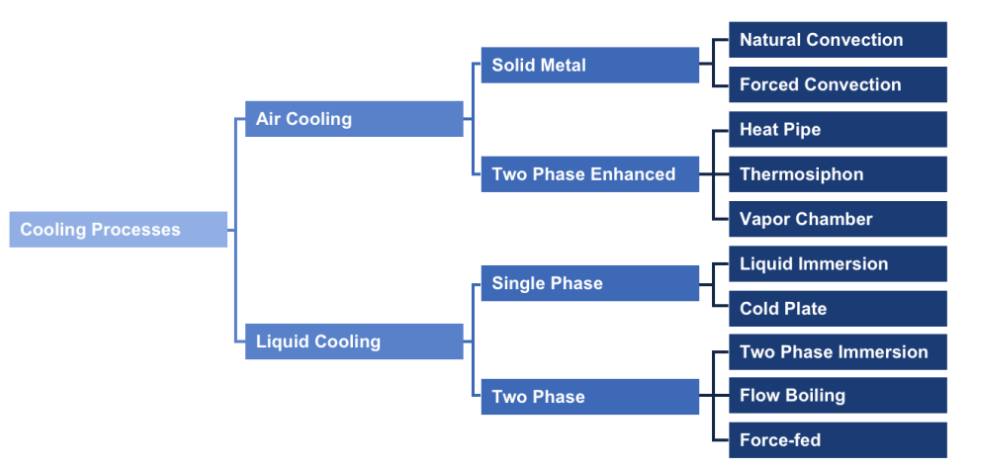

Current liquid cooling technology falls into two main categories: single-phase and two-phase liquid cooling. In the COBO white paper “Design Considerations of Optical Connectivity in a Co-Packaged or On-Board Optics Switch”, Ruijie comprehensively reviewed and classified the cooling system types used for IT equipment in data centers (Figure 3).

In single-phase liquid cooling, the coolant remains liquid throughout the heat dissipation cycle and carries heat away through its high specific heat capacity.

In two-phase liquid cooling, the coolant changes phase during heat dissipation and removes heat from the equipment through its very high latent heat of vaporization.

By comparison, single-phase liquid cooling is less complex and easier to implement, and its heat dissipation capacity is sufficient for IT devices in today's data centers. It is therefore the best choice at the current stage.

Figure 3. Main heat dissipation modes of IT devices in the data center
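The specific heat argument behind single-phase cooling can be illustrated with the sensible-heat balance P = ρ · V̇ · c_p · ΔT. The Python sketch below estimates the volumetric flow of air and of water needed to carry away one 900W chip's heat; the fluid properties are approximate room-temperature values and the 10 K temperature rise is a hypothetical assumption. It covers single-phase (sensible) heat transfer only and does not model two-phase boiling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float         # approximate, near room temperature
    specific_heat_j_kg_k: float  # approximate, near room temperature


def volumetric_flow_l_per_min(power_w: float, delta_t_k: float, fluid: Coolant) -> float:
    """Flow needed to carry power_w away with a delta_t_k temperature rise,
    from the sensible-heat balance P = rho * V_dot * c_p * dT."""
    m3_per_s = power_w / (fluid.density_kg_m3 * fluid.specific_heat_j_kg_k * delta_t_k)
    return m3_per_s * 1000.0 * 60.0  # m^3/s -> L/min


AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 997.0, 4180.0)

POWER_W = 900.0   # one 51.2 Tbps switch chip, per the text
DELTA_T_K = 10.0  # hypothetical allowed coolant temperature rise

for fluid in (AIR, WATER):
    flow = volumetric_flow_l_per_min(POWER_W, DELTA_T_K, fluid)
    print(f"{fluid.name:>5}: {flow:10.1f} L/min")
```

Under these assumptions air needs several thousand liters per minute while water needs little more than one, a difference of roughly three orders of magnitude, which is why even a small liquid loop can replace large fans and fin stacks.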

Single-phase liquid cooling is further divided into cold plate liquid cooling and immersion liquid cooling. In cold plate liquid cooling, a liquid cold plate is fixed onto the main heat-generating devices of the equipment, and liquid flowing through the plate carries the heat away. It has already been applied in several supercomputing data centers, and OCP has promoted deployment of the Manifold architecture standard through Open Rack V3.0.

Immersion liquid cooling submerges the whole device directly in coolant, relying on natural or forced circulation of the liquid to carry away the heat generated by servers and other equipment. It has been widely used in digital currency mining and supercomputing, has been a hot topic at OCP, ODCC, and other organizations in recent years, and a large cloud computing company has already deployed it at scale in its data centers.

The advantages of immersion liquid cooling include:

- Because the coolant directly contacts the equipment, heat dissipation capacity is stronger and the risk of device overheating is lower.

- Immersion liquid cooling equipment does not require fans, resulting in less vibration and longer hardware life.

- Immersion liquid cooling allows a higher chilled water supply temperature on the equipment room side, which makes it easier to reject heat on the outdoor side. As a result, site selection is no longer as constrained by region and climate as it was in the air cooling era.

Of course, immersion liquid cooling also has disadvantages, including high cost, strict safety requirements, and high floor load-bearing requirements.

The advantages of cold plate liquid cooling are as follows:

Only minor changes to the equipment room are required: just the racks, Coolant Distribution Units (CDU), and water supply systems need to be modified. Moreover, cold plate liquid cooling can use a wider range of coolants, in much smaller quantities than immersion cooling, so the initial investment is lower. In addition, the cold plate liquid cooling supply chain is more mature and the market more receptive. However, cold plates also have limitations, most notably that liquid lines and connectors may leak, causing equipment damage and service interruption.

Liquid Cooled vs. Air Cooled Switches: A Detailed Comparison

When evaluating cooling options for data center switches, understanding the differences between liquid cooled and air-cooled systems is crucial. Here’s a side-by-side comparison:

| Aspect | Liquid Cooled Switches | Air Cooled Switches |
|---|---|---|
| Heat Management | Excellent; handles high heat flux efficiently with coolants. | Limited; struggles beyond 100W/cm², reliant on fans. |
| Energy Efficiency | High; reduces PUE by minimizing non-IT energy use. | Moderate; cooling accounts for ~33% of total consumption. |
| Noise Levels | Low; minimal fan usage. | High; constant fan operation. |
| Scalability | Ideal for AI, cloud, and big data applications. | Suitable for lower-bandwidth setups but limits growth. |
| Initial Cost | Higher due to advanced components. | Lower, but higher long-term operational costs. |
| Maintenance | Requires fluid monitoring but less frequent. | Fan replacements common; dust buildup issues. |

For organizations transitioning to liquid cooled switches, FiberMall offers expert guidance and products that bridge the gap between traditional air cooling and innovative liquid cooled solutions, ensuring a smooth upgrade.

Research and development experience with immersion liquid cooled switches

In recent years, major companies have explored immersion liquid cooled data centers, and Ruijie Network has accumulated considerable experience in developing immersion liquid cooled switches, mainly in four areas: structural appearance, fan removal, material compatibility, and signal integrity (SI):

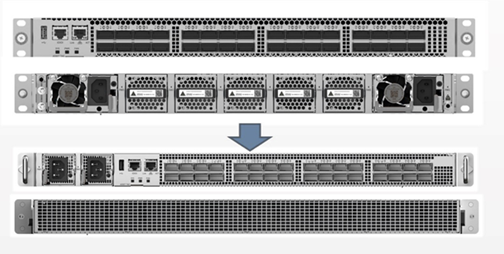

- Structural appearance

The biggest change is that the power supplies move from the rear panel of the switch to the front panel. To fit two power supplies alongside the panel interfaces, the switch width increases from 19 inches to 21-23 inches, and the power routing layout of the printed circuit board (PCB) changes accordingly.

Figure 4. Changes in the appearance of the switch

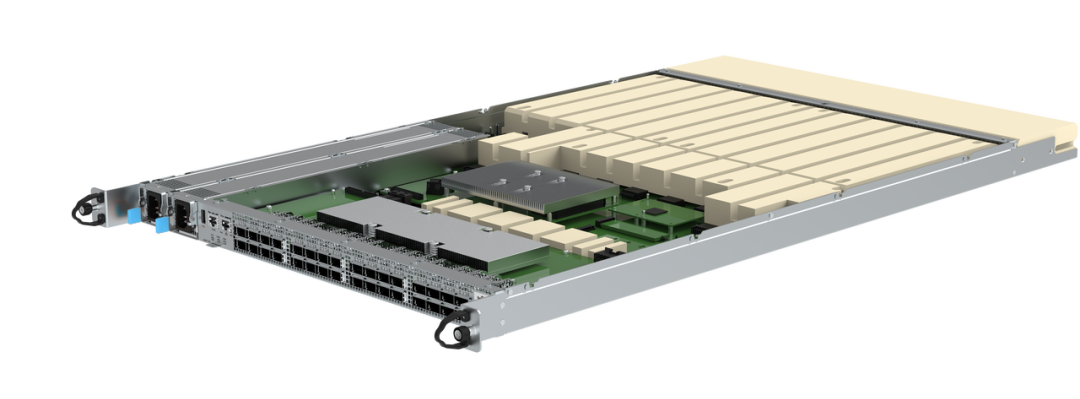

Because coolant is expensive, the spare space in the switch is filled with filler blocks that displace coolant, minimizing the total amount of coolant needed in the customized, server-oriented immersion tank. In Figure 5, the yellow blocks are the filler used to displace liquid.

Figure 5. Switch structure evolution
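The filler idea is simple volume accounting: coolant only has to fill whatever space in the tank slot is not already occupied by solid hardware or filler. The Python sketch below shows that accounting for one switch slot. All volumes are hypothetical, and the density used to convert liters to kilograms is an approximate value for the 3M FC-40 coolant that Ruijie's switches support, as mentioned later in the article.

```python
FC40_DENSITY_KG_PER_L = 1.85  # approximate density of 3M FC-40 near room temperature


def coolant_needed_l(slot_volume_l: float, solid_volume_l: float, filler_volume_l: float) -> float:
    """Coolant required to fill one tank slot around the switch's solid
    hardware and any filler blocks."""
    remaining = slot_volume_l - solid_volume_l - filler_volume_l
    if remaining < 0:
        raise ValueError("hardware plus filler exceeds the slot volume")
    return remaining


# Hypothetical slot, hardware, and filler volumes, for illustration only.
SLOT_L, SOLID_L, FILLER_L = 20.0, 8.0, 6.0

without_filler = coolant_needed_l(SLOT_L, SOLID_L, 0.0)
with_filler = coolant_needed_l(SLOT_L, SOLID_L, FILLER_L)
saved_kg = (without_filler - with_filler) * FC40_DENSITY_KG_PER_L
print(f"coolant without filler: {without_filler:.1f} L")
print(f"coolant with filler:    {with_filler:.1f} L")
print(f"coolant mass saved:     {saved_kg:.1f} kg")
```

Every liter of filler removes a liter of coolant from the bill of materials, so with a dense fluorocarbon coolant the saving per switch is measured in kilograms.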

- Fan removal

The structural changes also make it possible to eliminate fans entirely. Designers no longer need to design system fans for the switch, and they can choose fanless power supplies as well. This change not only lowers PUE but also significantly reduces noise in the server room.

- Material compatibility

Because immersion coolants fall mainly into two families, fluorocarbons and various oils, switch designers should pay attention to the following two points:

- Whether the optical devices are sealed. If they are not sealed and coolant leaks into them, contamination of the optical path may attenuate the signal and cause switch failure;

- Whether any device reacts physically or chemically with the coolant. If a reaction occurs, the material composition of some of the switch's original components will change, bringing risks such as altered insulation properties. Therefore, non-metallic structural parts, electrical parts, TIM materials, filler blocks, plastic handles, mounting ear assemblies, labels, glues, connectors, cables, and printed circuit boards (PCB) must all be compatible with the coolant.

- SI characteristics (Signal Integrity)

Because an immersion liquid cooled switch is in direct contact with the liquid, signal integrity is affected by the liquid, which imposes the following special requirements on the PCB:

(1) Avoid routing critical signals on surface layers where possible.

(2) Inner-layer signals are unaffected, and low-speed signals can be routed on surface layers without special attention.

(3) Where high-speed signals must be routed on surface layers, their impedance design must be adjusted to account for the coolant.

(4) In the fan-out regions of BGAs and connectors, minimize surface trace length.

(5) The loss design and impedance design of 25G and 50G SerDes links differ from traditional designs.
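Why surface traces are the concern can be seen from a first-order impedance estimate. A surface microstrip has part of its field above the board, normally in air; immersion replaces that air with coolant of higher permittivity, raising the effective permittivity and lowering impedance, whereas inner-layer striplines are fully enclosed by laminate, consistent with point (2). The Python sketch below uses the standard closed-form microstrip formulas for w/h ≥ 1 (as given in Pozar's microwave engineering text) and crudely substitutes the coolant permittivity for the "1" that represents air. That substitution is a rough approximation, not a field-solver result; the laminate permittivity and trace geometry are hypothetical, the coolant value is approximate, and dielectric loss is not modeled.

```python
import math


def eps_eff(eps_substrate: float, eps_above: float, w_over_h: float) -> float:
    """Effective permittivity of a surface microstrip.

    With eps_above = 1 this is the standard air-above formula. Substituting a
    liquid permittivity for the 1 is a crude first-order approximation.
    """
    fill = 1.0 / math.sqrt(1.0 + 12.0 / w_over_h)
    return (eps_substrate + eps_above) / 2 + (eps_substrate - eps_above) / 2 * fill


def microstrip_z0(w_over_h: float, eps_effective: float) -> float:
    """Characteristic impedance (ohm) of a microstrip with w/h >= 1."""
    if w_over_h < 1.0:
        raise ValueError("this closed form is for w/h >= 1")
    geometry = w_over_h + 1.393 + 0.667 * math.log(w_over_h + 1.444)
    return 120.0 * math.pi / (math.sqrt(eps_effective) * geometry)


EPS_LAMINATE = 3.7  # hypothetical low-loss PCB laminate
EPS_COOLANT = 1.9   # approximate value for a fluorocarbon coolant such as FC-40
W_OVER_H = 1.8      # hypothetical trace width / dielectric height

z_air = microstrip_z0(W_OVER_H, eps_eff(EPS_LAMINATE, 1.0, W_OVER_H))
z_liquid = microstrip_z0(W_OVER_H, eps_eff(EPS_LAMINATE, EPS_COOLANT, W_OVER_H))
print(f"surface trace in air:    {z_air:.1f} ohm")
print(f"surface trace in liquid: {z_liquid:.1f} ohm ({z_liquid / z_air - 1:+.1%})")
```

In this hypothetical case a trace designed near 56 ohms in air drops by a few percent once immersed, enough to matter for 25G/50G SerDes channels, which is why surface-layer high-speed routing needs its impedance retuned for the coolant.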

Research and development experience with cold plate liquid cooled switches

Ruijie Network has developed cold plate liquid cooled switches to suit the particular characteristics of silicon photonics technology. OBO (on-board optics) and NPO (near-packaged optics) technologies place the optical modules on the motherboard, as close as possible to the MAC chip. However, this concentrates the heat sources, and because device height is limited by the target high-density 1RU form factor, a traditional air-cooled heat sink can hardly solve the problem. Immersion liquid cooling, on the other hand, would pose serious challenges to sealing the optical links.

Figure 6. Switch structure evolution

To address this, Ruijie uses an integrated cold plate heat sink that covers both the MAC chip and the surrounding optical modules, with coolant flowing through channels in the plate to carry the heat away. To minimize the complexity and leakage risk of liquid piping, the device's other heat-generating components are still cooled by fans. This cold plate solution kills two birds with one stone: it meets the cooling requirements of high-power, high-density NPO/CPO heat sources and also allows the device to be an extremely thin 1RU.

Ruijie’s research and development achievements in liquid cooled switches

In 2019, Ruijie Network worked with a domestic Internet customer to deliver an immersion liquid cooled 32*100Gbps data center switch and a matching gigabit management network switch. In 2022, Ruijie Network began shipping 100/200/400G immersion liquid cooled switches and cold plate liquid cooled switches.

Ruijie Network has launched two commercial immersion liquid cooled switches: a 32-port 100G data center access switch and a 48-port 1G management network switch. Both switches are 21 inches wide and compatible with 3M FC-40 coolant, and their power supplies support 1+1 redundancy. Pluggable ABS+PC filler modules greatly reduce coolant cost; grooves in the modules help the liquid flow for heat dissipation, and the design cleverly balances buoyancy against gravity.
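The buoyancy point follows from density: plastics such as ABS and PC are far less dense than fluorocarbon coolants like FC-40, so a solid plastic filler module submerged in the tank experiences a net upward force. The Python sketch below estimates that force for a 1 L solid block. The FC-40 density is an approximate published value and the ABS+PC blend density is a hypothetical round figure; the sketch does not describe how Ruijie's module actually achieves its balance.

```python
G_M_PER_S2 = 9.81
FC40_DENSITY = 1850.0    # kg/m^3, approximate for 3M FC-40
ABS_PC_DENSITY = 1150.0  # kg/m^3, hypothetical value for an ABS+PC blend


def net_upward_force_n(volume_l: float, solid_density: float, fluid_density: float) -> float:
    """Buoyancy minus weight (N) for a fully submerged solid block.

    Positive means the block tends to float; negative means it sinks.
    Densities are in kg/m^3.
    """
    volume_m3 = volume_l / 1000.0
    return (fluid_density - solid_density) * volume_m3 * G_M_PER_S2


force = net_upward_force_n(1.0, ABS_PC_DENSITY, FC40_DENSITY)
print(f"net upward force on a 1 L solid ABS+PC block: {force:.2f} N")
```

A force of several newtons per liter means an unconstrained plastic filler would rise out of position, so the module's mass, geometry, and retention have to be designed with buoyancy in mind.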

At the Global OCP Summit in November 2021, Ruijie Network officially released a 64*400G cold plate liquid cooled NPO switch designed to meet the high reliability requirements of data centers and carrier networks.

Under the leadership of the OIF, Ruijie Network worked with many industry vendors to unveil a structural prototype of a 64*800G cold plate liquid cooled NPO switch at OFC 2022. The front panel supports 64 800G fiber connectors, each of which can also be split into two 400G ports for compatibility with 400G equipment. The number of external laser source modules has increased to 16. Thanks to a blind-mate design, eye injury from high-power laser light is avoided, further safeguarding operations and maintenance personnel. The switch chip and NPO modules are cooled by cold plates for efficient heat dissipation, which solves the problem of highly concentrated heat flux. Compared with a switch using traditional pluggable optical modules and air cooling, power consumption is greatly reduced.
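The port counts quoted in this section translate directly into front-panel capacity. The Python sketch below computes the aggregate bandwidth of each configuration mentioned; it shows that the 64*800G prototype sits in the same 51.2Tbps class as the ~900W switching chip discussed earlier, and that splitting each 800G port into two 400G ports changes the port count but not the total. The dictionary labels are just descriptive names for this illustration.

```python
def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total front-panel capacity in Tbps."""
    return ports * gbps_per_port / 1000


CONFIGS = {
    "32*100G immersion switch": (32, 100),
    "64*400G cold plate NPO switch": (64, 400),
    "64*800G cold plate NPO prototype": (64, 800),
    "64*800G split into 128*400G": (128, 400),
}

for name, (ports, speed) in CONFIGS.items():
    print(f"{name:34s} {aggregate_tbps(ports, speed):5.1f} Tbps")
```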

Frequently Asked Questions About Liquid Cooled Switches

Q: What makes a liquid cooled switch different from traditional switches?

A liquid cooled switch uses fluid circulation for cooling, offering better efficiency than air-based systems, especially in high-power environments like data centers.

Q: Are liquid cooled switches suitable for small enterprise networks?

Yes, liquid cooled technology scales well, providing energy savings and reliability even in smaller setups, though it’s most beneficial in high-density applications.

Q: How does liquid cooled technology impact data center PUE?

Liquid cooled switches can significantly lower PUE by reducing cooling energy needs, helping meet policies requiring values under 1.25.

Q: What are the main types of liquid cooled switches?

Common types include immersion and cold-plate liquid cooled designs, each with unique advantages in heat transfer and compatibility.

Q: Where can I find reliable liquid cooled switch solutions?

FiberMall provides top-tier liquid cooled switch options as part of our optical-communication portfolio, designed for global data centers and AI networks.

Related Products:

-

OSFP-800G85F-MPO60M 800G OSFP SR8 MPO-12 Female Plug Pigtail 60m Immersion Liquid Cooling Optical Transceivers

$2400.00

OSFP-800G85F-MPO60M 800G OSFP SR8 MPO-12 Female Plug Pigtail 60m Immersion Liquid Cooling Optical Transceivers

$2400.00

-

OSFP-800G85M-MPO60M 800G OSFP SR8 MPO-12 Male Plug Pigtail 60m Immersion Liquid Cooling Optical Transceivers

$2400.00

OSFP-800G85M-MPO60M 800G OSFP SR8 MPO-12 Male Plug Pigtail 60m Immersion Liquid Cooling Optical Transceivers

$2400.00

-

OSFP-800G85F-MPO5M 800G OSFP SR8 MPO-12 Female Plug Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$2330.00

OSFP-800G85F-MPO5M 800G OSFP SR8 MPO-12 Female Plug Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$2330.00

-

OSFP-800G85M-MPO5M 800G OSFP SR8 MPO-12 Male Plug Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$2330.00

OSFP-800G85M-MPO5M 800G OSFP SR8 MPO-12 Male Plug Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$2330.00

-

OSFP-400GF-MPO1M 400G OSFP SR4 MPO-12 Female Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$1950.00

OSFP-400GF-MPO1M 400G OSFP SR4 MPO-12 Female Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$1950.00

-

OSFP-400GM-MPO1M 400G OSFP SR4 MPO-12 Male Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$1950.00

OSFP-400GM-MPO1M 400G OSFP SR4 MPO-12 Male Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$1950.00

-

OSFP-400GF-MPO3M 400G OSFP SR4 MPO-12 Female Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$1970.00

OSFP-400GF-MPO3M 400G OSFP SR4 MPO-12 Female Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$1970.00

-

OSFP-400GM-MPO3M 400G OSFP SR4 MPO-12 Male Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$1970.00

OSFP-400GM-MPO3M 400G OSFP SR4 MPO-12 Male Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$1970.00

-

200G QSFP56 SR4 MPO-12 Female Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$600.00

200G QSFP56 SR4 MPO-12 Female Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$600.00

-

200G QSFP56 SR4 MPO-12 Male Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$600.00

200G QSFP56 SR4 MPO-12 Male Plug Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$600.00

-

200G QSFP56 SR4 MPO-12 Female Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$620.00

200G QSFP56 SR4 MPO-12 Female Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$620.00

-

200G QSFP56 SR4 MPO-12 Male Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$620.00

200G QSFP56 SR4 MPO-12 Male Plug Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$620.00

-

100G QSFP28 SR 850nm MPO Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$86.00

100G QSFP28 SR 850nm MPO Pigtail 1m Immersion Liquid Cooling Optical Transceivers

$86.00

-

100G QSFP28 SR 850nm MPO Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$91.00

100G QSFP28 SR 850nm MPO Pigtail 3m Immersion Liquid Cooling Optical Transceivers

$91.00

-

100G QSFP28 SR 850nm MPO Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$95.00

100G QSFP28 SR 850nm MPO Pigtail 5m Immersion Liquid Cooling Optical Transceivers

$95.00

-

100G QSFP28 SR 850nm MPO Pigtail 7m Immersion Liquid Cooling Optical Transceivers

$100.00

100G QSFP28 SR 850nm MPO Pigtail 7m Immersion Liquid Cooling Optical Transceivers

$100.00