The explosive growth of Internet data has put great pressure on the processing capacity of data centers.

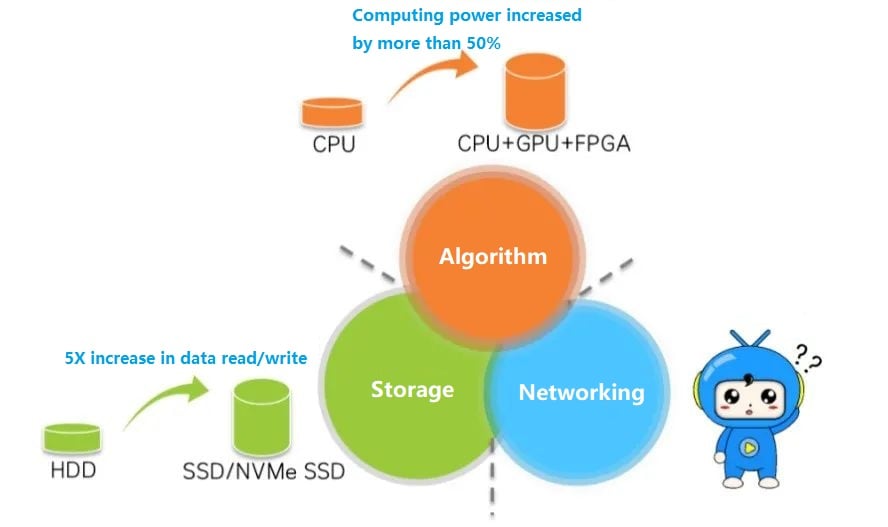

Computing, storage, and networking are the three forces driving the development of data centers.

With the development of CPUs, GPUs, and FPGAs, computing power has greatly improved. In storage, the introduction of solid-state drives (SSDs) has greatly reduced data access latency.

However, networking has clearly lagged behind: its high transmission latency is gradually becoming the bottleneck of data center performance.

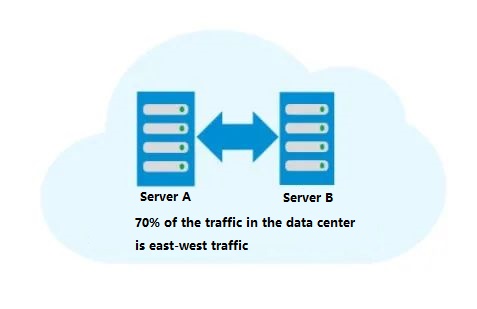

In a data center, 70% of the traffic is east-west traffic (traffic between servers). Most of it carries the data exchanged during high-performance distributed parallel computing, and it is transmitted over TCP/IP networks.

If the TCP/IP transmission rate between servers increases, the performance of the data center will also increase.

TCP/IP transfer between servers

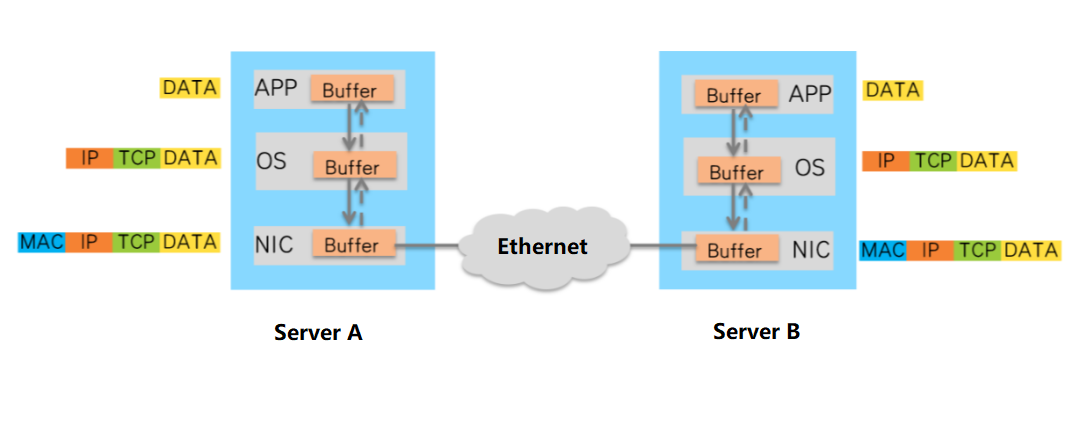

The process for server A to send data to server B in the data center is as follows:

- The CPU copies the data from A's application (APP) buffer to the operating system (OS) buffer.

- The CPU adds TCP and IP headers to the data in the OS buffer.

- The data, now carrying TCP and IP headers, is passed to the NIC, which adds the Ethernet header.

- The NIC sends the packet over the Ethernet network to the NIC of server B.

- Server B's NIC strips the Ethernet header and transfers the packet to the OS buffer.

- The CPU strips the TCP and IP headers in the OS buffer.

- The CPU copies the resulting data to the APP buffer.
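To make the cost of these steps concrete, here is a minimal Python model of the seven steps. It is a sketch, not a network stack: buffers are plain `bytearray`s, the headers are zero-filled placeholders using the minimum Ethernet (14-byte), IPv4 (20-byte) and TCP (20-byte) header sizes, and the hypothetical `CpuCost` counter only tallies the copies and header operations that the steps assign to the CPU. Work done by the NIC is treated as free.

```python
from dataclasses import dataclass

# Minimum header sizes in bytes; header contents are zero-filled placeholders.
ETH_HDR, IP_HDR, TCP_HDR = 14, 20, 20


@dataclass
class CpuCost:
    bytes_copied: int = 0  # payload bytes copied by the CPU
    header_ops: int = 0    # TCP/IP header add/strip operations done by the CPU


def send_over_tcp_ip(app_buffer: bytes, cost: CpuCost) -> bytes:
    os_buffer = bytearray(app_buffer)               # 1. CPU copies APP buffer -> OS buffer
    cost.bytes_copied += len(app_buffer)
    segment = bytes(IP_HDR + TCP_HDR) + os_buffer   # 2. CPU adds IP and TCP headers
    cost.header_ops += 1
    return bytes(ETH_HDR) + segment                 # 3-4. NIC adds Ethernet header, sends


def receive_over_tcp_ip(frame: bytes, cost: CpuCost) -> bytearray:
    os_buffer = frame[ETH_HDR:]                     # 5. NIC strips Ethernet header -> OS buffer
    payload = os_buffer[IP_HDR + TCP_HDR:]          # 6. CPU strips IP and TCP headers
    cost.header_ops += 1
    app_buffer = bytearray(payload)                 # 7. CPU copies data -> APP buffer
    cost.bytes_copied += len(payload)
    return app_buffer


cost = CpuCost()
message = b"hello from server A"
frame = send_over_tcp_ip(message, cost)
received = receive_over_tcp_ip(frame, cost)
print(received == message, len(frame), cost)
# True 73 CpuCost(bytes_copied=38, header_ops=2)
```

Even for a 19-byte message, the CPU copies every payload byte twice and handles headers on both servers; for bulk transfers these copies grow in proportion to the amount of data moved.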

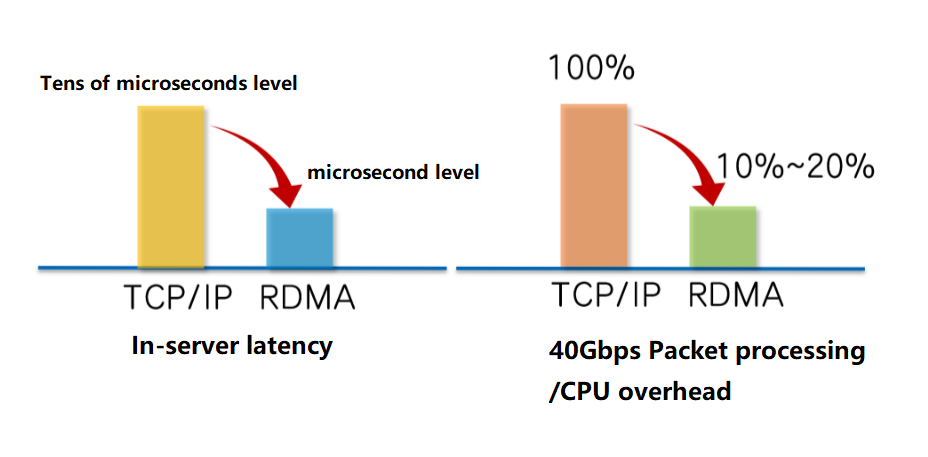

As this process shows, data is copied several times between buffers in each server, and the operating system must add or remove the TCP and IP headers. These operations not only increase data transmission latency but also consume a lot of CPU resources, so they cannot meet the requirements of high-performance computing.

So how can we build a high-performance data center network with high throughput, ultra-low latency, and low CPU overhead?

RDMA technology can do that.

What is RDMA

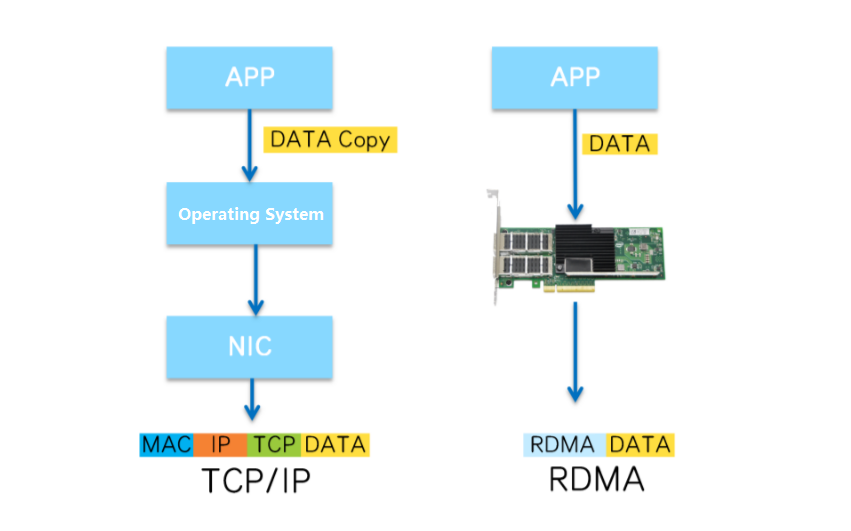

Remote Direct Memory Access (RDMA) is a memory access technology that allows a server to read and write the memory of another server at high speed, without time-consuming processing by the operating system or CPU.

RDMA is not a new technology; it has long been widely used in high-performance computing (HPC). As data centers demand ever higher bandwidth and lower latency, RDMA has gradually been adopted in data center scenarios that require high performance.

For example, in 2021 the shopping festival transaction volume of a large online mall set a new record of more than 500 billion yuan, an increase of nearly 10% over 2020. Behind such a huge transaction volume lies massive data processing. The online mall used RDMA technology to support a high-performance network and keep the shopping festival running smoothly.

Let’s take a look at some of RDMA’s tricks for low latency.

RDMA transfers application data directly from server memory to an intelligent network interface card (INIC) that implements the RDMA protocol in hardware. The INIC hardware performs the RDMA packet encapsulation, freeing the operating system and CPU.

This gives RDMA two major advantages:

- Zero Copy: Data no longer has to be copied into the operating system kernel, and the CPU no longer processes packet headers, which significantly reduces transmission latency.

- Kernel Bypass and Protocol Offload: The operating system kernel is not involved and there is no complicated header logic in the data path. This reduces latency and greatly saves CPU resources.
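The sketch below models what zero copy and kernel bypass mean on the receiving side, under clearly stated assumptions: `MemoryRegion`, `ModelRnic`, `register` and `handle_write` are hypothetical names invented for this illustration, not the verbs API that real RDMA programs use (libibverbs functions such as `ibv_reg_mr` and `ibv_post_send`). The target server registers a buffer once and shares its remote key (`rkey`) with the peer; afterwards the modeled NIC checks the key and bounds and writes incoming data straight into that application buffer, so no OS buffer and no CPU copy appear on the path.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """Application buffer registered with the NIC (hypothetical model)."""
    buffer: bytearray
    rkey: int = field(default_factory=lambda: secrets.randbits(32))


class ModelRnic:
    """Toy stand-in for an RDMA-capable NIC; not a real RDMA API."""

    def __init__(self):
        self.regions = {}  # rkey -> MemoryRegion

    def register(self, size):
        """Register a buffer once; its rkey is then shared with the peer."""
        mr = MemoryRegion(bytearray(size))
        self.regions[mr.rkey] = mr
        return mr

    def handle_write(self, rkey, offset, data):
        """Place incoming data directly into a registered application buffer.

        In this model, this method stands for work done by NIC hardware:
        no OS buffer is involved and no CPU-side header handling happens.
        """
        mr = self.regions.get(rkey)
        if mr is None or offset < 0 or offset + len(data) > len(mr.buffer):
            raise PermissionError("invalid rkey or out-of-bounds access")
        mr.buffer[offset:offset + len(data)] = data


# Server B registers 64 bytes and advertises target.rkey to server A.
nic_b = ModelRnic()
target = nic_b.register(64)

# Server A's application buffer is read in place (memoryview: no copy)
# and written into B's registered buffer.
app_buffer_a = bytearray(b"order #42 committed")
nic_b.handle_write(target.rkey, 0, memoryview(app_buffer_a))
print(bytes(target.buffer[:len(app_buffer_a)]))

try:
    nic_b.handle_write(target.rkey ^ 1, 0, memoryview(b"x"))  # wrong key
except PermissionError as err:
    print("rejected:", err)
```

The key check is what keeps one-sided access safe: a remote peer can only touch memory that its owner explicitly registered and advertised.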

Three major RDMA networks

At present, there are three types of RDMA networks, namely InfiniBand, RoCE (RDMA over Converged Ethernet), and iWARP (RDMA over TCP).

RDMA was originally exclusive to the InfiniBand network architecture, which guarantees reliable transport at the hardware level, while RoCE and iWARP are Ethernet-based RDMA technologies.

InfiniBand

- InfiniBand is a network designed specifically for RDMA.

- It uses cut-through forwarding to reduce forwarding latency.

- A credit-based flow control mechanism ensures no packet loss.

- It requires dedicated InfiniBand network adapters, switches, and routers, which makes it the most expensive network to build.
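The credit idea can be illustrated with a toy, single-link simulation. The receiver starts by granting the sender one credit per free buffer slot, each packet sent consumes a credit, and each slot the receiver frees returns a credit. This is a simplification under stated assumptions: real InfiniBand credits are tracked per virtual lane, are exchanged in link-level flow-control packets, and take time to travel back, none of which this sketch models. The function name `simulate` and its parameters are hypothetical.

```python
def simulate(ticks, buffer_slots, send_rate, drain_rate, credit_based):
    """Return (delivered, dropped) for one sender feeding one receiver buffer.

    Toy model: time advances in ticks, and a credit returns to the sender in
    the same tick that the receiver frees a buffer slot.
    """
    credits = buffer_slots   # one initial credit per receive buffer slot
    queue = 0                # packets currently held in the receiver's buffer
    delivered = dropped = 0
    for _ in range(ticks):
        # Sender: send a burst, capped by the available credits if enabled.
        burst = min(send_rate, credits) if credit_based else send_rate
        if credit_based:
            credits -= burst
        for _ in range(burst):
            if queue < buffer_slots:
                queue += 1
            else:
                dropped += 1     # no free slot: the packet is lost
        # Receiver: drain packets and hand back one credit per freed slot.
        drained = min(queue, drain_rate)
        queue -= drained
        delivered += drained
        if credit_based:
            credits += drained
    return delivered, dropped


print("with credits:   ", simulate(100, 8, 4, 2, credit_based=True))   # (200, 0)
print("without credits:", simulate(100, 8, 4, 2, credit_based=False))  # (200, 194)
```

With credits, the sender is throttled to the receiver's drain rate and nothing is lost; without them, the same offered load overflows the buffer and about half of the packets are dropped once it fills.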

RoCE

- The transport layer is the InfiniBand protocol.

- RoCE comes in two versions: RoCEv1 is implemented on the Ethernet link layer and can only be transmitted within a Layer 2 network; RoCEv2 carries RDMA over UDP/IP and can be deployed on Layer 3 networks.

- It requires RDMA-capable intelligent network adapters but no dedicated switches or routers (switches that support ECN/PFC reduce the packet loss rate), so it has the lowest network construction cost.
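The difference between the two RoCE versions is easiest to see in the packet layout. This sketch lists the headers each version stacks around the InfiniBand Base Transport Header (BTH) and computes the resulting frame size. RoCEv1 frames are identified by EtherType 0x8915 and carry an InfiniBand Global Route Header (GRH) directly over Ethernet, so they cannot cross IP routers; RoCEv2 replaces the GRH with IP and UDP headers (UDP destination port 4791), which is what makes it routable at Layer 3. Assumptions: IPv4 for RoCEv2, no VLAN tag, only the BTH as transport header (operations such as RDMA WRITE add further headers), and preamble and inter-frame gap ignored.

```python
# Header sizes in bytes. Assumptions: IPv4 for RoCEv2, no VLAN tag, only the BTH
# as transport header, preamble and inter-frame gap ignored.
ETHERNET = 14   # destination MAC + source MAC + EtherType
GRH = 40        # InfiniBand Global Route Header (RoCEv1 network layer)
IPV4 = 20       # minimum IPv4 header (RoCEv2 network layer)
UDP = 8
BTH = 12        # InfiniBand Base Transport Header
ICRC = 4        # invariant CRC at the end of every RoCE packet
FCS = 4         # Ethernet frame check sequence

ROCE_V1_ETHERTYPE = 0x8915
ROCE_V2_UDP_PORT = 4791

STACKS = {
    "RoCEv1": [("Ethernet", ETHERNET), ("GRH", GRH), ("BTH", BTH)],
    "RoCEv2": [("Ethernet", ETHERNET), ("IPv4", IPV4), ("UDP", UDP), ("BTH", BTH)],
}
IDENTIFIED_BY = {
    "RoCEv1": f"EtherType 0x{ROCE_V1_ETHERTYPE:04X}",
    "RoCEv2": f"UDP destination port {ROCE_V2_UDP_PORT}",
}


def frame_size(version, payload):
    """Bytes on the wire for one packet carrying `payload` bytes of RDMA data."""
    headers = sum(size for _, size in STACKS[version])
    return headers + payload + ICRC + FCS


def efficiency(version, payload):
    """Fraction of the frame that is application payload."""
    return payload / frame_size(version, payload)


for version, layers in STACKS.items():
    stack = " / ".join(name for name, _ in layers)
    print(f"{version} ({IDENTIFIED_BY[version]}): {stack}, "
          f"{frame_size(version, 1024)} bytes for a 1024-byte payload "
          f"({efficiency(version, 1024):.1%} payload)")
```

Routability, not header savings, is the main point of RoCEv2: with IPv6 instead of IPv4, the IP and UDP headers together (48 bytes) would actually exceed the 40-byte GRH.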

iWARP

- The transport layer is the iWARP protocol.

- iWARP runs on top of TCP in the Ethernet TCP/IP stack and supports both Layer 2 and Layer 3 transmission. Because TCP connections on large-scale networks consume a lot of CPU, it is rarely used.

- iWARP only requires RDMA-capable network adapters, with no dedicated switches or routers, and its cost falls between that of InfiniBand and RoCE.

With its advanced technology but high price, InfiniBand is largely limited to high-performance computing (HPC). The emergence of RoCE and iWARP has reduced the cost of RDMA and helped popularize the technology.

Using these three types of RDMA networks in high-performance storage and computing data centers can greatly reduce data transfer latency and provide higher CPU resource availability for applications.

The InfiniBand network delivers extreme performance to data centers, with transmission latency as low as 100 nanoseconds, one order of magnitude lower than that of Ethernet devices.
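One contributor to this latency gap is the cut-through forwarding that InfiniBand uses. The arithmetic below compares it with store-and-forward switching over a multi-hop path. All parameter values (frame size, header size, hop count, link rate, per-switch processing time) are illustrative assumptions, not measured figures for any product, and propagation delay is ignored.

```python
def serialization_ns(size_bytes, gbps):
    """Time to clock size_bytes onto a link of gbps Gb/s (1 Gb/s = 1 bit per ns)."""
    return size_bytes * 8 / gbps


def store_and_forward_ns(frame, hops, gbps, switch_ns):
    # Each of the `hops` switches buffers the whole frame before forwarding,
    # so the frame is serialized once per link (hops + 1 links).
    return (hops + 1) * serialization_ns(frame, gbps) + hops * switch_ns


def cut_through_ns(frame, header, hops, gbps, switch_ns):
    # Each switch starts forwarding as soon as the header has arrived, so the
    # full frame is serialized once; each hop only adds header time + switching.
    return serialization_ns(frame, gbps) + hops * (serialization_ns(header, gbps) + switch_ns)


# Illustrative assumptions: 4096-byte frame, 64-byte header, 3 switches,
# 100 Gb/s links, 100 ns switching time per hop.
FRAME, HEADER, HOPS, GBPS, SWITCH_NS = 4096, 64, 3, 100, 100
print(f"store-and-forward: {store_and_forward_ns(FRAME, HOPS, GBPS, SWITCH_NS):.2f} ns")
print(f"cut-through:       {cut_through_ns(FRAME, HEADER, HOPS, GBPS, SWITCH_NS):.2f} ns")
```

With these assumed numbers the model gives about 1611 ns for store-and-forward and about 643 ns for cut-through; the gap grows with frame size and hop count, because store-and-forward pays the full serialization delay at every hop.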

RoCE and iWARP networks bring high cost-effectiveness to data centers: they carry RDMA over Ethernet, taking full advantage of RDMA's high performance and low CPU usage without costing much to build.

UDP-based RoCE performs better than TCP-based iWARP and, combined with lossless Ethernet flow control, overcomes its sensitivity to packet loss. RoCE networks have been widely deployed in high-performance data centers across many industries.
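Lossless Ethernet typically relies on Priority-based Flow Control (PFC, IEEE 802.1Qbb): when a receiver's buffer for a priority fills past an XOFF threshold it sends a PAUSE frame, and when the buffer drains below an XON threshold it lets the sender resume. Because the PAUSE takes time to reach the sender, the space above XOFF (the headroom) must absorb whatever is still sent in the meantime. The toy simulation below shows this; the function name `simulate_pfc`, the tick-based timing, and all parameter values are assumptions for illustration, and it ignores multiple priorities and real pause-quanta timing.

```python
from collections import deque


def simulate_pfc(ticks, buffer_slots, xoff, xon, send_rate, drain_rate, pause_delay):
    """Toy model of PFC-style PAUSE/RESUME on one priority of one link.

    Returns (delivered, dropped). A signal the receiver emits at the end of
    tick t takes effect at the sender at the start of tick t + pause_delay.
    """
    queue = delivered = dropped = 0
    sender_paused = False
    pause_requested = False      # receiver's view: has it asked the sender to stop?
    in_flight = deque()          # (tick when effective, paused?) signals on the wire
    for t in range(ticks):
        # PAUSE/RESUME frames that have reached the sender take effect now.
        while in_flight and in_flight[0][0] <= t:
            sender_paused = in_flight.popleft()[1]
        # Sender: transmit a burst unless paused; overflow means a dropped packet.
        if not sender_paused:
            for _ in range(send_rate):
                if queue < buffer_slots:
                    queue += 1
                else:
                    dropped += 1
        # Receiver: drain its ingress buffer.
        drained = min(queue, drain_rate)
        queue -= drained
        delivered += drained
        # Receiver: compare queue depth with the XOFF/XON thresholds.
        if not pause_requested and queue >= xoff:
            pause_requested = True
            in_flight.append((t + pause_delay, True))
        elif pause_requested and queue <= xon:
            pause_requested = False
            in_flight.append((t + pause_delay, False))
    return delivered, dropped


# Same traffic, different headroom (buffer_slots - xoff); values are illustrative.
print("headroom 10:", simulate_pfc(100, 20, xoff=10, xon=4, send_rate=4, drain_rate=2, pause_delay=3))
print("headroom 2: ", simulate_pfc(100, 20, xoff=18, xon=4, send_rate=4, drain_rate=2, pause_delay=3))
```

With enough headroom no packet is dropped; with the XOFF threshold set too close to the buffer size, the packets sent while the PAUSE is still in flight overflow the buffer, which is exactly the loss that RDMA over Ethernet is sensitive to.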

Conclusion

With the development of 5G, artificial intelligence, the industrial Internet, and other new fields, RDMA will be used more and more widely and will contribute greatly to the performance of data centers.
