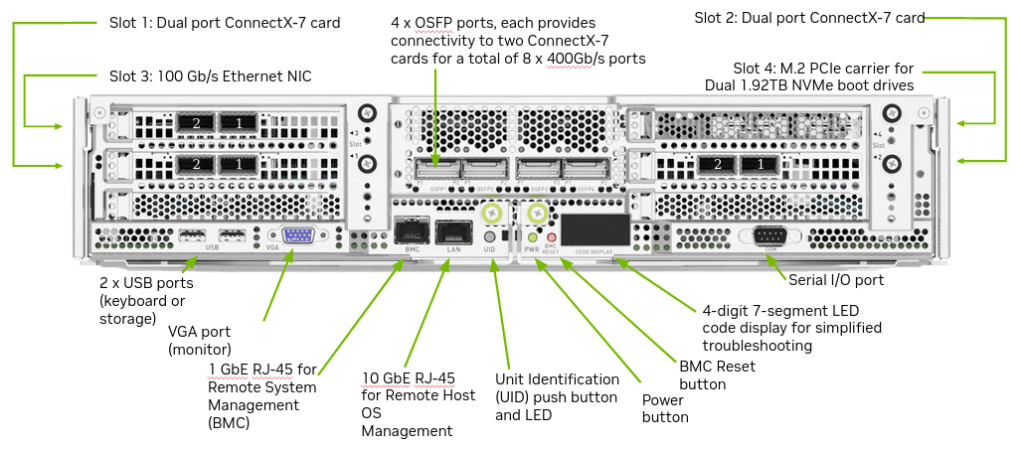

The NVIDIA DGX H100, released in 2022, is equipped with 8 single-port ConnectX-7 network cards, each supporting NDR 400 Gb/s, and 2 dual-port BlueField-3 DPUs (200 Gb/s) that support both InfiniBand (IB) and Ethernet networks. The figure shows its appearance.

The DGX H100 has 4 QSFP56 ports for the storage network and the in-band management network. In addition, there is one 10G Ethernet port for remote host OS management and one 1G Ethernet port for remote system management.

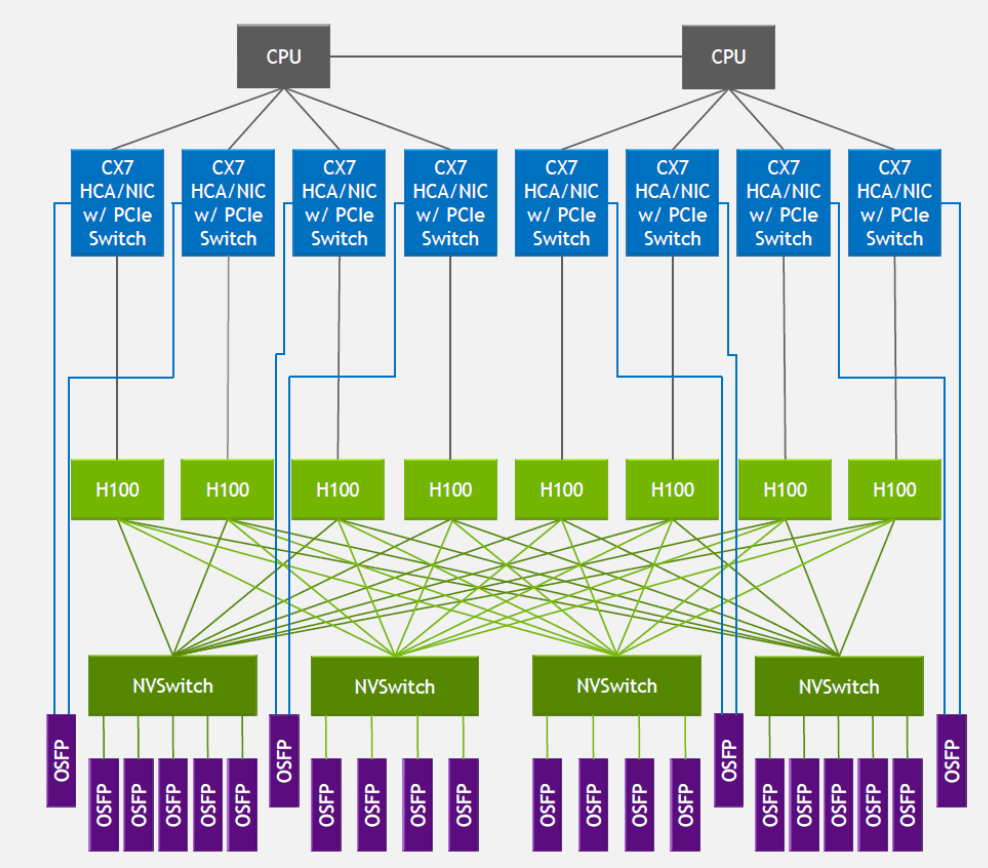

The figure of the server's internal network topology shows 4 OSFP ports for the computing network (in purple). The blue blocks are network cards, which serve both as network cards and as PCIe switch expansion, bridging the CPUs and GPUs.

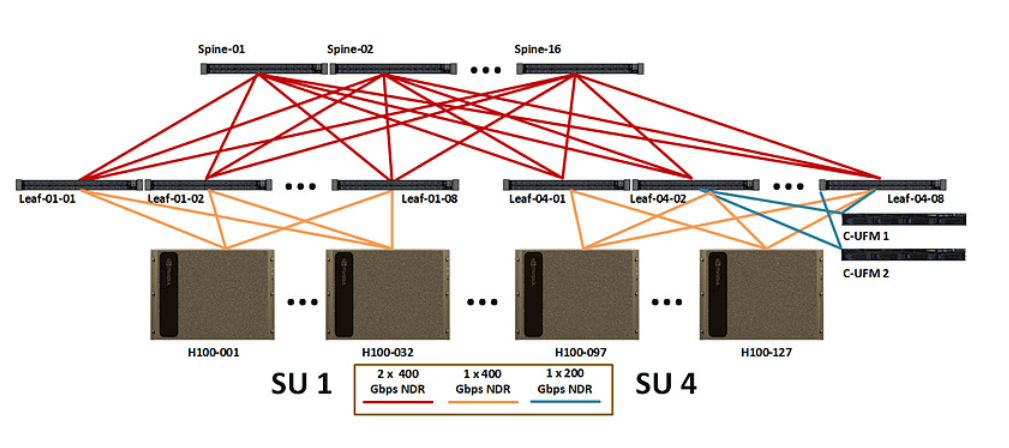

If the NVIDIA SuperPOD NVLink cluster interconnection scheme is adopted, 32 DGX H100 servers are interconnected through external NVLink switches. The 8 GPUs inside each server connect to 4 NVSwitch modules, each NVSwitch module corresponds to 4-5 OSFP optical modules, for a total of 18 OSFPs, and these OSFPs connect to 18 external NVLink switches. (The H100 servers currently on the market do not have these 18 OSFP modules.) This article does not discuss the NVLink networking method and focuses on the IB networking method.

According to the NVIDIA reference design document, in a DGX H100 server cluster every 32 DGX H100s form a scalable unit (SU). Every 4 DGX H100s are placed in a separate rack (the power of each rack is estimated to be close to 40 kW), and the various switches are placed in two independent racks. Each SU therefore contains 10 racks: 8 for servers and 2 for switches.

The computing network only needs a two-layer Spine-Leaf design built from Mellanox QM9700 switches. The network topology is shown in the following figure.
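The rack arithmetic above can be checked with a short sketch. The function name and parameters are hypothetical, chosen only for illustration. The per-server power figure is a rough division of the estimated 40 kW rack power by 4 servers and ignores any other equipment in the rack.

```python
import math

def racks_per_su(servers_per_su=32, servers_per_rack=4, switch_racks=2):
    """Return (server_racks, total_racks) for one SU."""
    server_racks = math.ceil(servers_per_su / servers_per_rack)
    return server_racks, server_racks + switch_racks

server_racks, total_racks = racks_per_su()
print(server_racks, total_racks)  # 8 10

# Rough per-server share of the estimated rack power (assumption: the
# ~40 kW estimate is spread evenly over the 4 servers in a rack).
estimated_rack_kw = 40
print(estimated_rack_kw / 4)  # 10.0
```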

Switch usage: every 32 DGX H100s form an SU with 8 Leaf switches, and a 128-server DGX H100 cluster contains 4 SUs, for a total of 32 Leaf switches. Each DGX H100 in an SU must connect to all 8 Leaf switches of that SU. Because each server has only 4 OSFP ports for the computing network, an 800G optical module is installed in each port and each OSFP port is broken out into two QSFP ports, which gives each DGX H100 a connection to each of the 8 Leaf switches. Each Leaf switch has 16 uplink ports that connect to 16 Spine switches.
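The server-to-Leaf wiring described above can be sketched as a small model. One detail is an assumption not stated in the text: link number `i` of every server goes to Leaf switch `i` of its own SU. All constant and function names are hypothetical.

```python
from collections import defaultdict

SERVERS_PER_SU = 32
NUM_SU = 4
OSFP_PORTS_PER_SERVER = 4
LINKS_PER_OSFP = 2  # each OSFP port is split into two links

def build_links():
    """Return a list of ((su, server), (su, leaf)) compute-network links."""
    links = []
    for su in range(NUM_SU):
        for server in range(SERVERS_PER_SU):
            for port in range(OSFP_PORTS_PER_SERVER):
                for lane in range(LINKS_PER_OSFP):
                    # Assumption: link i of a server lands on Leaf i of its SU.
                    leaf = port * LINKS_PER_OSFP + lane
                    links.append(((su, server), (su, leaf)))
    return links

links = build_links()
leaves_per_server = defaultdict(set)
downlinks_per_leaf = defaultdict(int)
for server, leaf in links:
    leaves_per_server[server].add(leaf)
    downlinks_per_leaf[leaf] += 1

print(len(leaves_per_server))                           # 128 servers
print({len(s) for s in leaves_per_server.values()})     # {8}
print(len(downlinks_per_leaf))                          # 32 Leaf switches
print(set(downlinks_per_leaf.values()))                 # {32}
```

Under this assumption every server reaches 8 distinct Leaf switches, and every Leaf switch carries 32 server-facing links, one per server in its SU.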

Optical module usage: 400G optical modules are required for the downlink ports of the Leaf switches, and the demand is 32 × 8 × 4 = 1024. 800G optical modules are used for the uplink ports of the Leaf switches, and the demand is 16 × 8 × 4 = 512. 800G optical modules are also used for the downlink ports of the Spine switches. Therefore, in the 128-server DGX H100 cluster, the computing network uses 1536 800G optical modules and 1024 400G optical modules.
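The module totals can be reproduced with a few lines of arithmetic. This sketch reads the two demand figures as the products 32 × 8 × 4 and 16 × 8 × 4, which is an interpretation. The split of the 1536 800G modules into three groups of 512 is also an assumption: the text states only the total, and the variable names are hypothetical.

```python
SERVERS_PER_SU = 32
LINKS_PER_SERVER = 8     # one 400G link per ConnectX-7 card
LEAVES_PER_SU = 8
UPLINKS_PER_LEAF = 16
NUM_SU = 4

# 400G modules: one per server-facing link.
modules_400g = SERVERS_PER_SU * LINKS_PER_SERVER * NUM_SU      # 32 * 8 * 4

# 800G modules on the Leaf uplink ports.
leaf_uplink_800g = UPLINKS_PER_LEAF * LEAVES_PER_SU * NUM_SU   # 16 * 8 * 4

# Assumption: each Leaf uplink module faces one module on a Spine downlink.
spine_downlink_800g = leaf_uplink_800g

# Assumption: Leaf downlinks use twin-port 800G modules, each carrying
# two 400G links toward the servers.
leaf_downlink_800g = modules_400g // 2

total_800g = leaf_uplink_800g + spine_downlink_800g + leaf_downlink_800g
print(modules_400g, total_800g)  # 1024 1536
```

With these assumptions the totals match the 1024 and 1536 quoted above.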

Related Products:

- NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module, $550.00
- NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module, $650.00
- NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module, $650.00
- NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module, $900.00
- NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module, $1199.00
- NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module, $700.00
- NVIDIA(Mellanox) MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module, $139.00
- NVIDIA MFP7E10-N010 Compatible 10m (33ft) 8 Fibers Low Insertion Loss Female to Female MPO Trunk Cable Polarity B APC to APC LSZH Multimode OM3 50/125, $47.00
- NVIDIA MCP7Y00-N003-FLT Compatible 3m (10ft) 800G Twin-port OSFP to 2x400G Flat Top OSFP InfiniBand NDR Breakout DAC, $260.00
- NVIDIA MCP7Y70-H002 Compatible 2m (7ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable, $155.00
- NVIDIA MCA4J80-N003-FTF Compatible 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Finned top on other, $600.00
- NVIDIA MCP7Y10-N002 Compatible 2m (7ft) 800G InfiniBand NDR Twin-port OSFP to 2x400G QSFP112 Breakout DAC, $190.00